High Performance SwiftData Apps

My 3000-word apology for killing my iPhone processor

A few weeks ago, I released a new app to the world. Unfortunately, nobody seemed to like it very much. Let’s chalk it up to a rare Jacob Bartlett miss.

But there is an untold story behind the development of the app. A story with nothing to do with my outdated early-2000s anime references.

A story of SwiftData gone wrong.

And a story of performance pain, of data integrity, and of a successful migration.

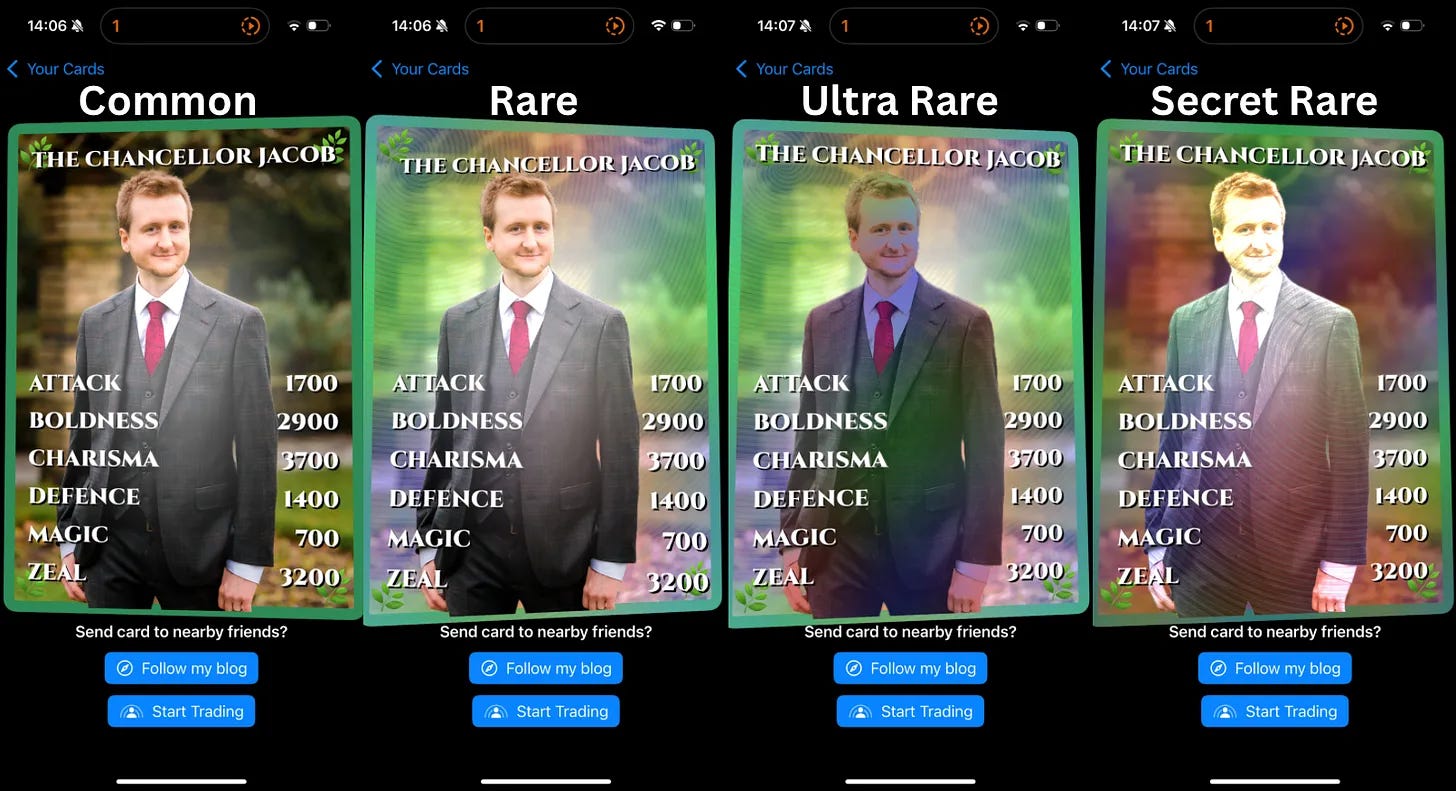

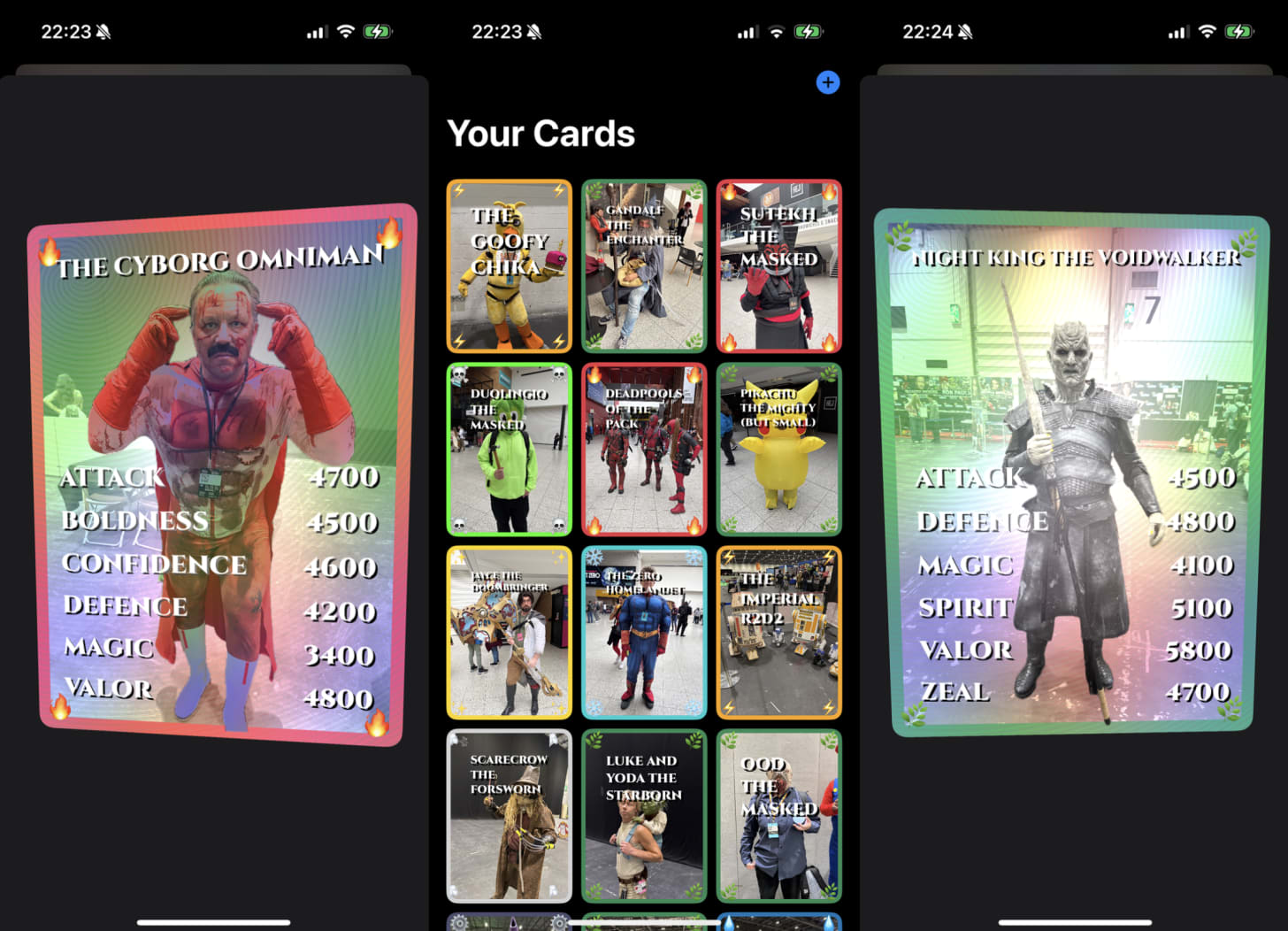

I took my nearly-complete app for a test-drive at London Comic-Con. The app took photos and converted them into Top-Trumps-style trading cards with customised names, relevant stat ratings, and different UI effects based on rarity.

I wanted to collect some cool Comic-Con costumes.

In product terms, the app was a massive success. The cosplayers looked great, the shader effects popped, and my client-side AI model was handing out accurate names and stats.

But I had twin problems creeping up…

Every time I saved a new card, the app would get slower.

And slower.

And slower.

My battery dwindled rapidly.

I went into low-power mode.

And slower.

And hotter.

And it started crashing.

And got slower.

You get the idea.

Eventually I was waiting 20 seconds between taking a photo and being able to save the card to my collection. Then it would just crash every time.

Something was very wrong with the way I’d implemented SwiftData.

I got home. I knew I had to fix it.

But I also needed to keep my device safe, otherwise I’d lose my hard-earned Comic-Con collection.

So I had two objectives:

Optimise my SwiftData usage to make my app performant…

…without losing any of the cards I’d already collected.


Diagnosing the Problem

When improving the performance of an app, I usually follow a simple 3-step process:

1. Test on a real device to identify user-facing problems.

2. Profile the app using Instruments to identify bottlenecks.

3. Implement code improvements, using steps 1 and 2 as a guide.

This find-problems-first approach avoids bike-shedding, keeping the focus on the real bottlenecks.

But frankly, I don’t have to try very hard to identify the user-facing problem. Just look at this screen recording of me taking a photo of Mario:

That isn’t buffering, that’s a 15-second-long UI hang.

That isn’t Substack’s crappy video compression, those are massive scroll hitches.

Because I’m a good scientist, let’s connect the app to Instruments and take another photo just to make sure. Uh, it crashes again.

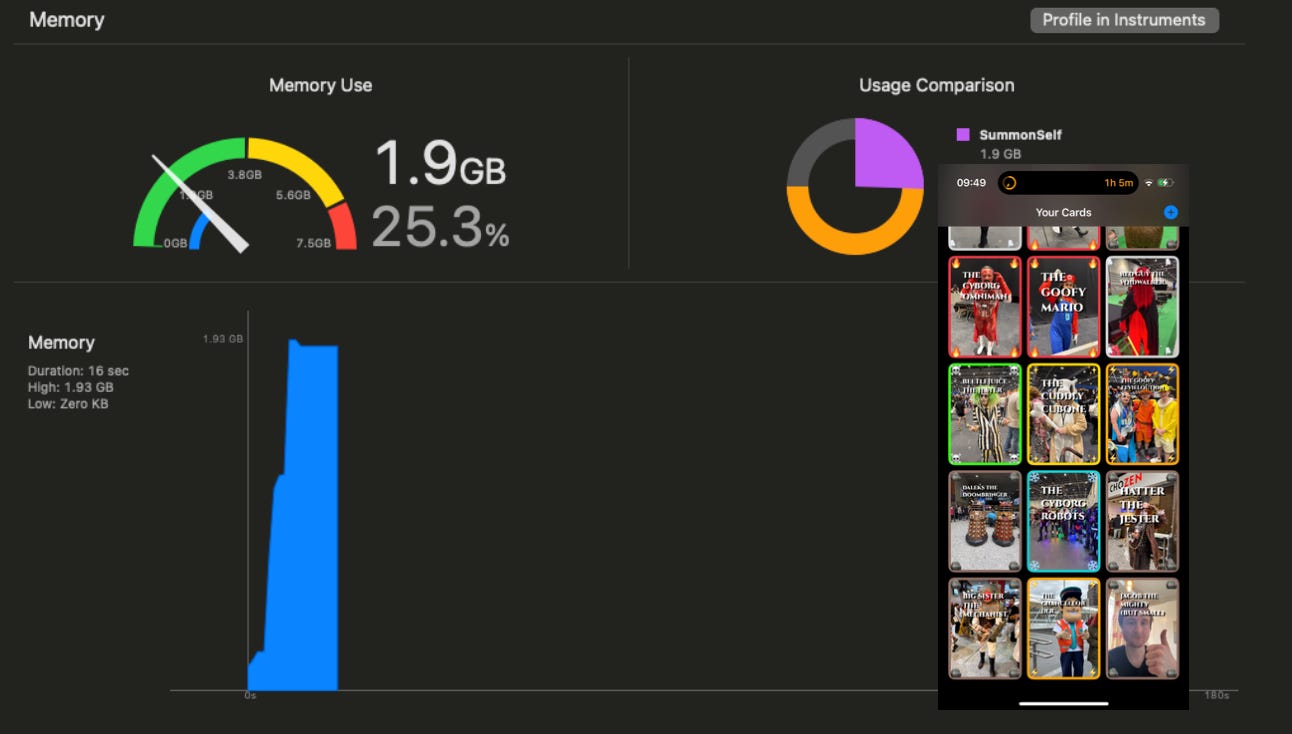

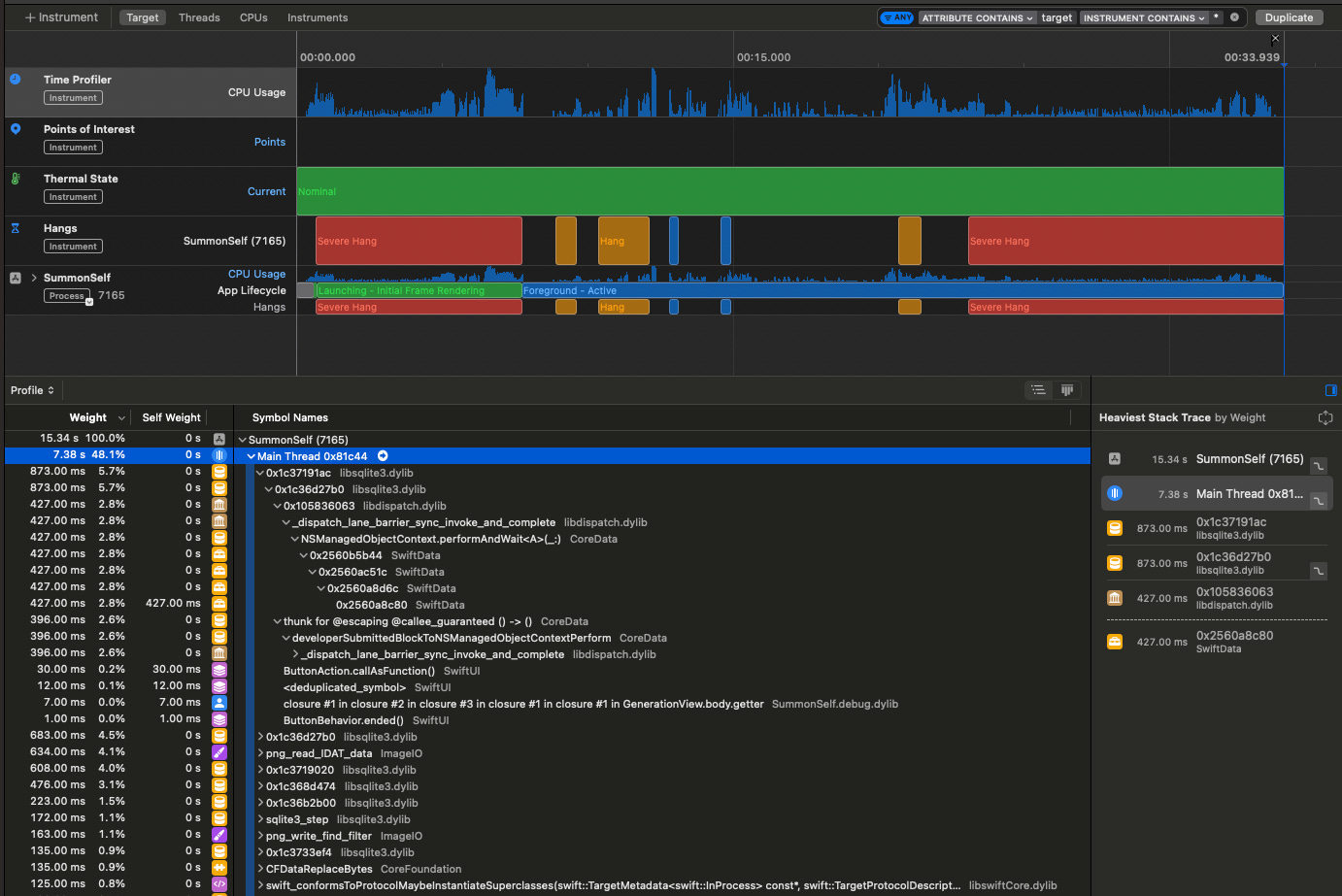

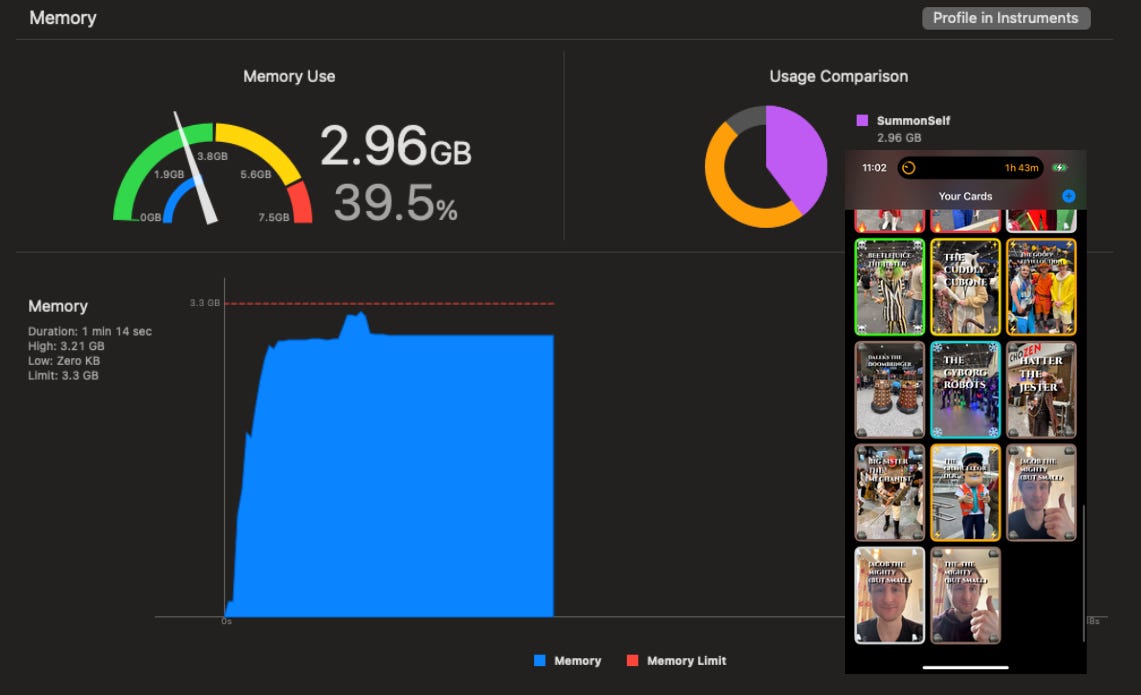

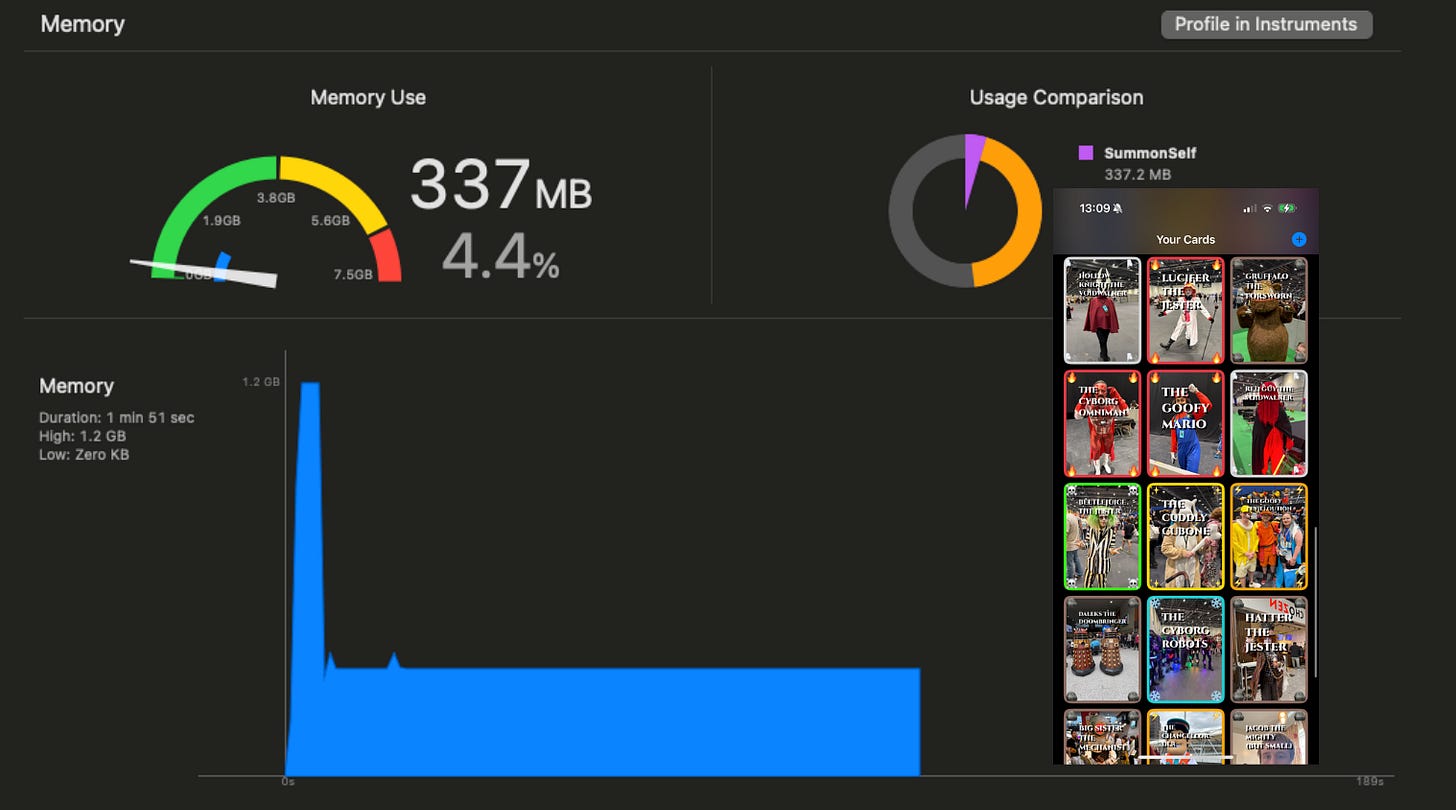

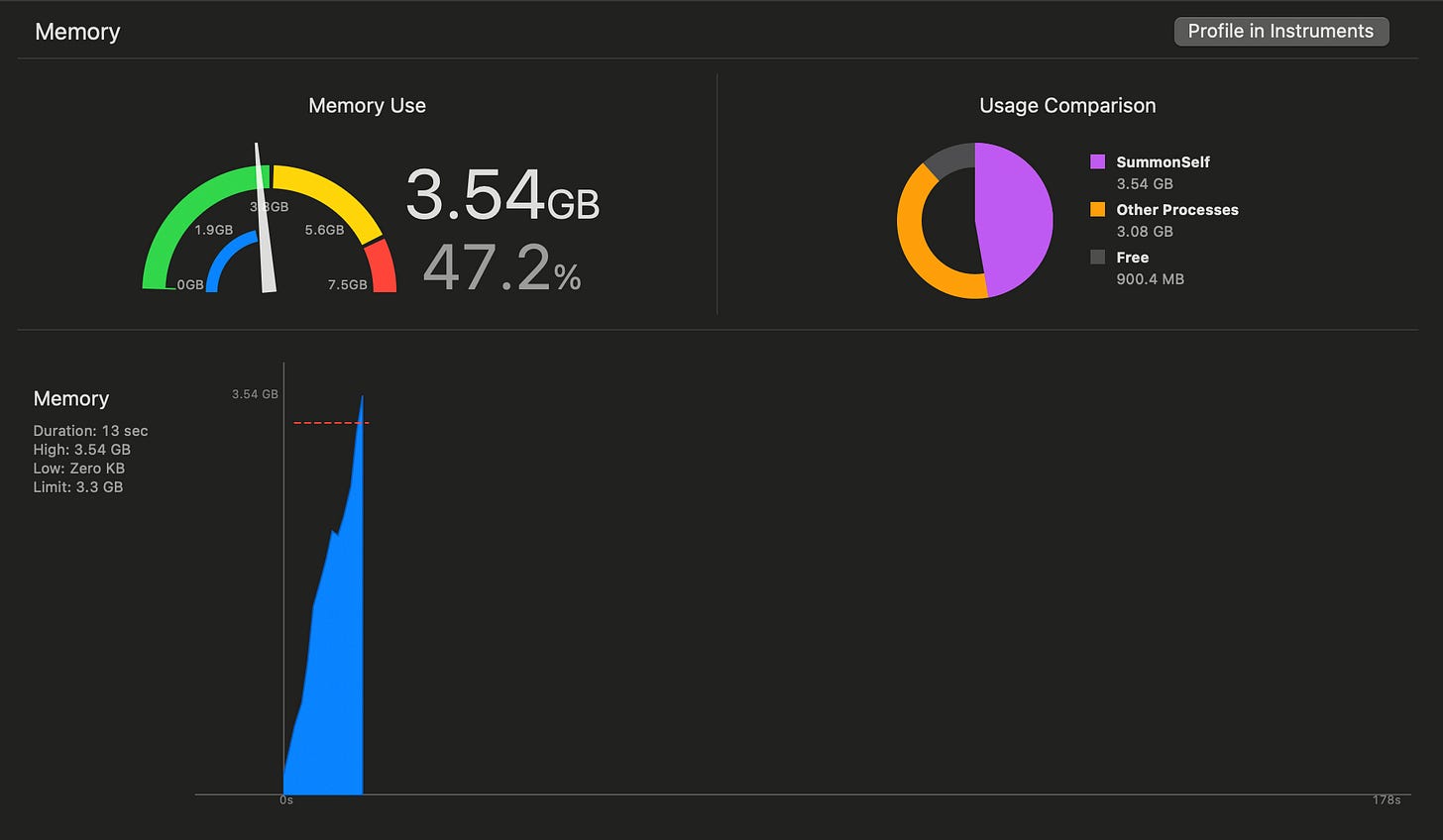

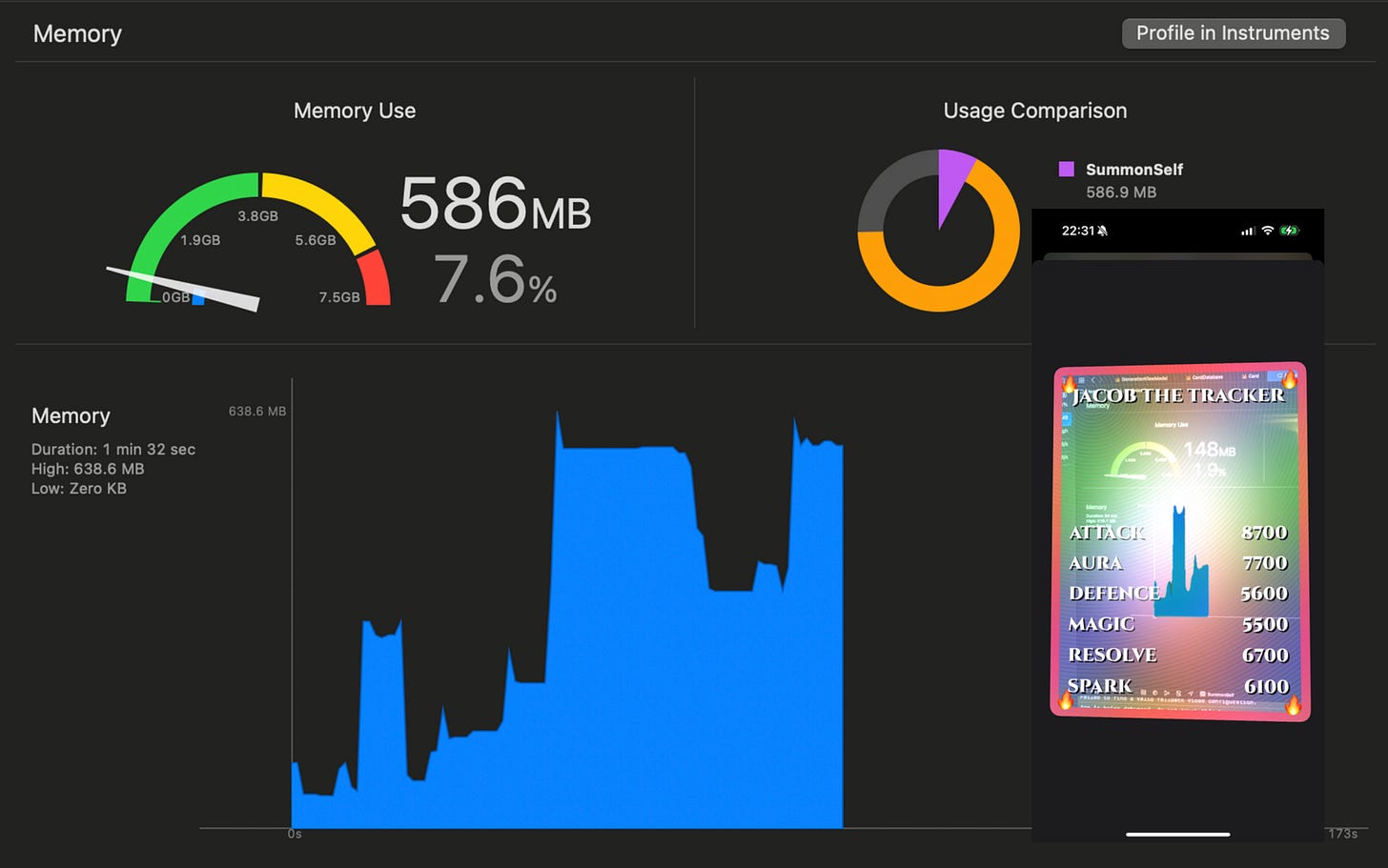

Let’s see what was happening in the Xcode memory debugger.

When my main screen loads, it stays black for a long while before displaying all the images in my LazyVStack at once. That’s a big memory spike.

The crash happened during the second big UI freeze, when saving the photo. Memory gradually creeps up during the hang, and eventually the system kills the app for being a resource hog.

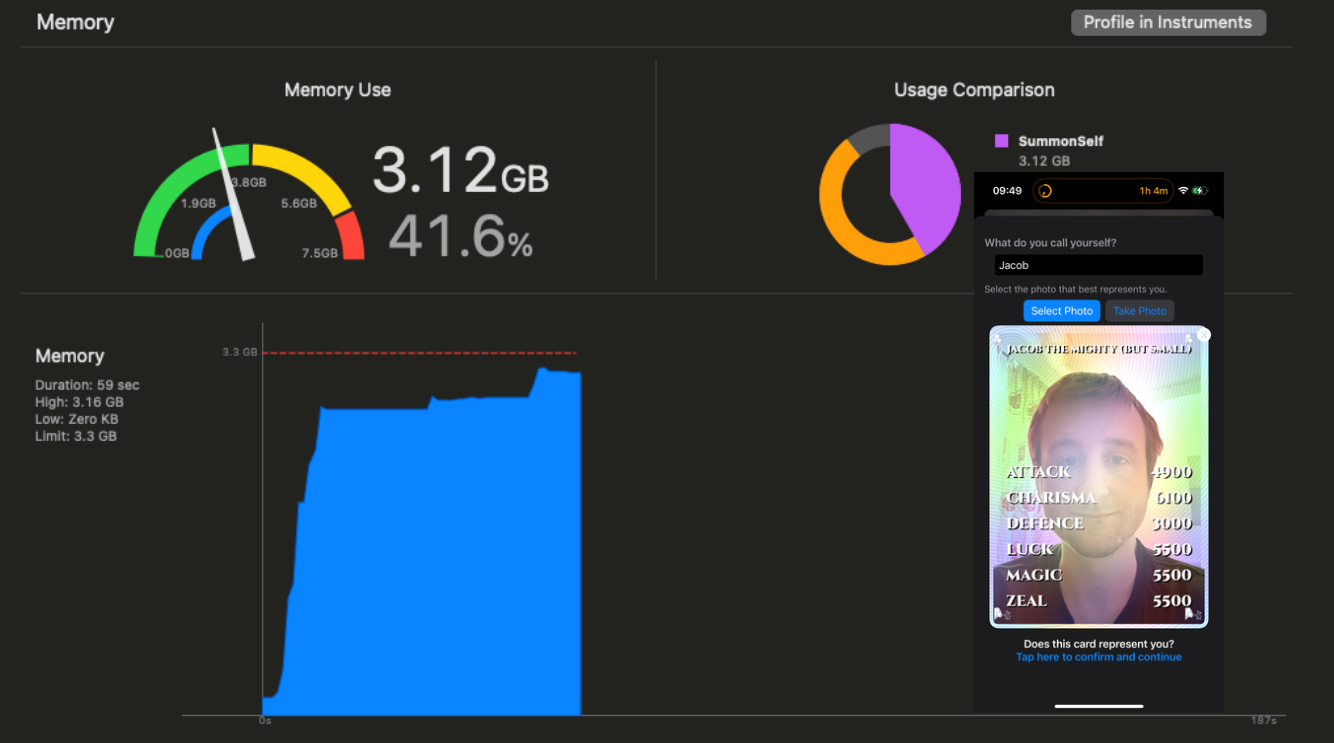

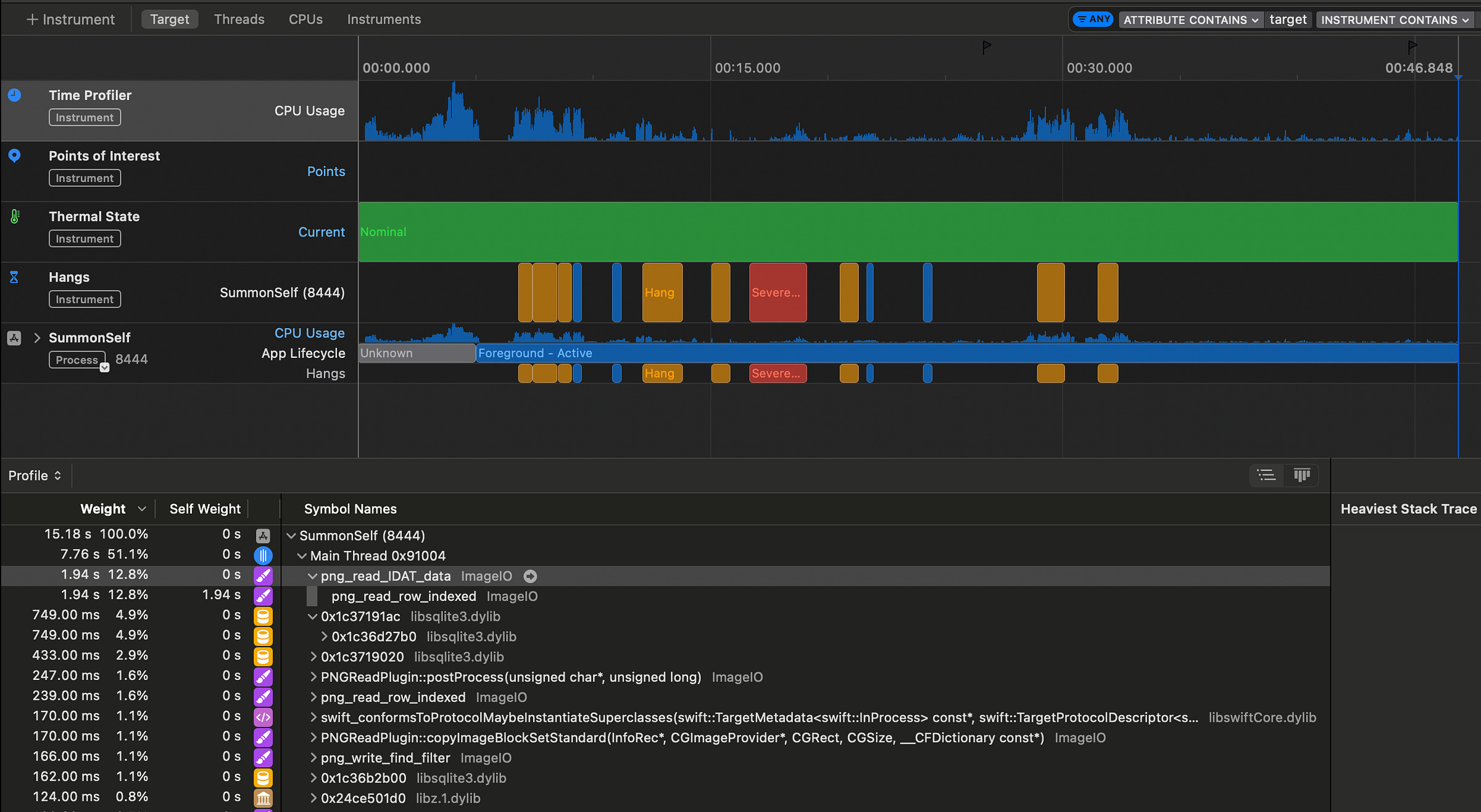

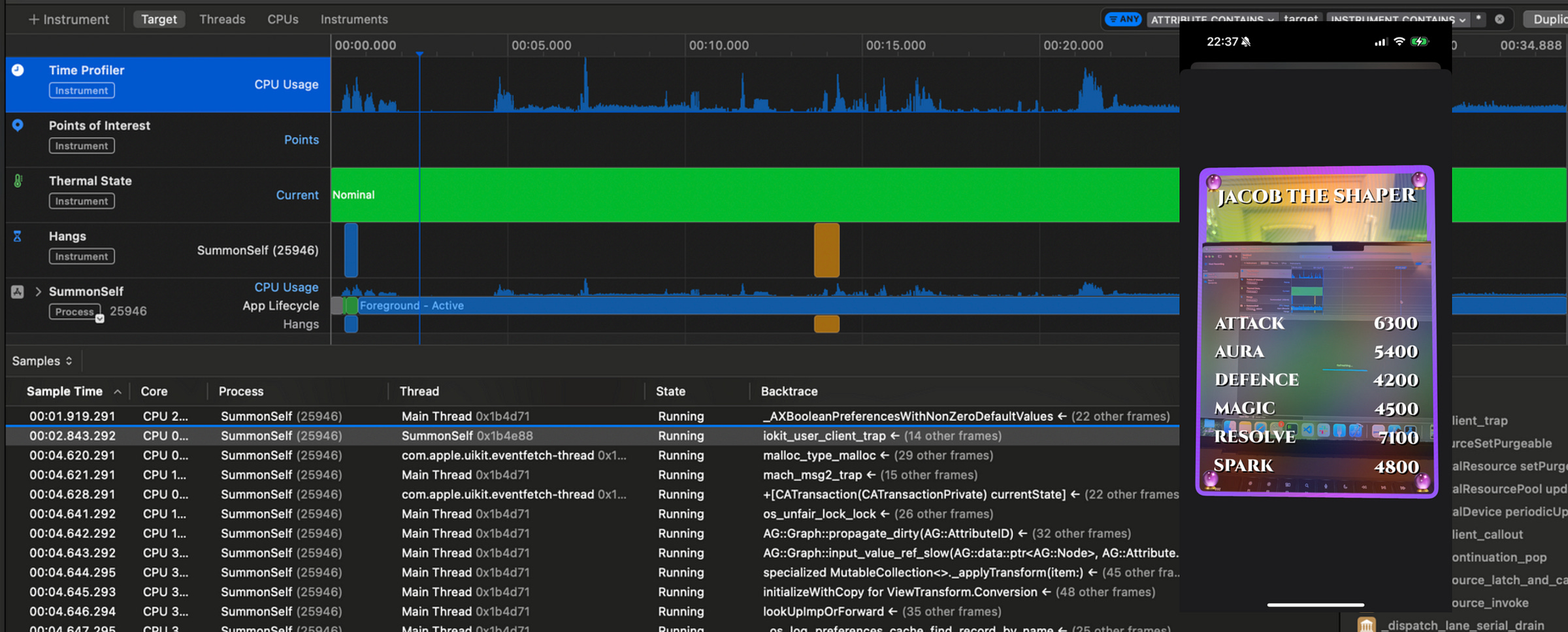

Now, let’s check out the trace in Instruments. We can clearly see the severe hangs on launch and when saving a photo, right up until the crash, when recording stops.

Looking at the libraries called by the main thread, we see the full SwiftData-to-SQL abstraction pyramid:

libsqlite3.dylib

CoreData

SwiftData

It doesn’t take a genius to realise I’m doing something badly wrong with SwiftData.

Optimising SwiftData

Two things were very wrong with my SwiftData approach.

First and foremost, it was performing all its work on the main thread. While we want to present our models in our UI, we don’t need to do all the fetching work on the main thread too.

Secondly, I was making a big mistake with my data modelling. Somewhere, I was doing some incredibly heavy work; enough to push the system past its memory limits.

Let’s get started.

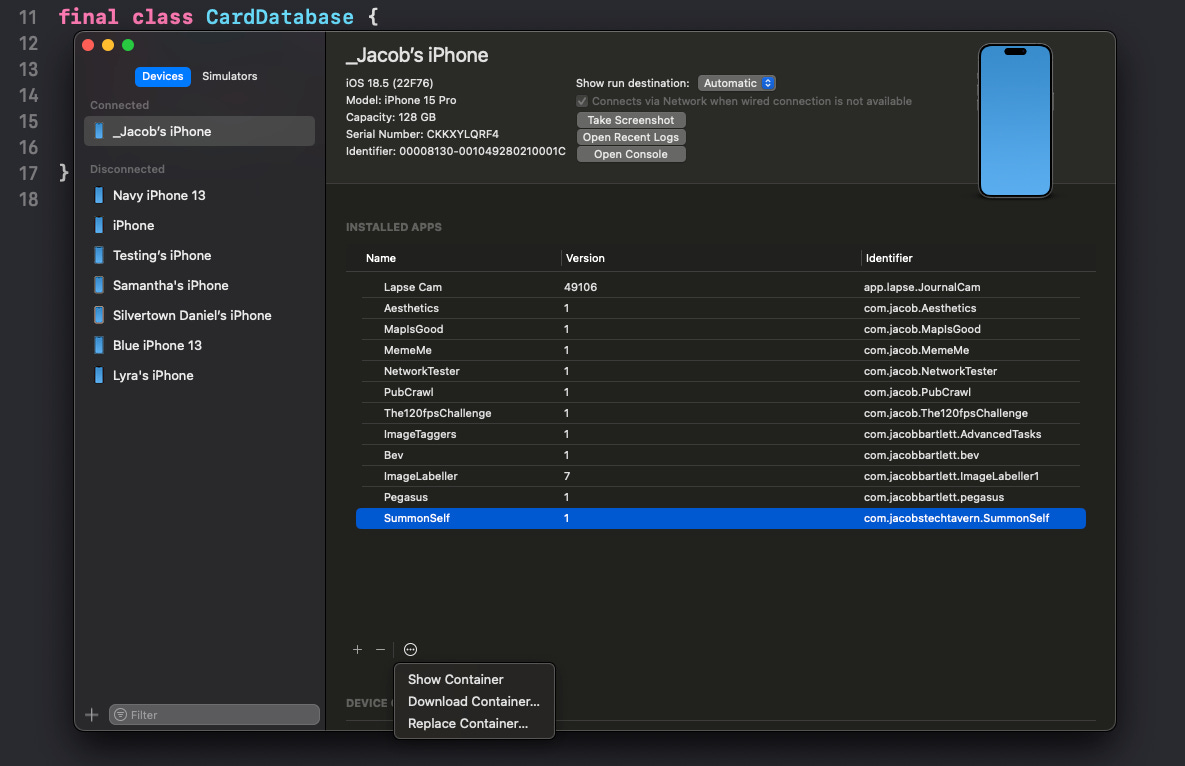

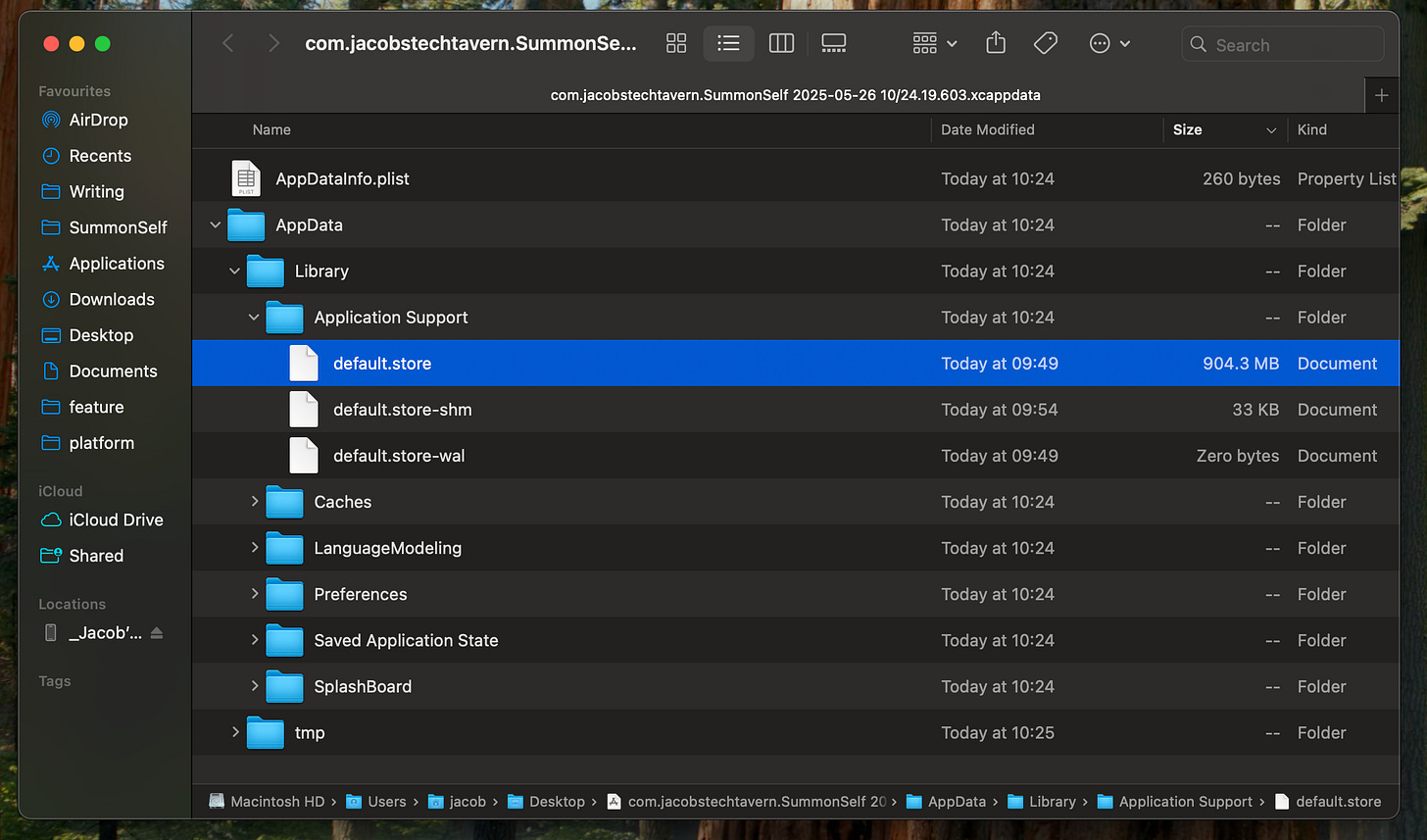

Backing up the container

I don’t want to mess this up.

I am scared to risk my beautiful comic-con snaps.

Therefore, before doing any work, I will back up my app container to my computer.

At 904.3MB, this is, to use the parlance of generation Z, a hefty heckin’ chonker. Look, I never said the MVP was optimised, okay?

If I did lose all my data, I could place this default.store into my Xcode project as a resource, then recover it at launch by copying it to the model file’s location in the applicationSupportDirectory.
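A minimal sketch of that recovery step, assuming the backup was added to the app bundle under its original name, `default.store`, and that SwiftData’s default store sits directly in Application Support. SQLite can also keep `-wal` and `-shm` sidecar files beside the store, so the sketch copies those too if they were bundled. The function name and parameters are hypothetical:

```swift
import Foundation

/// Hypothetical helper: copies a bundled backup of the SwiftData store into
/// place, but only when no store exists there yet. Returns true if it restored.
@discardableResult
func restoreStoreIfNeeded(
    backupDirectory: URL,
    storeDirectory: URL,
    storeName: String = "default.store",
    fileManager: FileManager = .default
) throws -> Bool {
    let storeURL = storeDirectory.appendingPathComponent(storeName)
    // Never overwrite a live store.
    guard !fileManager.fileExists(atPath: storeURL.path) else { return false }

    let backupURL = backupDirectory.appendingPathComponent(storeName)
    guard fileManager.fileExists(atPath: backupURL.path) else { return false }

    try fileManager.createDirectory(at: storeDirectory, withIntermediateDirectories: true)

    // Copy the main file plus any SQLite write-ahead-log sidecars that were backed up.
    for suffix in ["", "-wal", "-shm"] {
        let source = backupDirectory.appendingPathComponent(storeName + suffix)
        guard fileManager.fileExists(atPath: source.path) else { continue }
        try fileManager.copyItem(
            at: source,
            to: storeDirectory.appendingPathComponent(storeName + suffix)
        )
    }
    return true
}
```

At launch, before creating the `ModelContainer`, you might call it with `Bundle.main.resourceURL` as the backup directory and `URL.applicationSupportDirectory` as the store directory. Refusing to overwrite an existing store keeps the restore from clobbering newer data.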

Ok, I’m less scared now. Back to it.

Moving off the main thread

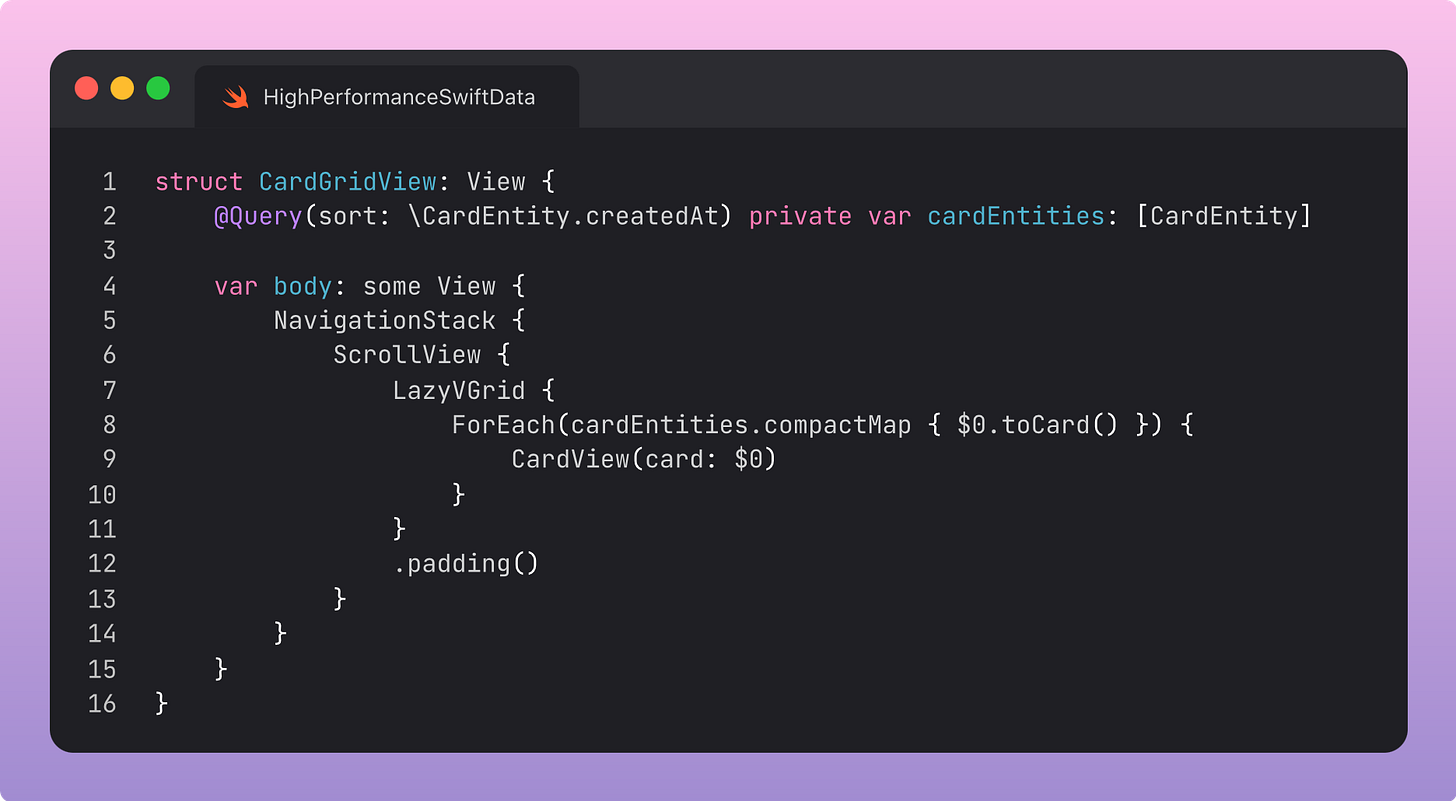

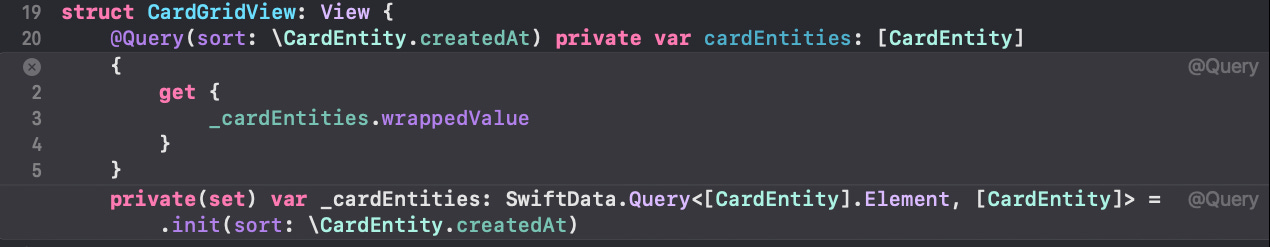

The main culprit for our hangs is an innocuous-looking property wrapper that lives on our main collection view:
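For reference, a typical `@Query` setup looks roughly like this. It is a hypothetical reconstruction with placeholder names (`CardEntity`, `CollectionView`), not the app’s actual code:

```swift
import SwiftData
import SwiftUI

// Hypothetical model standing in for the app's card entity.
@Model
final class CardEntity {
    var name: String
    var createdAt: Date

    init(name: String, createdAt: Date = .now) {
        self.name = name
        self.createdAt = createdAt
    }
}

struct CollectionView: View {
    // @Query fetches through the environment's modelContext, which the
    // .modelContainer(_:) modifier sets to the container's main-actor context,
    // and re-runs whenever matching models change.
    @Query(sort: \CardEntity.createdAt, order: .reverse)
    var cardEntities: [CardEntity]

    var body: some View {
        ScrollView {
            LazyVStack {
                ForEach(cardEntities) { card in
                    Text(card.name)
                }
            }
        }
    }
}
```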

Uh, hold on, it’s not a property wrapper, it’s a macro. Who signed off on this syntax!?

Let’s take a look inside.

When the view appears, this query fetches the card data from SwiftData. It’s cached on the synthesised _cardEntities property.

That’s one of my big bugbears with SwiftData, frankly. It is designed by default to live on the main thread and be accessed via property wrappers, erm, I mean macros.

Fortunately, some devilishly handsome genius (no, not Antoine, I meant me) already found a way to use SwiftData outside the context of SwiftUI, unlocking the ability to move ourselves off the main thread.

How to use SwiftData outside SwiftUI


Let’s implement this technique.

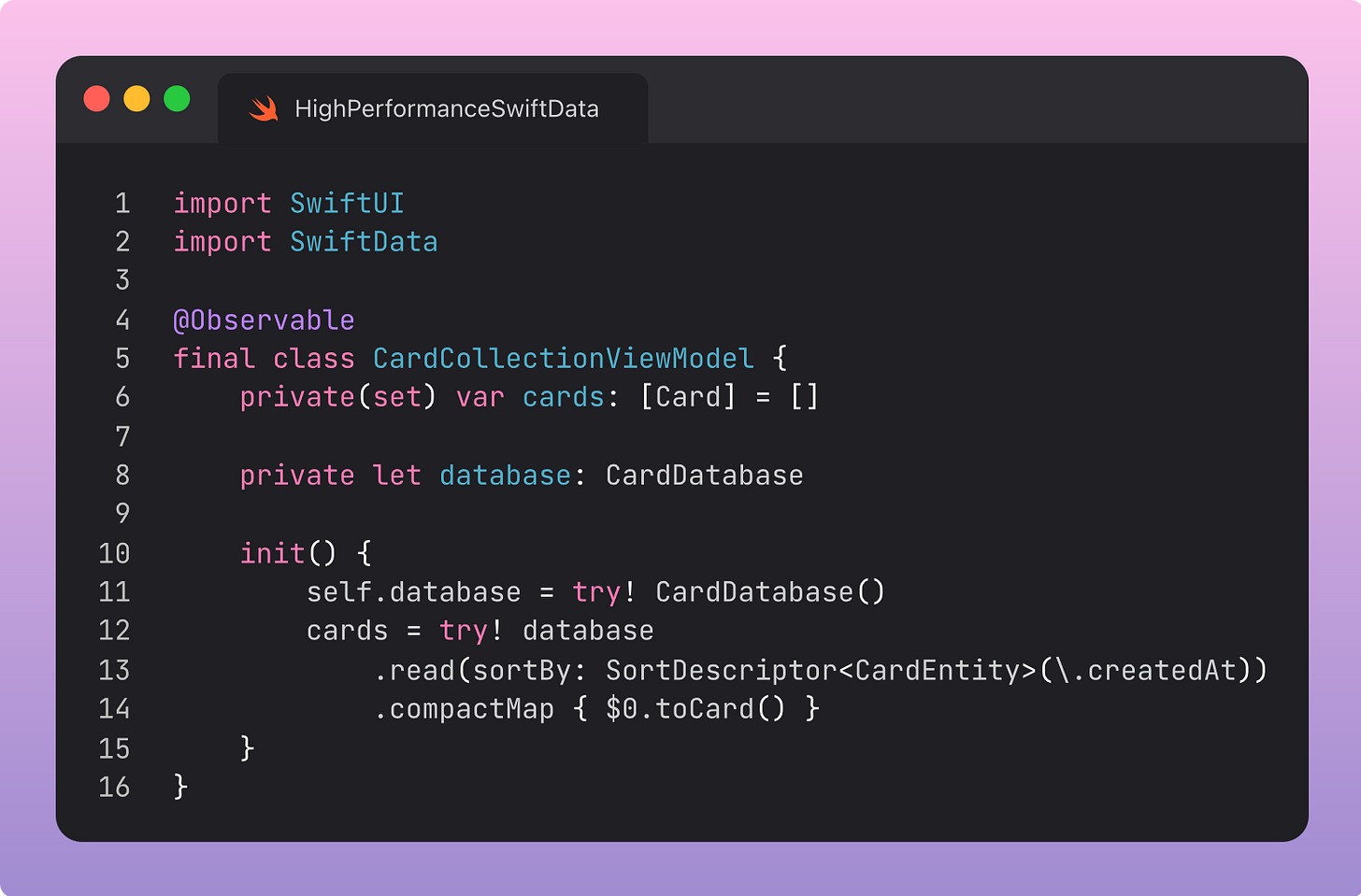

Migrating our Reads to the view model

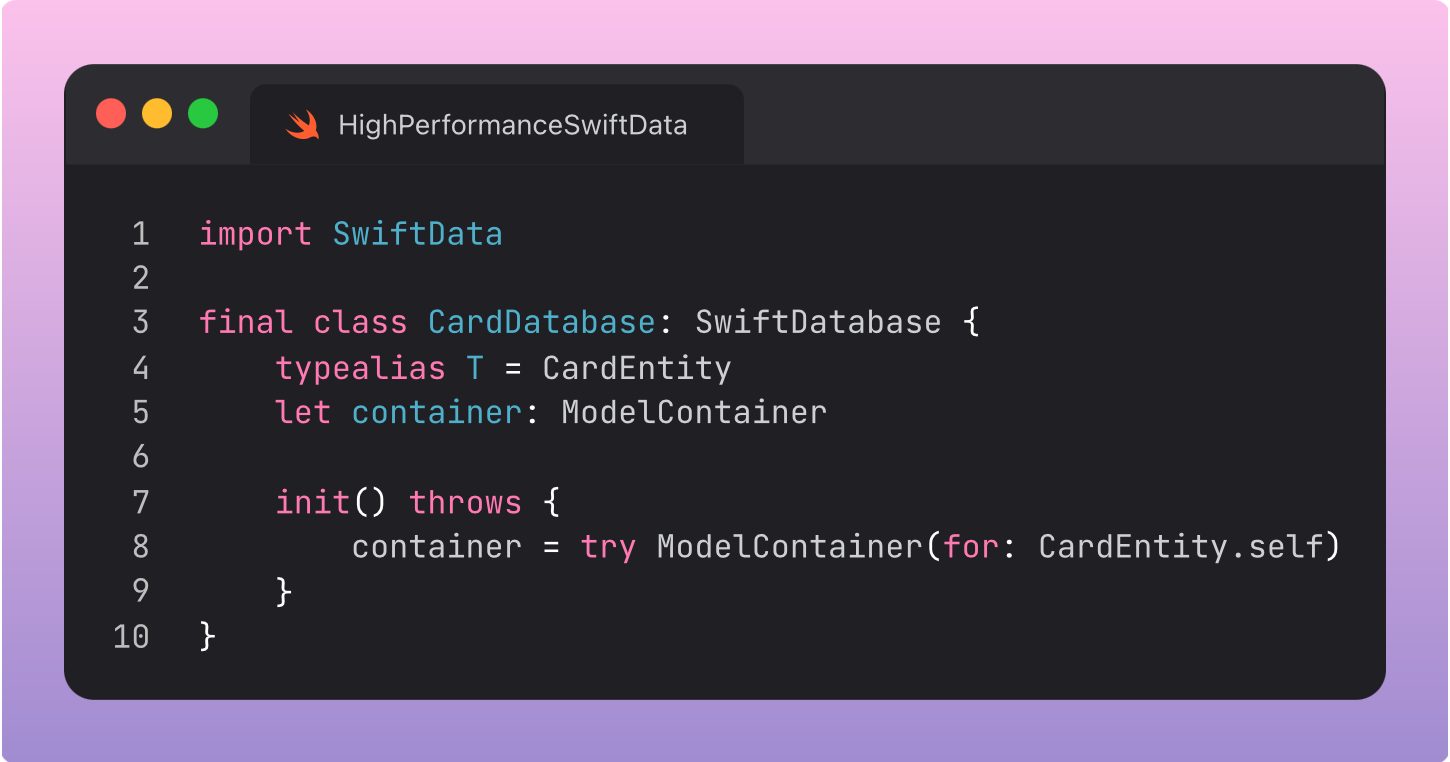

We can start by using my generic SwiftDatabase to set up a database that stores our card entities.

Now we can move all the cards into the view model instead of relying on the main-thread-bound @Query macro:

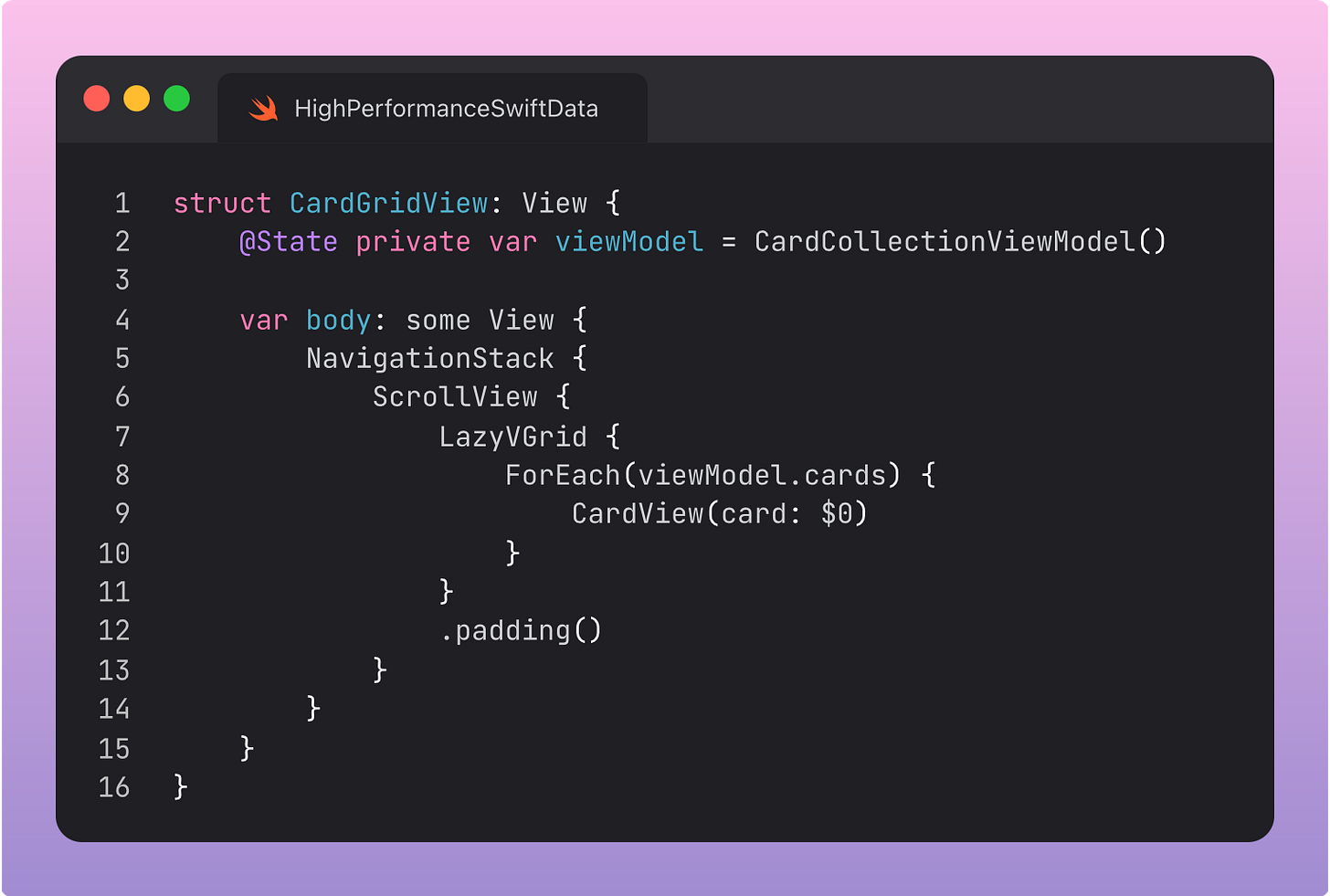

And now I can adjust my view to display these:

This tiny shift away from the SwiftUI-embedded macro into a view model gives us the ability to load everything off the main thread, and even parallelise if we want to.
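One way to make that off-main loading explicit is sketched below. It is not the author’s `SwiftDatabase`. It assumes iOS 17’s `@ModelActor`, which gives an actor its own `ModelContext`, and it returns plain `Sendable` snapshots because `@Model` objects belong to the context that fetched them and shouldn’t be handed across actors. `CardEntity`, `CardSummary`, `CardReader` and `CardListViewModel` are hypothetical names:

```swift
import Foundation
import Observation
import SwiftData

// Hypothetical model standing in for the app's card entity.
@Model
final class CardEntity {
    var name: String
    var createdAt: Date

    init(name: String, createdAt: Date = .now) {
        self.name = name
        self.createdAt = createdAt
    }
}

/// Plain value snapshot of a card; safe to pass from the reader actor to the UI.
struct CardSummary: Sendable, Identifiable {
    let id: PersistentIdentifier
    let name: String
}

/// @ModelActor synthesises init(modelContainer:) and an actor-owned modelContext.
@ModelActor
actor CardReader {
    func fetchSummaries() throws -> [CardSummary] {
        let descriptor = FetchDescriptor<CardEntity>(
            sortBy: [SortDescriptor(\.createdAt, order: .reverse)]
        )
        return try modelContext.fetch(descriptor).map {
            CardSummary(id: $0.persistentModelID, name: $0.name)
        }
    }
}

/// The view observes `cards` instead of owning an @Query.
@MainActor
@Observable
final class CardListViewModel {
    private(set) var cards: [CardSummary] = []
    private let reader: CardReader

    init(container: ModelContainer) {
        reader = CardReader(modelContainer: container)
    }

    func loadCards() async {
        // The main actor is free to keep rendering while it awaits the reader.
        cards = (try? await reader.fetchSummaries()) ?? []
    }
}
```

The view would then call `await viewModel.loadCards()` from a `.task` modifier and iterate over `viewModel.cards` instead of an `@Query` array.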

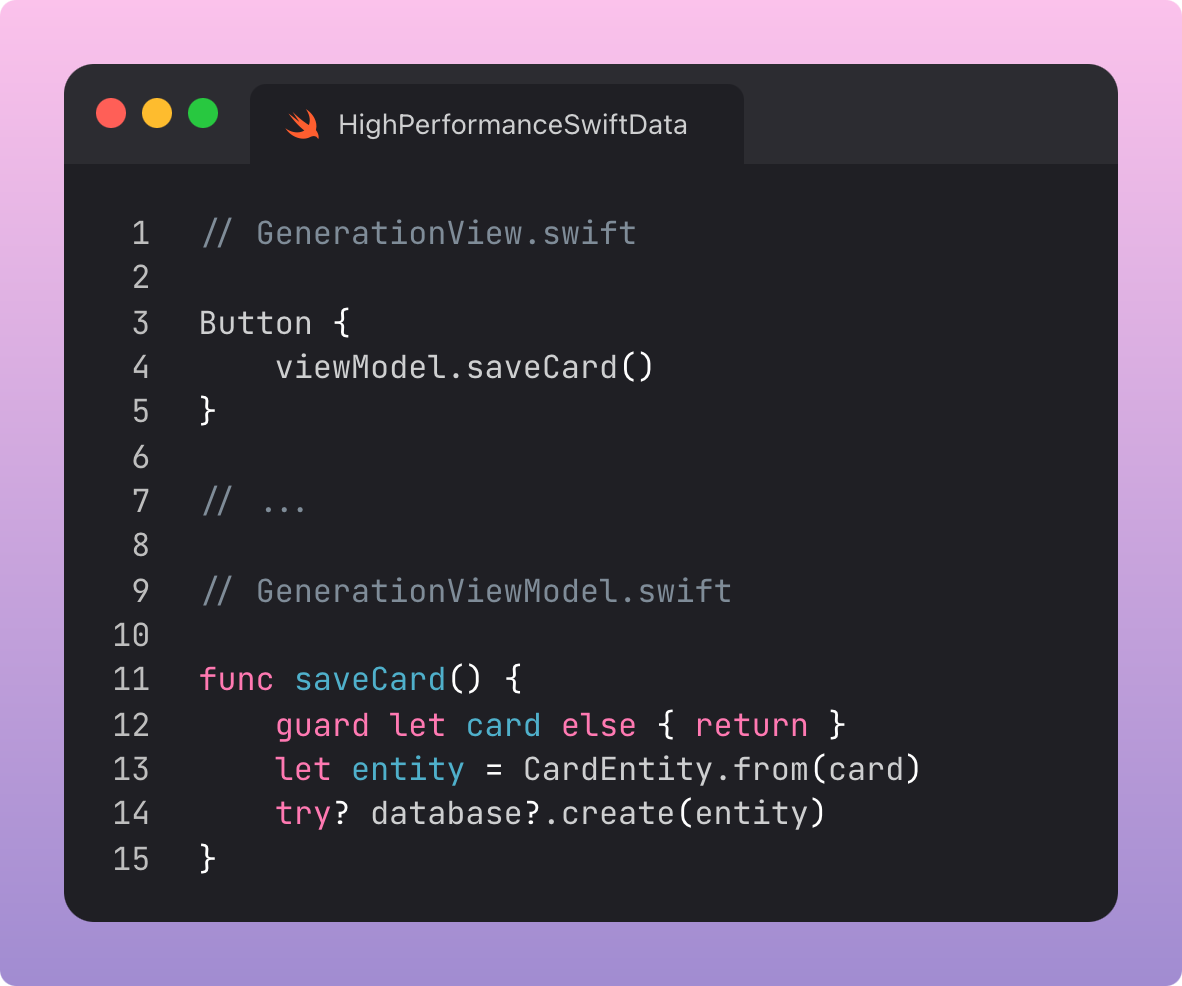

Migrating our Writes to the view model

Before we cannonball on, however, we also need to update the logic for writing a new card.

Before, the save logic lived in the SwiftUI view:

After, the save logic lives in the view model:

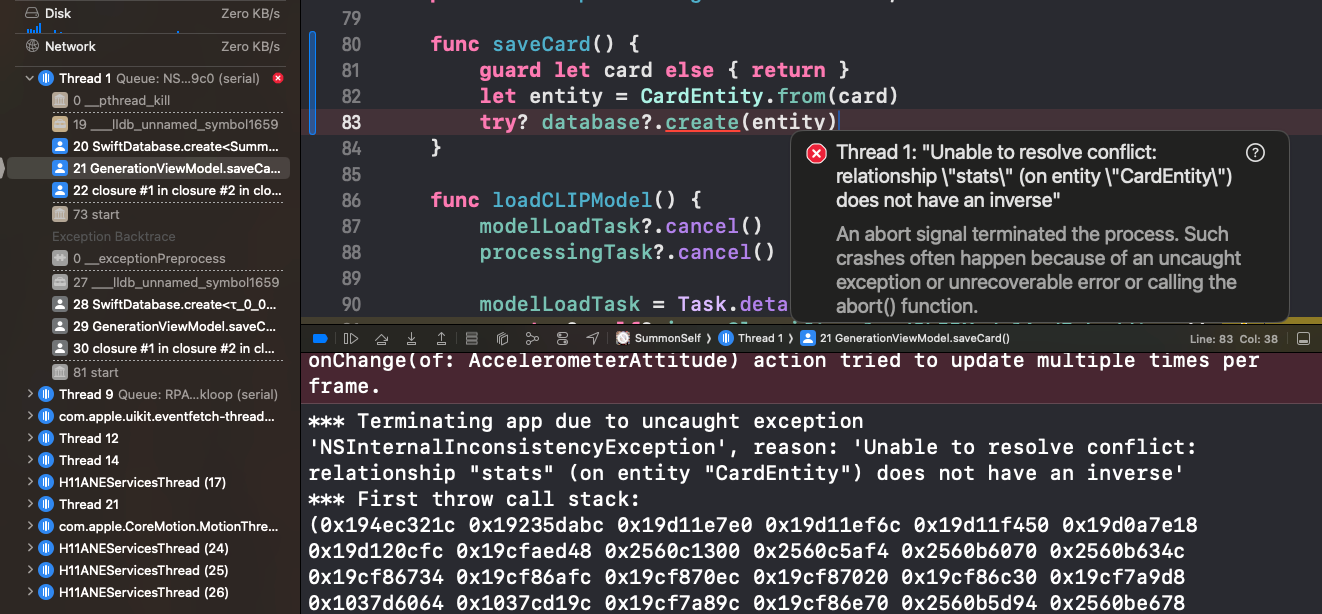

Hm, it doesn’t like this.

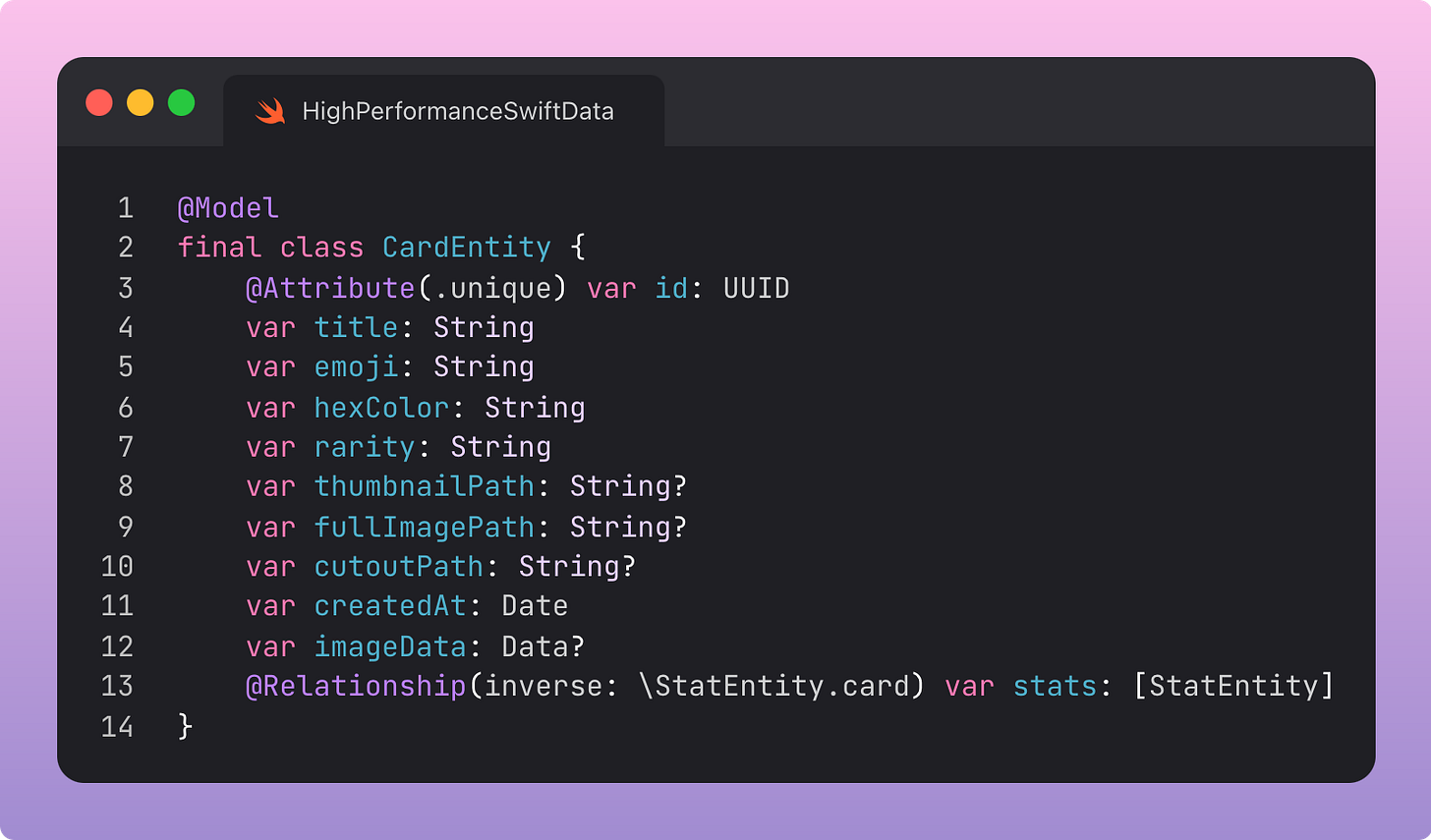

It crashes on writes, not reads, because SQLite fails to resolve the relationship between the CardEntity I’m saving and its Stats, which is a separate @Model. We can solve this by explicitly defining their relationship.
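Declaring the relationship explicitly looks something like the sketch below. The property names and the cascade delete rule are assumptions for illustration; the relevant part is the `@Relationship` annotation with an `inverse:` key path, which tells SwiftData how the two models point at each other:

```swift
import Foundation
import SwiftData

@Model
final class CardEntity {
    var name: String

    // Explicit relationship with its inverse. Cascade: deleting a card
    // also deletes its stats.
    @Relationship(deleteRule: .cascade, inverse: \Stats.card)
    var stats: Stats?

    init(name: String) {
        self.name = name
    }
}

@Model
final class Stats {
    var strength: Int
    var speed: Int

    // The other side of the relationship, kept in sync by SwiftData.
    var card: CardEntity?

    init(strength: Int, speed: Int) {
        self.strength = strength
        self.speed = speed
    }
}
```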

I tested this on the simulator before risking my beautiful Comic-Con card collection on a dodgy data migration. (I know I have a backup, but who wants the hassle?)

But this worked!

I didn’t even need any manual migration step, because the underlying data didn’t change; only the way SQLite interprets the relationships between the stored data. This lightweight migration is done automatically without any extra work on our part.
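With the relationship declared, the save path in the view model can stay small. A minimal sketch, reduced to a single hypothetical model for brevity, in which the view model owns its own `ModelContext` rather than borrowing the one from the SwiftUI environment:

```swift
import Foundation
import Observation
import SwiftData

// Hypothetical single model, kept minimal for brevity.
@Model
final class CardEntity {
    var name: String
    init(name: String) { self.name = name }
}

@MainActor
@Observable
final class CardEditorViewModel {
    private let context: ModelContext
    private(set) var saveError: (any Error)?

    init(container: ModelContainer) {
        // A dedicated context, so saving no longer depends on the
        // SwiftUI environment's modelContext.
        context = ModelContext(container)
    }

    func saveCard(named name: String) {
        context.insert(CardEntity(name: name))
        do {
            try context.save()
            saveError = nil
        } catch {
            saveError = error
        }
    }
}
```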

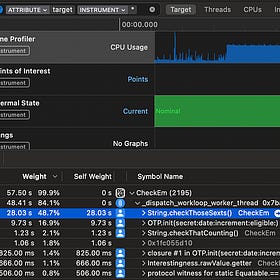

Now, let’s profile the app again.

Already, a dramatic improvement: it loads in much faster, without any hangs!

I didn’t even have to manually move anything off the main thread: it appears that by placing it in the view model, SwiftData can intelligently perform the expensive fetching and decoding off-main, and then present the results in my LazyVStack.

The time profile shows how much cleaner this small change makes our app. It still has its problems, including several hangs and CPU spikes, but our app is at least, almost, functional.

Clearly, however, there is a long way to go to optimise our memory usage.

The memory graph and time profile conveniently signpost the next bottleneck: png_read_IDAT_data.

Okay, now for the good part.

Efficient Image Data Modelling

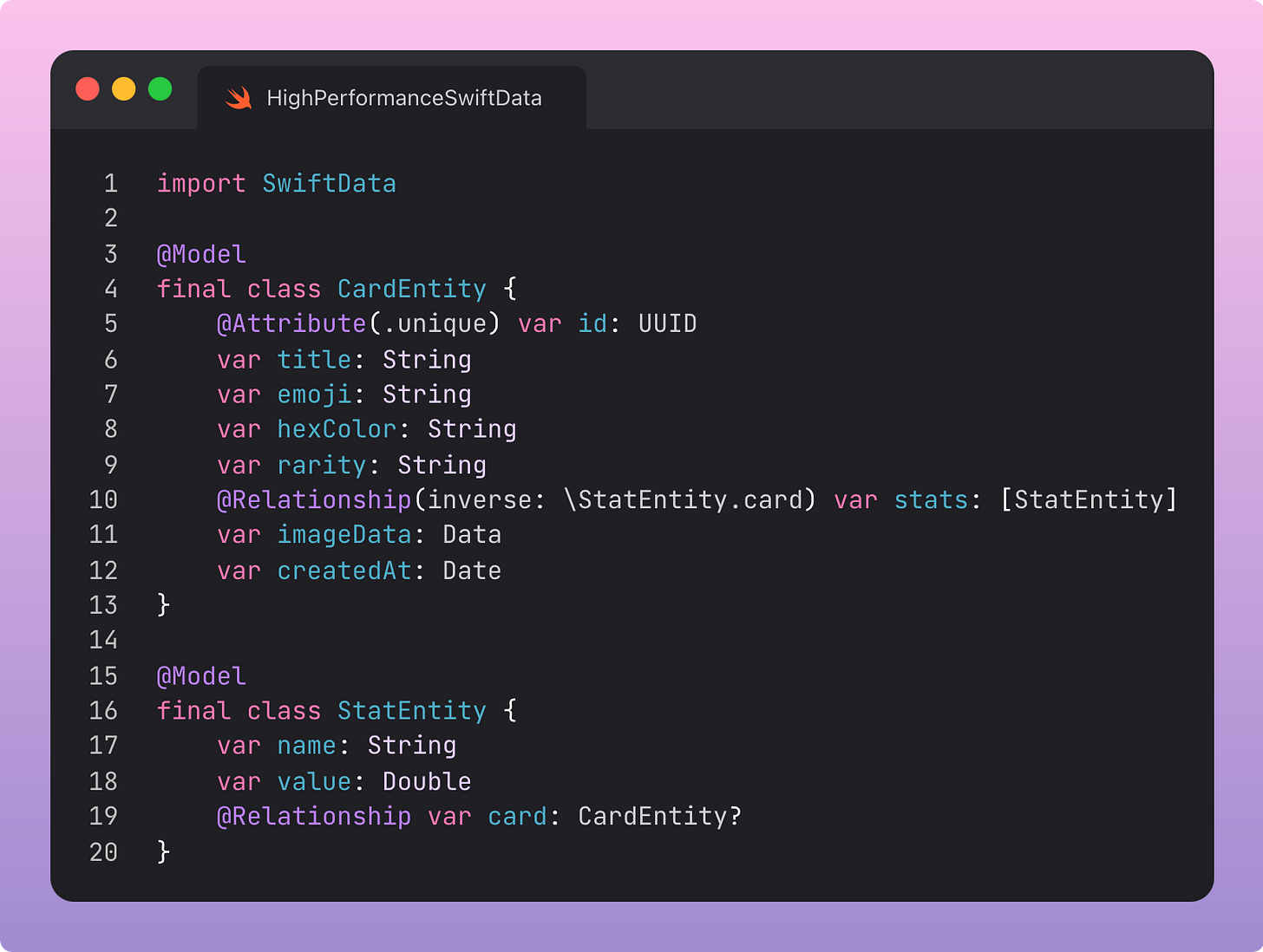

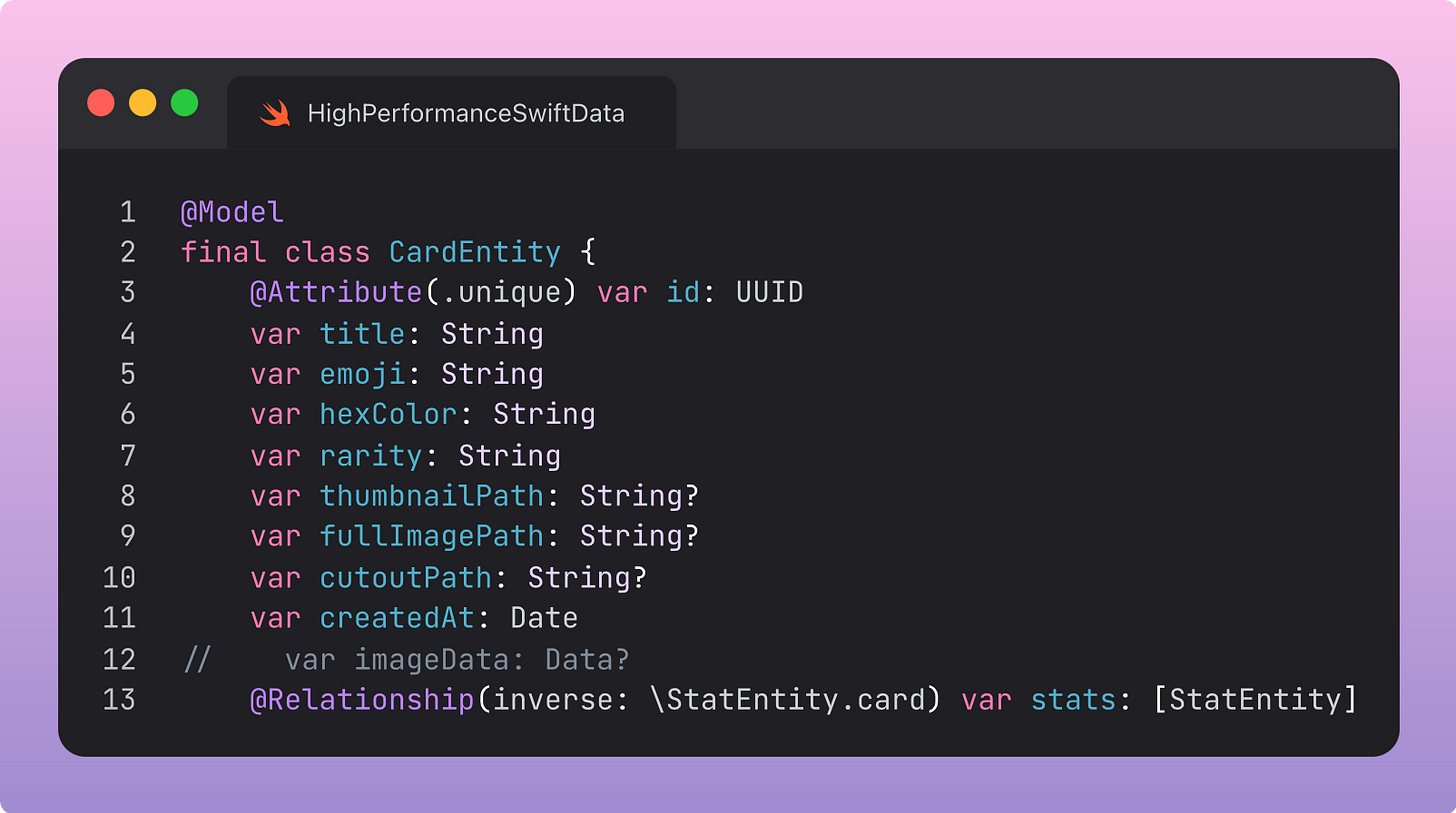

There’s a glaring, giant fault with our data model.

Image compression

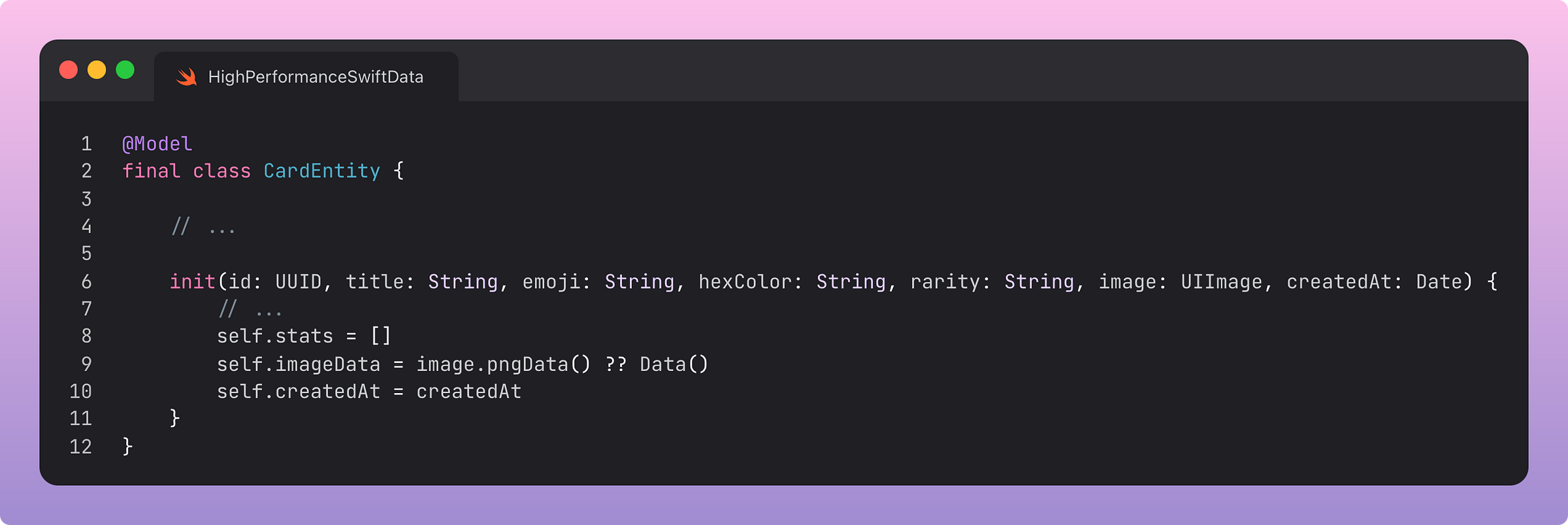

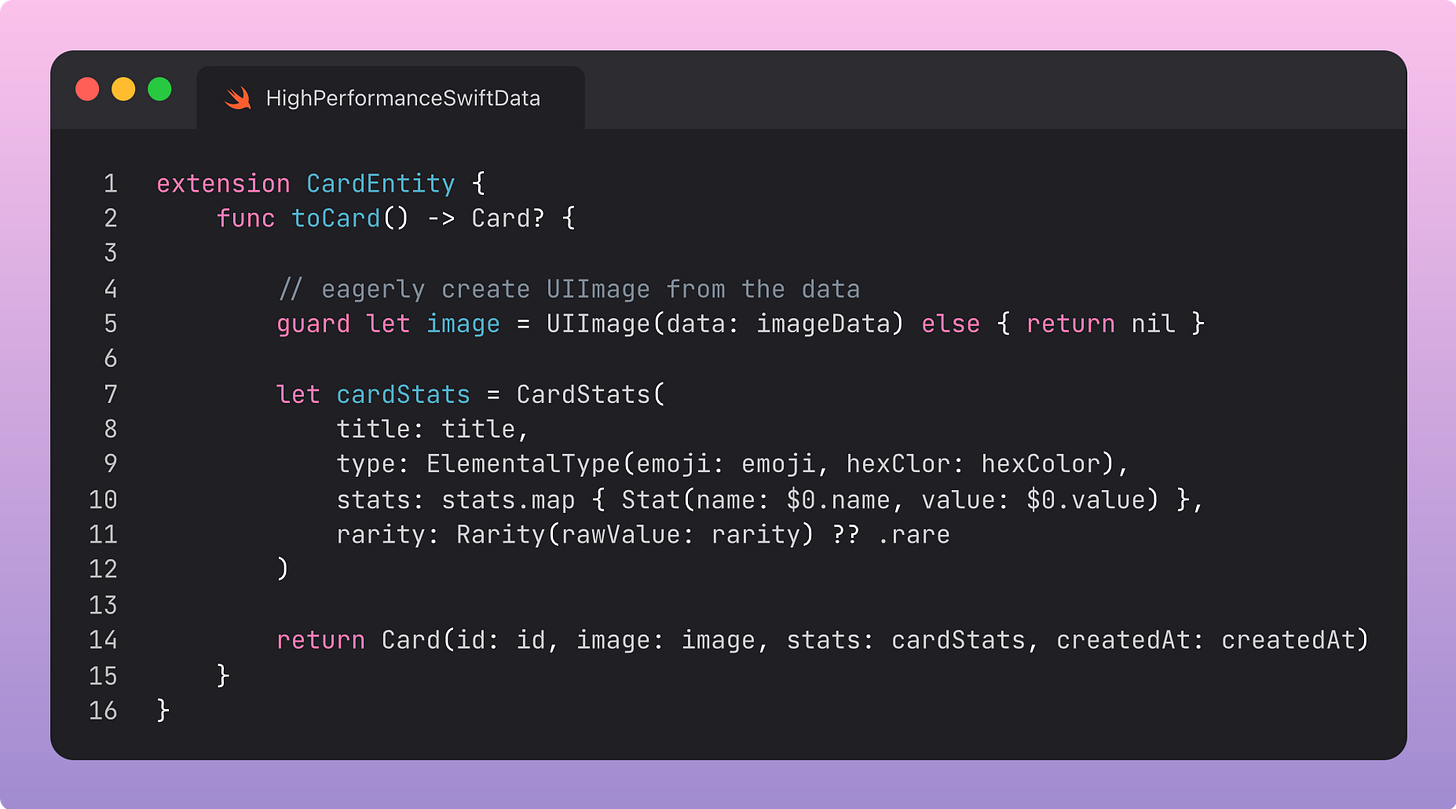

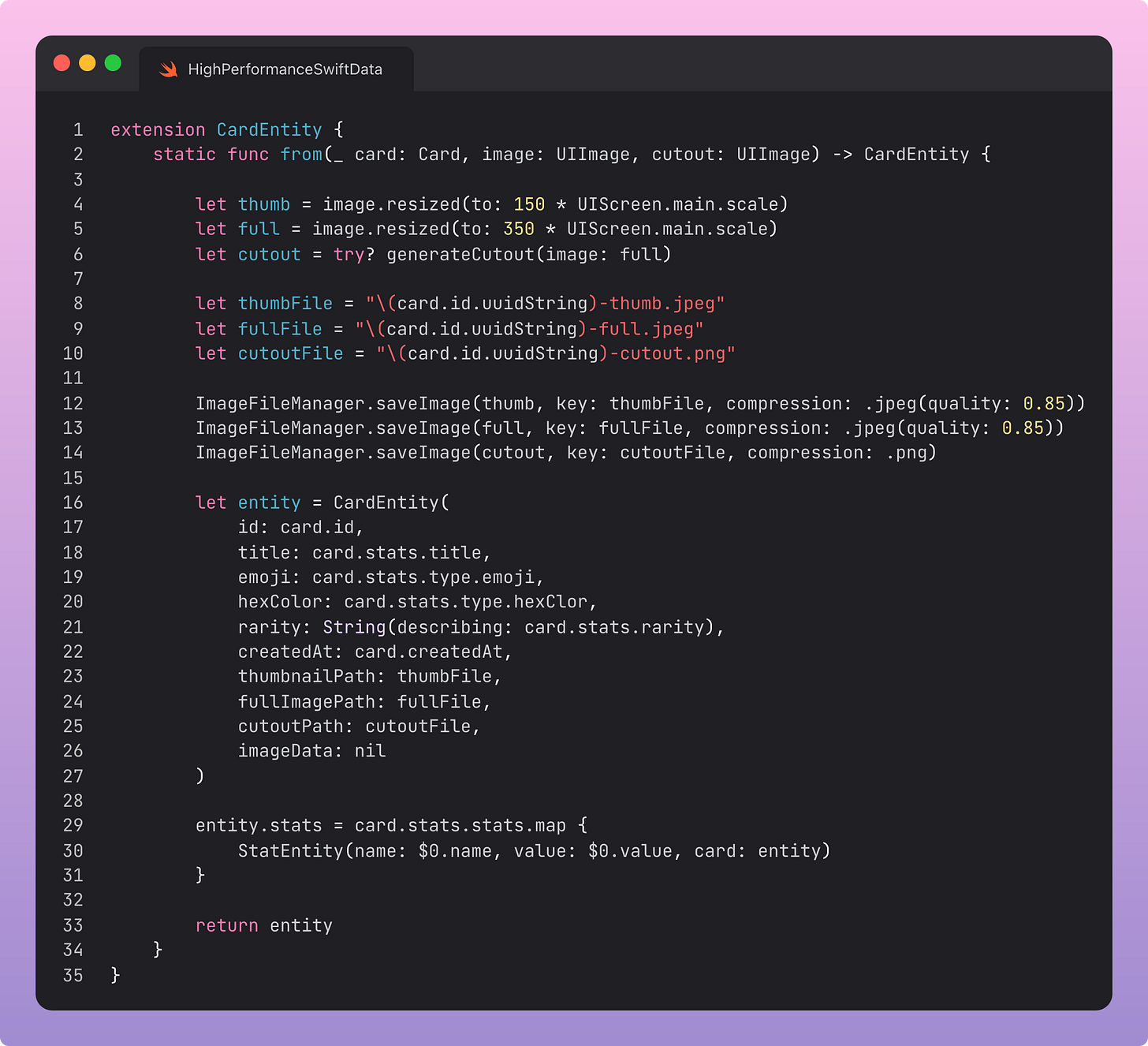

Let’s look at how we initialise our card data:

We’re saving the image PNG data to the SwiftData model directly.

PNG data felt like an appropriate place to start as I was hacking together my MVP. It’s lossless, so I had the option to move away from it in the future without losing any resolution.

But what actually is a PNG?

PNG is a lossless form of image compression. Therefore, you can encode to and from PNG ad infinitum and the image will never lose fidelity.

For my iPhone 15 Pro, that means each photo from the 48-megapixel camera is stored losslessly.

That’s 48 million pixels, which could take up 4 bytes each uncompressed (one byte each for red, green, blue and alpha), so about 192MB (roughly 183MiB) per image. Compressed to PNG, this might end up at about 50MB.
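The estimate is just width × height × bytes per pixel. A tiny helper makes these numbers easy to sanity-check; the function name is made up, and 8000 × 6000 is a round 48-megapixel example rather than the exact sensor size:

```swift
/// Bytes needed to hold a fully decoded bitmap in memory.
/// The default of 4 bytes per pixel assumes 8-bit RGBA.
func decodedBitmapBytes(width: Int, height: Int, bytesPerPixel: Int = 4) -> Int {
    width * height * bytesPerPixel
}

let bytesPerMiB = 1_048_576.0

// A round 48-megapixel example: 8000 × 6000 pixels.
let fortyEightMP = decodedBitmapBytes(width: 8_000, height: 6_000)
print(fortyEightMP)                        // 192000000
print(Double(fortyEightMP) / bytesPerMiB)  // 183.10546875

// For comparison, a 12-megapixel 4032 × 3024 frame.
let twelveMP = decodedBitmapBytes(width: 4_032, height: 3_024)
print(twelveMP)                            // 48771072
```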

JPEG is a bit lossy, but can be 10x smaller, so it’s a great option if we don’t need to maintain the full integrity of the photo. Here, we really don’t. It’s just displayed as a thumbnail, and sometimes as a fullscreen image in the full card view.

Image Decoding

Using image.pngData() isn’t very efficient, but that’s not why memory spiked to multiple gigabytes.

The problem is how we converted the data into a usable UIImage.

We eagerly performed this conversion right after reading from the database. For every single card.

The other half of this memory spike happens when we convert our data model into a visual model.

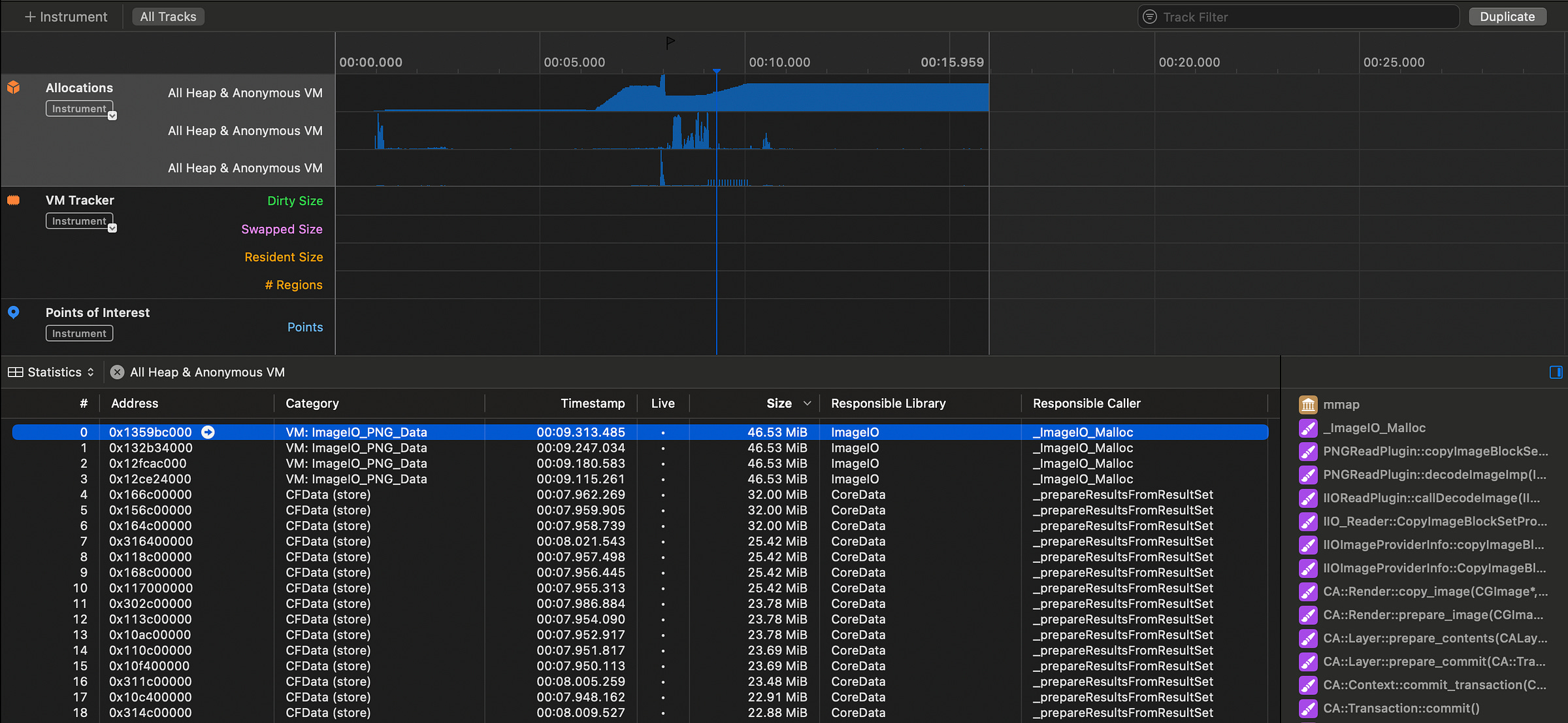

We can observe ImageIO_PNG_Data in the “Allocations” Xcode Instrument, where each inflated bitmap in the UIImages stores 46.53 MiB (~48MB). For the 42 or so images I am displaying, this rounds up nicely to the ~2GB memory spike we observed as these images are eagerly allocated.

So we have the problem.

The PNG data was decoded and inflated into a full uncompressed bitmap in the UIImage.

For every image!

That’s why the app got slower and slower every time I added an image, until it crashed. The memory footprint grew linearly with each full-resolution UIImage that I displayed as a 150pt-wide thumbnail.

Solving the Memory Spike

There are 2 solutions to this spike.

First, let’s start small. Really. We can simply use something smaller for our image data.

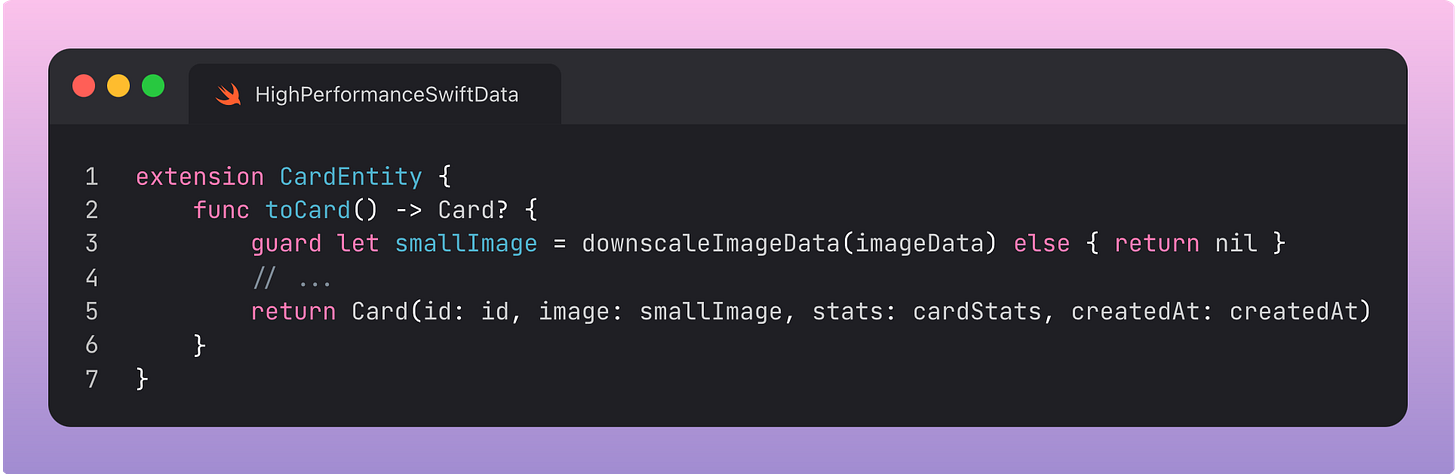

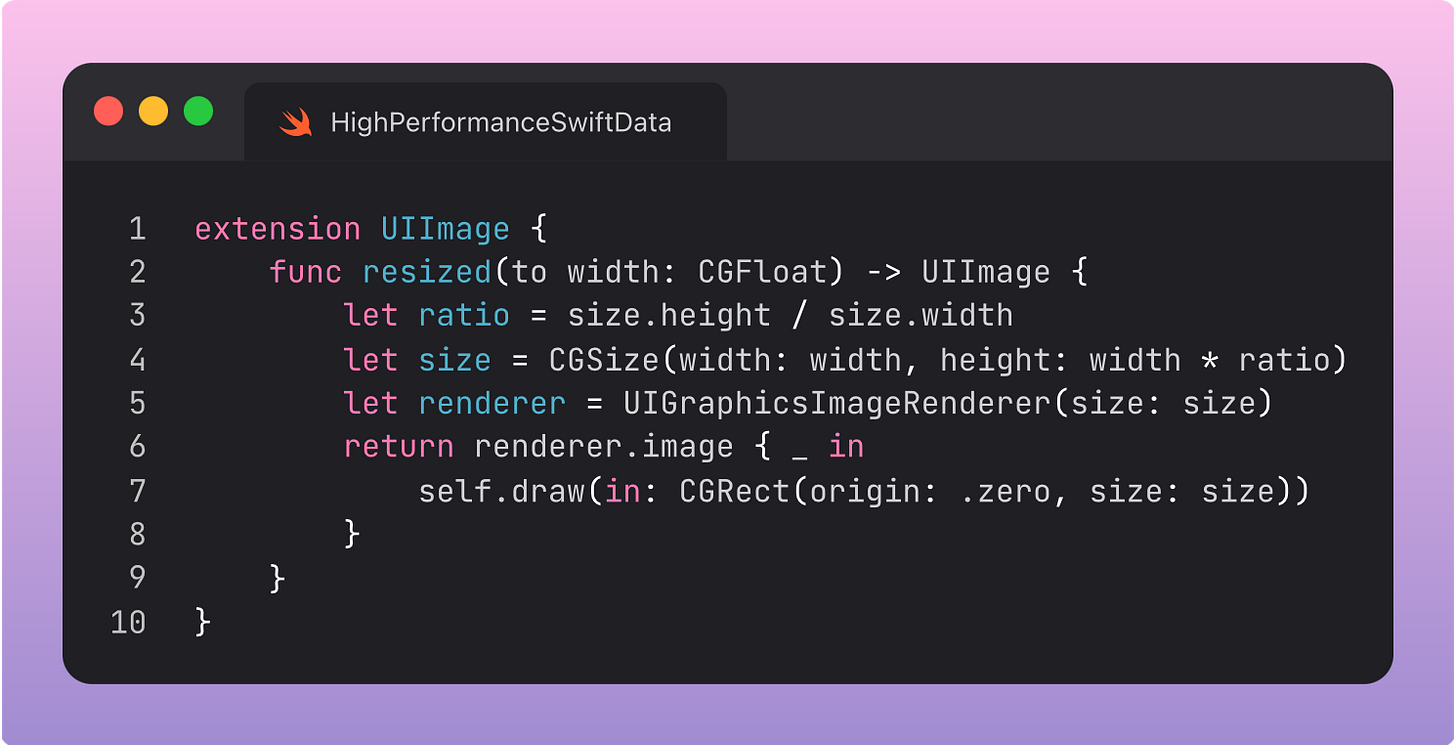

I don’t want to perform a full migration now, so I’ll test it out by downscaling the image data before we create a UIImage.

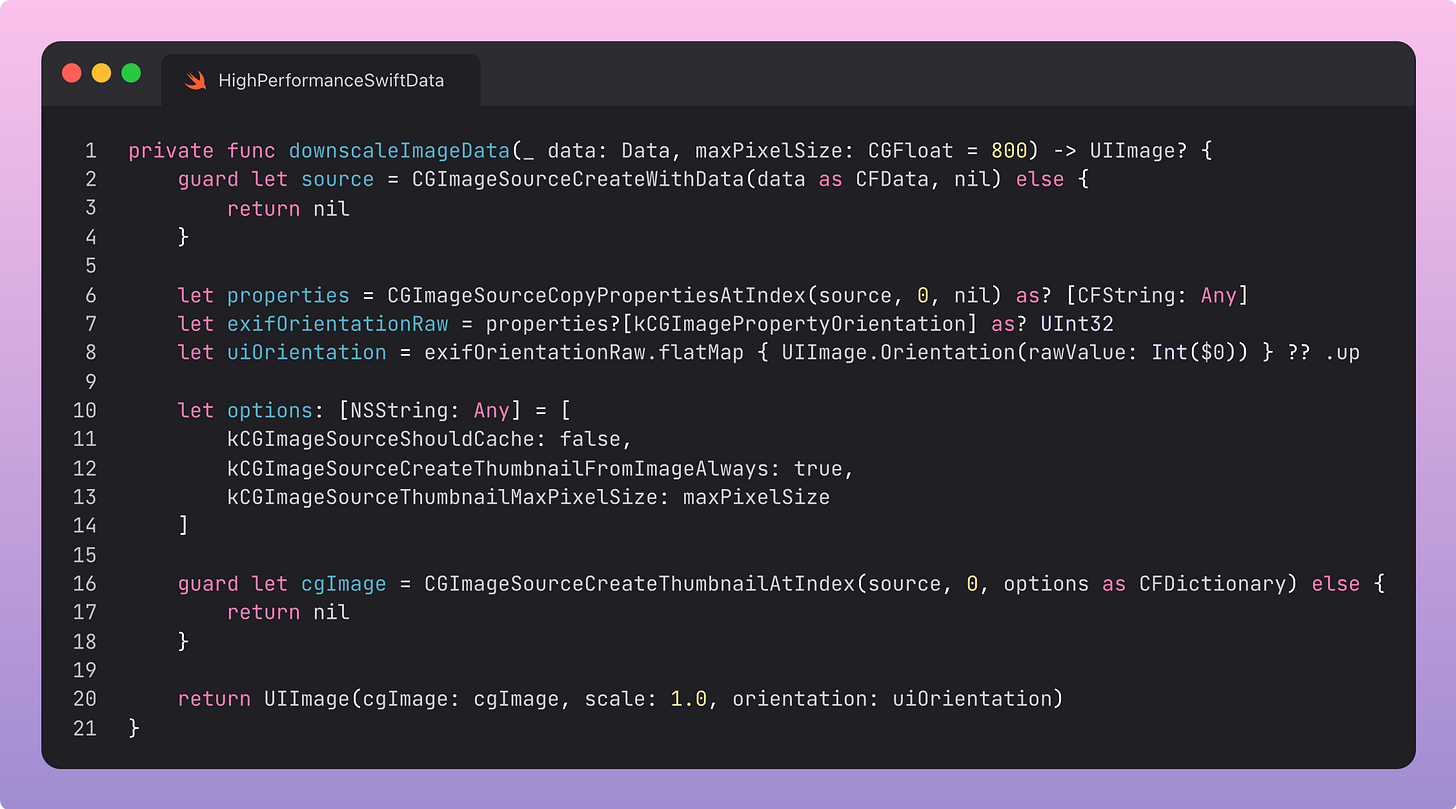

Then we downscale like so using Core Graphics:
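A minimal Core Graphics sketch of this kind of downscale, under stated assumptions: it takes an already-decoded `CGImage`, keeps the aspect ratio, and redraws it into a smaller 8-bit RGBA bitmap context. The function name and signature are hypothetical:

```swift
import CoreGraphics

/// Hypothetical helper: redraws `image` into a bitmap at most `maxPixelWidth`
/// pixels wide, preserving aspect ratio. Returns nil if the context can't be made.
func downscale(_ image: CGImage, toMaxPixelWidth maxPixelWidth: Int) -> CGImage? {
    // Never upscale.
    guard image.width > maxPixelWidth else { return image }

    let scale = Double(maxPixelWidth) / Double(image.width)
    let width = maxPixelWidth
    let height = max(1, Int((Double(image.height) * scale).rounded()))

    guard let context = CGContext(
        data: nil,           // let Core Graphics allocate the buffer
        width: width,
        height: height,
        bitsPerComponent: 8,
        bytesPerRow: 0,      // 0 lets Core Graphics choose the row stride
        space: CGColorSpaceCreateDeviceRGB(),
        bitmapInfo: CGImageAlphaInfo.premultipliedLast.rawValue
    ) else { return nil }

    context.interpolationQuality = .high
    context.draw(image, in: CGRect(x: 0, y: 0, width: width, height: height))
    return context.makeImage()
}
```

On iOS the input could come from `UIImage(data:)?.cgImage`, with the result wrapped in `UIImage(cgImage:)`. Note that the source is still decoded at full size before it is drawn.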

This loads in our original view with a much gentler memory footprint, and a far better frame-rate.

This downscaling still causes a big initial memory spike, but I know this is because I am still decoding the massive PNGs stored in my SwiftData model. If we downscaled the data on write, we’d be fine.

But, there’s an even more performant approach for how we should handle these images. The “proper way” to persist images on iOS, following standard system design principles.

Storing our images the “proper way”

The data model contains the relevant metadata for each card. This is optimised in SQLite for rapid retrieval.

But regardless of how we compress the images, we are stuck with a big blob of binary data. A relational SQLite database (that SwiftData uses under the hood) is a terrible fit for storing binary data like this. We should be using the filesystem.

Now I have my plan.

First, I want to save 3 file-path references. These can live on my SwiftData model.

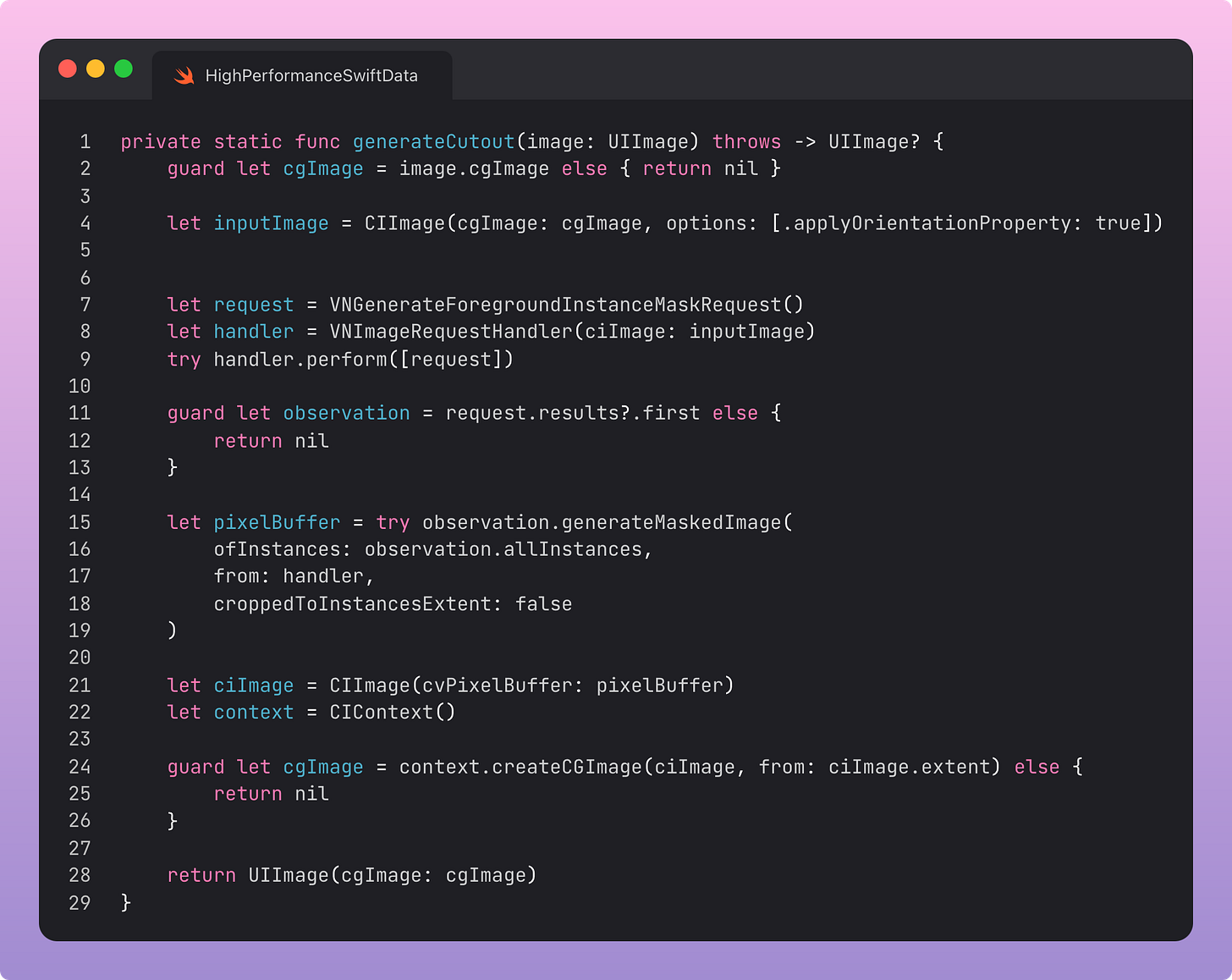

I want to process each image into a downscaled JPEG thumbnail (~150pt wide at a 3x scale) and a full-size image (350pt wide at 3x) on save, plus a separate cutout image to overlay on my card effect using the Vision Framework’s VNGenerateForegroundInstanceMaskRequest.
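For context, the cutout step can be sketched with `VNGenerateForegroundInstanceMaskRequest` (iOS 17+). This version keeps every detected foreground instance and returns nil when there is no foreground; the function name and the Core Image conversion are assumptions, not the app’s code:

```swift
import CoreImage
import UIKit
import Vision

/// Hypothetical helper: returns the foreground subject(s) of `image` on a
/// transparent background, or nil if there is nothing to cut out.
@available(iOS 17.0, *)
func makeCutout(from image: UIImage) throws -> UIImage? {
    guard let cgImage = image.cgImage else { return nil }

    let request = VNGenerateForegroundInstanceMaskRequest()
    let handler = VNImageRequestHandler(cgImage: cgImage)
    try handler.perform([request])

    guard let observation = request.results?.first else { return nil }

    // Copy of the original pixels with everything outside the selected
    // instances made transparent, at the full image size.
    let buffer = try observation.generateMaskedImage(
        ofInstances: observation.allInstances,
        from: handler,
        croppedToInstancesExtent: false
    )

    // A CIContext is costly to create; real code would reuse one.
    let ciImage = CIImage(cvPixelBuffer: buffer)
    guard let output = CIContext().createCGImage(ciImage, from: ciImage.extent) else {
        return nil
    }
    return UIImage(cgImage: output, scale: image.scale, orientation: image.imageOrientation)
}
```

Because the result has transparency, it would be saved with `pngData()` rather than as a JPEG.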

To keep hold of my existing cards, I can migrate their data when initialising my database.

For now we’ll just semi-deprecate the old, inefficient imageData property, convert it to an optional, then use it to migrate my own data across before removing it entirely.
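A minimal sketch of the model mid-migration, with hypothetical property names. The three image references are plain strings and the legacy blob is now optional, so it can be cleared per card and deleted later. The sketch stores file names relative to a known directory rather than absolute paths, a deliberate assumption: the absolute path of an app’s container can change between installs and updates.

```swift
import Foundation
import SwiftData

@Model
final class CardEntity {
    var name: String

    // File names relative to the app's image directory, not absolute paths.
    var thumbnailFileName: String?
    var fullImageFileName: String?
    var cutoutFileName: String?

    // Legacy PNG blob: now optional, cleared once migrated, removed later.
    var imageData: Data?

    init(name: String) {
        self.name = name
    }

    /// Computed, so not persisted: true while the image still lives in the blob.
    var needsImageMigration: Bool {
        imageData != nil && thumbnailFileName == nil
    }
}
```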

The resized(to:) here is just a helper extension on UIImage:
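A common way to write such a helper uses `UIGraphicsImageRenderer`. A sketch with an assumed signature, where the renderer scale is pinned to 1 so sizes are in pixels (for example 450px for a 150pt thumbnail at 3x):

```swift
import UIKit

extension UIImage {
    /// Hypothetical helper: redraws the image at `targetSize`, in pixels,
    /// because the renderer's scale is fixed at 1.
    func resized(to targetSize: CGSize) -> UIImage {
        let format = UIGraphicsImageRendererFormat.default()
        format.scale = 1
        let renderer = UIGraphicsImageRenderer(size: targetSize, format: format)
        return renderer.image { _ in
            draw(in: CGRect(origin: .zero, size: targetSize))
        }
    }

    /// Convenience: scale to a pixel width, keeping the aspect ratio.
    func resized(toPixelWidth width: CGFloat) -> UIImage {
        let aspect = size.height / size.width
        return resized(to: CGSize(width: width, height: (width * aspect).rounded()))
    }
}
```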

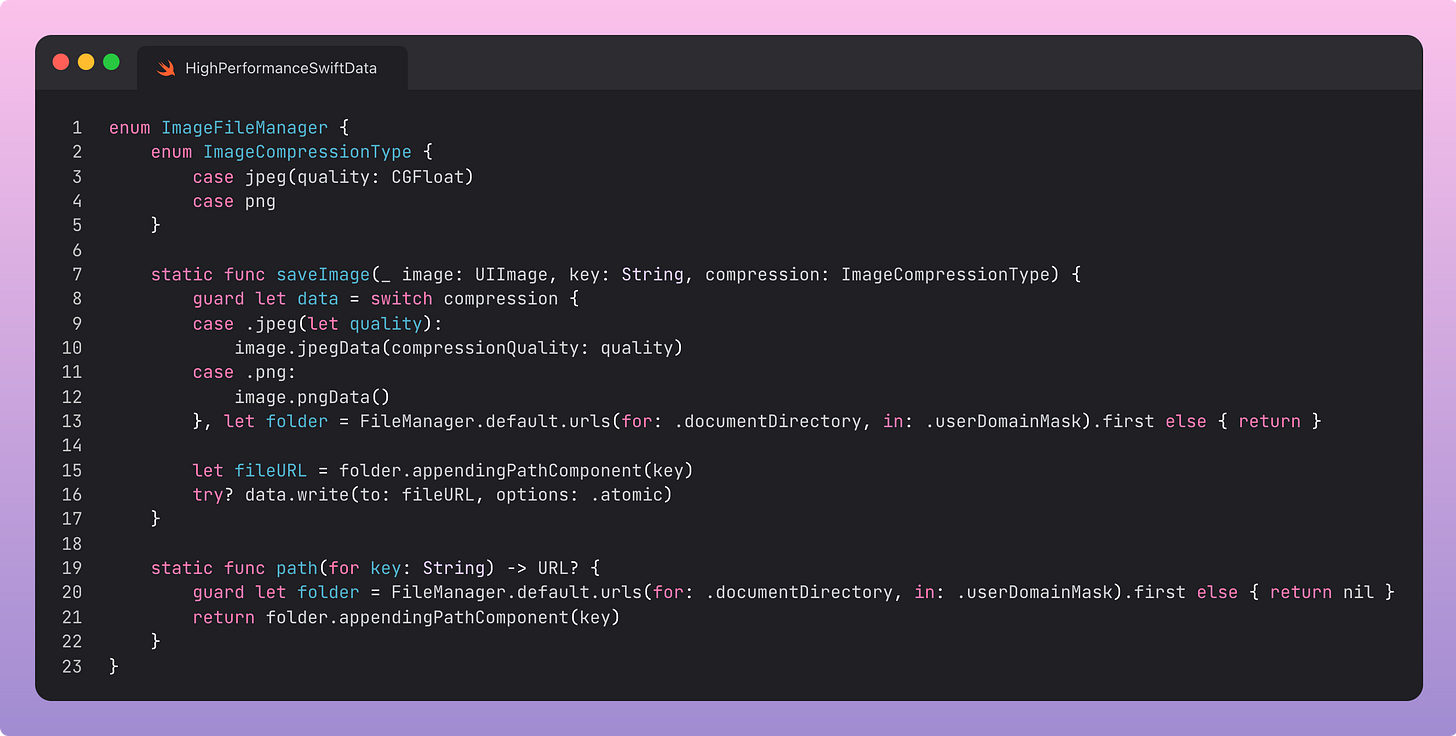

And ImageFileManager is a little utility that looks after my file-management boilerplate.

I’m giving myself the option of using PNG compression here, because PNG has an alpha channel (unlike JPEG). This is important because the cutout images have a lot of transparency!
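A minimal stand-in for a utility like that, named `ImageFileStore` here to make clear it is hypothetical. It only deals in bytes and file names; the caller encodes, using `pngData()` for images that need an alpha channel (the cutouts) and `jpegData(compressionQuality:)` for everything else:

```swift
import Foundation

/// Hypothetical file-storage helper. Files live in one directory and are
/// referenced by name, so the SwiftData model only stores short strings.
struct ImageFileStore {
    enum Format {
        case jpeg  // small and lossy, no alpha: thumbnails and full images
        case png   // lossless with alpha: transparent cutouts

        var fileExtension: String {
            switch self {
            case .jpeg: return "jpg"
            case .png: return "png"
            }
        }
    }

    let directory: URL

    init(directory: URL) throws {
        self.directory = directory
        try FileManager.default.createDirectory(at: directory, withIntermediateDirectories: true)
    }

    /// Writes already-encoded image bytes and returns the generated file name.
    func save(_ data: Data, format: Format) throws -> String {
        let fileName = UUID().uuidString + "." + format.fileExtension
        try data.write(to: url(for: fileName), options: .atomic)
        return fileName
    }

    func url(for fileName: String) -> URL {
        directory.appendingPathComponent(fileName)
    }

    func load(_ fileName: String) throws -> Data {
        try Data(contentsOf: url(for: fileName))
    }

    func delete(_ fileName: String) throws {
        try FileManager.default.removeItem(at: url(for: fileName))
    }
}
```

In the app, `directory` might be a subfolder of `URL.applicationSupportDirectory`, and only the returned file name would be stored on the SwiftData model.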

Let’s run the data migration now!

Oh dear, it crashed again. Out of memory. Again.

Hold on, I’m forgetting something:

Wrapping each loop pass in an autoreleasepool releases the heavy inflated UIImage bitmaps as we go, and the migration runs smoothly:
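The pattern is to wrap each iteration’s body in `autoreleasepool`. A sketch under stated assumptions: the legacy blobs arrive as `Data`, each is downscaled to a JPEG, and a hypothetical `store` closure writes the bytes and returns a file name. The 0.8 JPEG quality is arbitrary:

```swift
import UIKit

/// Hypothetical migration loop: decodes each legacy image, re-encodes it as a
/// downscaled JPEG, and hands the bytes to `store`. Returns nil for bad blobs.
func migrateLegacyImages(
    _ blobs: [Data],
    maxPixelWidth: CGFloat,
    store: (Data) throws -> String
) throws -> [String?] {
    var fileNames: [String?] = []
    for blob in blobs {
        // Objects that UIKit and ImageIO autorelease during this pass are freed
        // when the closure returns. Without the pool, a long loop on the main
        // thread keeps them alive until the run loop drains its outer pool.
        let fileName: String? = try autoreleasepool {
            guard let image = UIImage(data: blob) else { return nil }
            let width = min(maxPixelWidth, image.size.width * image.scale)
            let height = (width * image.size.height / image.size.width).rounded()

            let format = UIGraphicsImageRendererFormat.default()
            format.scale = 1  // sizes below are in pixels
            let resized = UIGraphicsImageRenderer(
                size: CGSize(width: width, height: height),
                format: format
            ).image { _ in
                image.draw(in: CGRect(x: 0, y: 0, width: width, height: height))
            }

            guard let jpeg = resized.jpegData(compressionQuality: 0.8) else { return nil }
            return try store(jpeg)
        }
        fileNames.append(fileName)
    }
    return fileNames
}
```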

After that rollercoaster ride, we are fully migrated!

If you want a primer on autoreleasepool, and other obscure but powerful Swift invocations, take a look at this forbidden banger of an article:

The Swift Apple doesn't want you to know


The heavy image data now lives at individual downscaled file paths for each context.

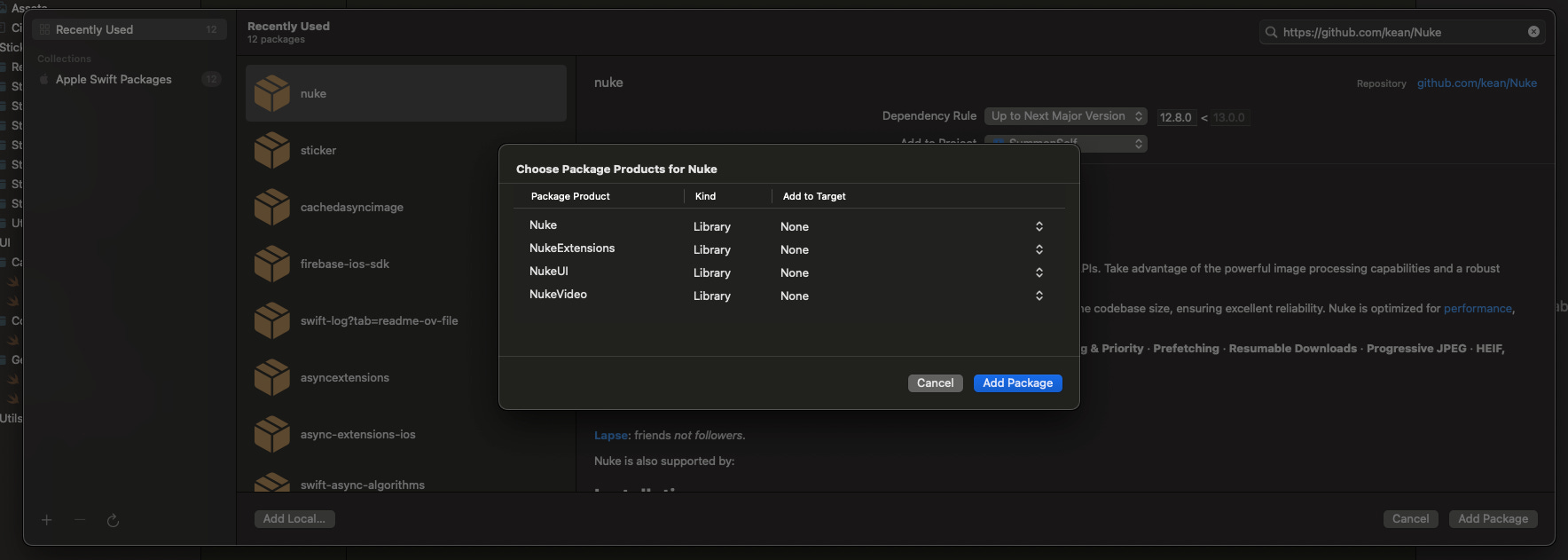

To fetch and display them, I’ll invoke Nuke, our friendly neighbourhood image caching library.

Let’s get it working using their LazyImage view.
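A minimal thumbnail view using NukeUI’s `LazyImage`, assuming Nuke 12 or later and a file URL built from the stored file name. The view name and the frame height are hypothetical:

```swift
import NukeUI
import SwiftUI

struct CardThumbnail: View {
    let fileURL: URL?

    var body: some View {
        LazyImage(url: fileURL) { state in
            if let image = state.image {
                image
                    .resizable()
                    .aspectRatio(contentMode: .fill)
            } else if state.error != nil {
                Color.gray      // failed to load
            } else {
                ProgressView()  // still loading
            }
        }
        .frame(width: 150, height: 210)  // 150pt wide, height arbitrary
        .clipped()
    }
}
```

Nuke’s pipeline decodes images off the main thread and keeps an in-memory cache, so scrolling back to a card shouldn’t repeat the work.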

Now we can just copy over the migration code, so we can convert newly generated cards into a lightweight data object that only stores file paths to the image data.

Now that we have the cutouts stored, we also avoid performing the relatively heavy Vision framework VNGenerateForegroundInstanceMaskRequest computation every time we open a card.

It now works great!

I finally feel confident enough to kill the original image data.

Now let’s look at one final profile.

Still pretty heavy because a lot of image processing is still happening, but nothing a modern iPhone can’t handle, even on low-power mode.

The time profile is utterly, butterly, smooth.

Last Orders

I’ve succeeded on both counts.

I’ve identified and fixed the SwiftData performance bottlenecks.

I haven’t lost a single card I collected at Comic-Con.

The app is now production ready. My collection is safe.

I learned a lot about optimising SwiftData here, but this approach can generalise to any kind of data you store in your app. Storing metadata via a persistence framework, and keeping filesystem references to any large binary data, is a timeless system design technique.

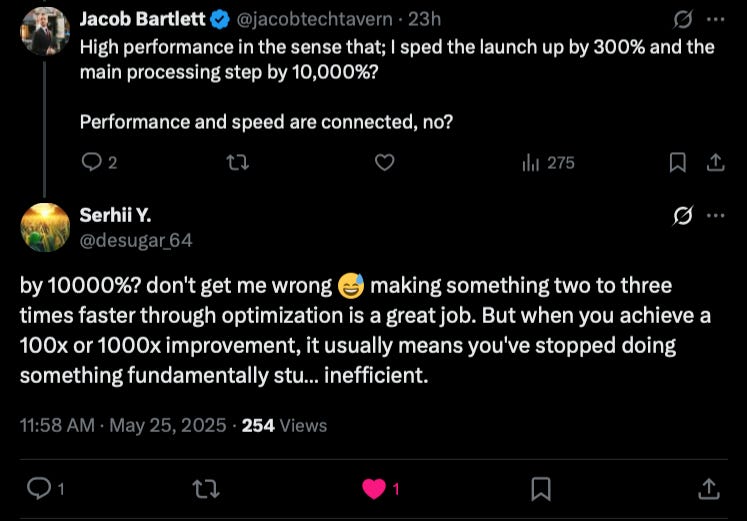

This Tweet probably sums up my experience best.

He’s absolutely right. I was probably doing something fundamentally stupid in my original MVP. I put 5MB raw PNGs into a SwiftData model with no compression. I attempted to fetch all of these at once on the main thread into even heavier UIImages, at full size.

But on the other hand, I was hacking together a prototype using the default tools Apple provides you. There’s no compiler warning saying, “hey buddy, you might want to put that giant image in the filesystem”.

Even worse, it’s very unclear how to place your SwiftData fetching off the main thread. By default, you are encouraged to do the janky approach using thread-blocking SwiftUI @Query macros. Just the act of using a view model fixed the worst of the hangs.

I hope you learned a little, and had fun today. If you’re curious about the app, download Summon Self on the App Store today!

Here’s the original write-up:

I trapped your soul in a trading card (with client-side AI)

This is my most elaborate article yet, covering my full journey building my new indie project, Summon Self, from conception to initial release.

And if you’re on a performance kick, my previous stint on app performance optimisation is a doozy:

High Performance Swift Apps


Thanks for reading Jacob’s Tech Tavern! 🍺