The Swift Runtime: Your Silent Partner

Fun and games with the Swift source code

The Swift Runtime, a.k.a libswiftCore, is a C++ library that runs alongside Swift programs to facilitate core language features. I set out to understand what we mean by “runs alongside” and “facilitates core language features”.



The Swift Runtime, a.k.a libswiftCore, is a C++ library that runs alongside all Swift programs. The runtime library is dynamically linked to your app binary at launch and facilitates core language features.

That is the standard elevator pitch, but there’s just one problem: What the h*ck do I mean by “runs alongside” and “facilitates core language features”!?

Let’s find out with a quest into the Swift source code.

Here there be dragons (well, wyverns). Many a brave explorer has been lost, or lost their mind, trying to comprehend the labyrinthine code paths of its forbidden texts (more commonly referred to as C++).

To keep us tethered to reality, we will start with some familiar Swift code you’ll know and love. I’ll demonstrate a core Swift language feature in the most basic way possible.

We’ll convert this into Swift Intermediate Language (SIL) and follow how these instructions compile all the way down to calls into the Swift Runtime ABI. This will show exactly how the runtime library runs alongside your own code.

Finally, we will look inside libswiftCore — the runtime library code itself — to understand how core language features are implemented.

Memory Management

The Swift runtime is in charge of allocating blocks of memory, cleaning them up, and everything in between.

It allocates memory on the heap, tracks the reference counts of your objects, creates side tables to store weak references, and kills your app if you mess up your unowned references.

There are a few really important files relating to memory handling in the Swift runtime code.

This isn’t exhaustive — SwiftObject.mm, RefCount.h, and WeakReference.h are also vital pieces of the runtime memory puzzle. But we can get an understanding of how the runtime system works just by analysing a single component in detail: memory allocation.

Let’s narrow our focus and start with 2 lines of code.

Your Swift Code

We’ll begin with a dead-simple Swift program.

```swift
class Memory { }

let mem = Memory()
```

We’re defining a class, then instantiating an instance.

If the going gets tough down below, just remember these 2 lines of Swift.

Swift Intermediate Language

The first few steps of the compiler convert our code into Swift Intermediate Language (SIL), a Swift-specific intermediate representation on which the compiler performs analysis and optimisations. We can convert our main.swift file into optimised SIL using this command:

```shell
swiftc -emit-sil -O main.swift > sil.txt
```

This generates a hefty 87 lines of SIL, but we can find what we’re looking for in the compiler-generated main() function:

```
// main
sil @main : $@convention(c) (Int32, UnsafeMutablePointer<Optional<UnsafeMutablePointer<Int8>>>) -> Int32 {
[%1: noescape **]
[global: read,write,copy,allocate,deinit_barrier]
bb0(%0 : $Int32, %1 : $UnsafeMutablePointer<Optional<UnsafeMutablePointer<Int8>>>):
  alloc_global @$s4main3memAA6MemoryCvp           // id: %2
  %3 = global_addr @$s4main3memAA6MemoryCvp : $*Memory // user: %6
  %4 = alloc_ref $Memory                          // users: %6, %5
  debug_value %4 : $Memory, let, name "self", argno 1, implicit // id: %5
  store %4 to %3 : $*Memory                       // id: %6
  %7 = integer_literal $Builtin.Int32, 0          // user: %8
  %8 = struct $Int32 (%7 : $Builtin.Int32)        // user: %9
  return %8 : $Int32                              // id: %9
} // end sil function 'main'
```

Let’s study the following line in more detail:

```
%4 = alloc_ref $Memory
```

alloc_ref is the SIL instruction for allocating the memory required to create an instance of our Memory class.

The Swift Compiler

When lowering instructions from the Swift compiler’s front end into the guts of the LLVM toolchain, IRGen visits each SIL instruction to convert it into LLVM Intermediate Representation (IR).

This “visiting” is simply the visitor design pattern, used to traverse every SIL instruction. Each instruction is individually compiled down into the representation required for the next stage of the compiler. The functions that implement this visitor pattern follow the format:

visit[SIL-instruction-name]Inst

Each kind of SIL instruction gets its own visitor function in this format: alloc_ref, for example, is handled by visitAllocRefInst.

Understanding these patterns and naming conventions is paramount to learning to traverse the Swift source code yourself.
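To make the naming convention concrete, here is a toy sketch of the visitor pattern in C++. The InstKind enum, the Inst struct and the ToyIRGen class are hypothetical simplifications, not the real swift::SILInstruction hierarchy or IRGenSILFunction; only the visit[Name]Inst naming mirrors the compiler, and the emitted strings merely stand in for LLVM IR.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical, heavily simplified stand-ins for SIL instructions.
enum class InstKind { AllocRef, Store, Return };

struct Inst {
  InstKind kind;
  std::string operand;
};

// A toy "IRGen": one visit[Name]Inst method per instruction kind.
class ToyIRGen {
public:
  std::vector<std::string> emitted; // fake lowered output, one line per instruction

  // Dispatch each instruction to the visitor method for its kind.
  void visit(const Inst &i) {
    switch (i.kind) {
    case InstKind::AllocRef: visitAllocRefInst(i); break;
    case InstKind::Store:    visitStoreInst(i);    break;
    case InstKind::Return:   visitReturnInst(i);   break;
    }
  }

private:
  void visitAllocRefInst(const Inst &i) {
    emitted.push_back("call swift_allocObject(" + i.operand + ")");
  }
  void visitStoreInst(const Inst &i) {
    emitted.push_back("store " + i.operand);
  }
  void visitReturnInst(const Inst &i) {
    emitted.push_back("ret " + i.operand);
  }
};
```

Because every instruction kind lands in exactly one visit…Inst method, searching the compiler source for a name like visitAllocRefInst is a reliable way to find where a given SIL instruction is lowered.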

In IRGenSIL.cpp, the IRGenSILFunction::visitAllocRefInst function translates the alloc_ref instruction into LLVM IR.

```cpp
void IRGenSILFunction::visitAllocRefInst(swift::AllocRefInst *i) {
  int StackAllocSize = -1;
  if (i->canAllocOnStack()) {
    estimateStackSize();
    // Is there enough space for stack allocation?
    StackAllocSize = IGM.IRGen.Opts.StackPromotionSizeLimit - EstimatedStackSize;
  }
  SmallVector<std::pair<SILType, llvm::Value *>, 4> TailArrays;
  buildTailArrays(*this, TailArrays, i);

  llvm::Value *alloced = emitClassAllocation(*this, i->getType(), i->isObjC(), i->isBare(),
                                             StackAllocSize, TailArrays);
  if (StackAllocSize >= 0) {
    // Remember that this alloc_ref allocates the object on the stack.
    StackAllocs.insert(i);
    EstimatedStackSize += StackAllocSize;
  }
  Explosion e;
  e.add(alloced);
  setLoweredExplosion(i, e);
}
```

i->getType() fetches the SIL type of the alloc_ref result, which here is the $Memory class type from our SIL.

The emitClassAllocation function leads to GenClass.cpp:

```cpp
llvm::Value *irgen::emitClassAllocation(IRGenFunction &IGF, SILType selfType,
                                        bool objc, bool isBare,
                                        int &StackAllocSize,
                                        TailArraysRef TailArrays) {
  auto &classTI = IGF.getTypeInfo(selfType).as<ClassTypeInfo>();
  auto classType = selfType.getASTType();

  // (a lot of) code omitted for brevity ...

    std::tie(size, alignMask)
      = appendSizeForTailAllocatedArrays(IGF, size, alignMask, TailArrays);
    val = IGF.emitAllocObjectCall(metadata, size, alignMask, "reference.new");
    StackAllocSize = -1;
  }
  return IGF.Builder.CreateBitCast(val, destType);
}
```

IGF.emitAllocObjectCall takes us close to our final prize in IRGenFunction.cpp:

```cpp
/// Emit a heap allocation.
llvm::Value *IRGenFunction::emitAllocObjectCall(llvm::Value *metadata,
                                                llvm::Value *size,
                                                llvm::Value *alignMask,
                                                const llvm::Twine &name) {
  // For now, all we have is swift_allocObject.
  return emitAllocatingCall(*this, IGM.getAllocObjectFunctionPointer(),
                            {metadata, size, alignMask}, name);
}

static llvm::Value *emitAllocatingCall(IRGenFunction &IGF, FunctionPointer fn,
                                       ArrayRef<llvm::Value *> args,
                                       const llvm::Twine &name) {
  auto allocAttrs = IGF.IGM.getAllocAttrs();
  llvm::CallInst *call =
      IGF.Builder.CreateCall(fn, llvm::ArrayRef(args.begin(), args.size()));
  call->setAttributes(allocAttrs);
  return call;
}
```

emitAllocatingCall demonstrates the actual function call being emitted as an LLVM instruction. But the piece we are really interested in is one of its arguments:

```cpp
IGM.getAllocObjectFunctionPointer()
```

This returns a FunctionPointer referring to the external runtime function swift_allocObject. In the compiled binary, the emitted call targets that symbol, which is resolved to the function’s actual address inside libswiftCore when your program is loaded.

We can use this consistent naming format to track down the IRGen code for any runtime function we like, and perform the same analysis:

get[runtime-abi-instruction]FunctionPointer

The Swift Runtime ABI

The Swift Runtime ABI, or Application Binary Interface, is the API contract, or protocol definition, for the Swift Runtime.

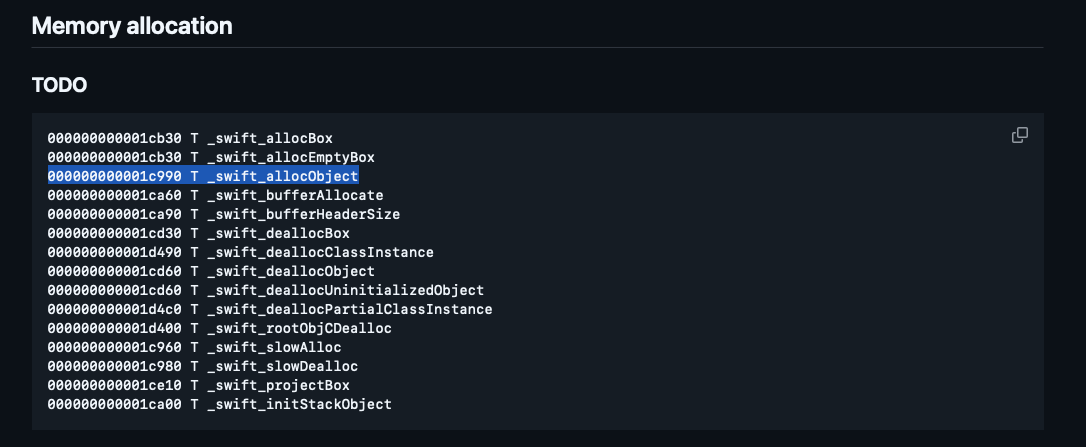

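We can read this contract in Runtime.md: it’s an unassuming list of exported function names, shown alongside the addresses they occupied in the symbol table of one particular build of the library.

The pre-compiled binary for libswiftCore is dynamically linked to your Swift executable at launch. The contract lists the public functions it exports, so the compiler knows exactly which runtime functions it is allowed to call.

getAllocObjectFunctionPointer() is how IRGen refers to one of these functions: it produces a reference to the external swift_allocObject symbol, which becomes the target of the emitted call.

Thanks to the runtime contract, the compiler can rely on a function named swift_allocObject, with the expected signature, being present in the runtime library whenever the running program needs it. It doesn’t hard-code an address such as 0x1c990; that number is only where the symbol happened to sit in one build. Instead, the dynamic linker looks up swift_allocObject in the loaded libswiftCore and binds your call to its actual address.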

This is what I meant when I said the runtime “runs alongside” your own code.
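One way to picture this relationship is a deliberately simplified toy model: the compiled program records only the name of the runtime function it needs, and a loader fills in the call target once the library’s exported symbols are known. Everything here (toy_swift_allocObject, exportedSymbols, CallSite, bind) is hypothetical; real dynamic linkers such as dyld do far more work.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>

using AllocFn = void *(*)(std::size_t);

// Hypothetical stand-in for a function exported by the runtime library.
static char fakeHeap[64];
void *toy_swift_allocObject(std::size_t) { return fakeHeap; }

// The "library" exports its functions by name.
std::map<std::string, AllocFn> exportedSymbols = {
    {"swift_allocObject", &toy_swift_allocObject},
};

// The compiled program only records which symbol each call needs.
struct CallSite {
  std::string symbolName;
  AllocFn target = nullptr; // filled in by the loader
};

// The "loader" resolves a call site by looking up its symbol name.
bool bind(CallSite &site) {
  auto it = exportedSymbols.find(site.symbolName);
  if (it == exportedSymbols.end()) return false; // unresolved symbol
  site.target = it->second;
  return true;
}
```

Binding by name is what lets a rebuilt libswiftCore move its functions around freely, as long as the names and calling conventions in the contract stay the same.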

The Swift Runtime

Runtime.md records the _swift_allocObject symbol like this (on Apple platforms, C symbol names carry a leading underscore, and T marks a global symbol in the library’s text, or code, section):

```
000000000001c990 T _swift_allocObject
```

This allows the Swift code to travel from our initial Memory() class initialisation, into SIL code with the alloc_ref instruction, through to a call into the runtime’s memory management system, which actually allocates the object.

HeapObject

We can look at _swift_allocObject_, the implementation behind swift_allocObject, in HeapObject.cpp to learn what’s really going on.

```cpp
static HeapObject *_swift_allocObject_(HeapMetadata const *metadata,
                                       size_t requiredSize,
                                       size_t requiredAlignmentMask) {
  assert(isAlignmentMask(requiredAlignmentMask));
#if SWIFT_STDLIB_HAS_MALLOC_TYPE
  auto object = reinterpret_cast<HeapObject *>(swift_slowAllocTyped(
      requiredSize, requiredAlignmentMask, getMallocTypeId(metadata)));
#else
  auto object = reinterpret_cast<HeapObject *>(
      swift_slowAlloc(requiredSize, requiredAlignmentMask));
#endif
  ::new (object) HeapObject(metadata);

  // If leak tracking is enabled, start tracking this object.
  SWIFT_LEAKS_START_TRACKING_OBJECT(object);

  SWIFT_RT_TRACK_INVOCATION(object, swift_allocObject);

  return object;
}
```

The function takes the type metadata, the required size, and an alignment mask. As we saw above, these parameters were generated by the compiler based on the $Memory type passed into alloc_ref.

Alignment is a critical concept in computer memory performance. In short, a 64-bit CPU works best when fed data in 64-bit chunks, read from addresses that are a multiple of 8 bytes (64 bits). The runtime expresses alignment as a mask equal to the alignment minus one, so 8-byte alignment gives a mask of 0x7 and 16-byte alignment gives 0xF. The isAlignmentMask assertion just checks that requiredAlignmentMask really has this shape, with only its low bits set, which means the alignment is a power of two.
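The mask arithmetic is easy to sketch in C++. The helpers below are hypothetical names of our own; isValidAlignmentMask reflects the idea behind the runtime’s isAlignmentMask check (a mask of the form 2ⁿ − 1), not its exact source.

```cpp
#include <cassert>
#include <cstddef>

// A valid mask is (alignment - 1) for a power-of-two alignment, so adding
// one to it clears every set bit: 0x7 & 0x8 == 0, but 0x6 & 0x7 != 0.
constexpr bool isValidAlignmentMask(std::size_t mask) {
  return (mask & (mask + 1)) == 0;
}

// Recover the alignment in bytes from its mask.
constexpr std::size_t alignmentFromMask(std::size_t mask) {
  return mask + 1;
}

// Round a size or address up to the next multiple of the alignment.
constexpr std::size_t roundUpToAlignment(std::size_t value, std::size_t mask) {
  return (value + mask) & ~mask;
}
```

Working with masks rather than raw alignments turns "is this aligned?" and "round up to alignment" into single bitwise operations.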

SWIFT_STDLIB_HAS_MALLOC_TYPE is a flag defined in Config.h that checks the OS and platform to determine which memory allocation API will be available.

On iOS, swift_slowAllocTyped allocates the required heap memory for the object. auto object is the C++ way to declare a new variable with type inference; it’s just syntax sugar for writing the more familiar HeapObject* object = ….

The final instance of object is created with ::new (object) HeapObject(metadata). This uses placement new, which constructs a HeapObject at the memory address we already allocated instead of allocating fresh memory.

After some optional instrumentation setup, we finally return a pointer to this newly allocated object.
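Placement new is worth seeing in isolation. This sketch splits allocation and construction into two steps, the same way _swift_allocObject_ does; ToyHeader, allocToyObject and freeToyObject are hypothetical names, and plain malloc() stands in for the runtime’s allocation functions.

```cpp
#include <cassert>
#include <cstdlib>
#include <new>

// A hypothetical, simplified object header used only for illustration.
struct ToyHeader {
  const void *metadata;
  explicit ToyHeader(const void *m) : metadata(m) {}
};

ToyHeader *allocToyObject(const void *metadata) {
  // Step 1: get raw, uninitialised memory (no constructor runs here).
  void *raw = std::malloc(sizeof(ToyHeader));
  if (!raw) std::abort();
  // Step 2: construct the object in place, at the address we already own.
  return ::new (raw) ToyHeader(metadata);
}

// Tear down in reverse order: run the destructor, then free the raw memory.
void freeToyObject(ToyHeader *obj) {
  obj->~ToyHeader();
  std::free(obj);
}
```

Separating the two steps lets the runtime choose how memory is obtained (typed, aligned, or plain malloc) independently of how the header is initialised.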

The HeapObject struct itself is defined in HeapObject.h. This specifies the low-level memory representation of reference types in Swift. It stores the reference counts alongside the isa-style metadata pointer that references type metadata and class information.

You can read a solid technical explanation of the reference counting logic in the RefCount.h doc comments.
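As a rough picture of the layout HeapObject.h describes, here is a simplified sketch assuming a 64-bit platform: a metadata pointer followed by one 64-bit word of packed reference-count bits. SketchHeapObject and SketchMemoryInstance are hypothetical names; the real struct wraps these fields in the HeapMetadata and InlineRefCounts types.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Simplified object header, assuming a 64-bit platform.
struct SketchHeapObject {
  const void *metadata;     // points at the class's type metadata
  std::uint64_t refCounts;  // packed strong/unowned counts and flags
};

// An instance's stored properties are laid out after the header.
struct SketchMemoryInstance {
  SketchHeapObject header;
  // class Memory { } has no stored properties, so nothing follows.
};
```

On a 64-bit platform this header is 16 bytes, which is why even an empty class like Memory still costs a small heap allocation.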

Heap & Syscalls

swift_slowAllocTyped, defined in Heap.cpp, is where the magic* happens: it does more memory alignment checks before carving out memory on the heap.

```cpp
void *swift::swift_slowAllocTyped(size_t size, size_t alignMask,
                                  MallocTypeId typeId) {
#if SWIFT_STDLIB_HAS_MALLOC_TYPE
  if (__builtin_available(macOS 9998, iOS 9998, tvOS 9998, watchOS 9998, *)) {
    void *p;
    // This check also forces "default" alignment to use malloc_memalign().
    if (alignMask <= MALLOC_ALIGN_MASK) {
      p = malloc_type_malloc(size, typeId);
    } else {
      size_t alignment = computeAlignment(alignMask);

      // Do not use malloc_type_aligned_alloc() here, because we want this
      // to work if `size` is not an integer multiple of `alignment`, which
      // was a requirement of the latter in C11 (but not C17 and later).
      int err = malloc_type_posix_memalign(&p, alignment, size, typeId);
      if (err != 0)
        p = nullptr;
    }
    if (!p) swift::crash("Could not allocate memory.");
    return p;
  }
#endif
  return swift_slowAlloc(size, alignMask);
}
```

Depending on the platform, we call either swift_slowAllocTyped and malloc_type_malloc(), or swift_slowAlloc and malloc(). Strictly speaking, these are not syscalls: they are calls into the C memory allocator (libmalloc on Apple platforms), which manages the heap inside your process and only calls into the kernel when it needs more memory.
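Here is a minimal, POSIX-only sketch of the same branching, with the typed malloc_type_* calls swapped for plain malloc() and posix_memalign(). toySlowAlloc, toySlowDealloc and kToyMallocAlignMask are hypothetical: kToyMallocAlignMask assumes malloc() guarantees alignof(std::max_align_t) and stands in for MALLOC_ALIGN_MASK, and the alignment is taken as alignMask + 1, ignoring any special cases the real computeAlignment may handle.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <stdlib.h> // posix_memalign (POSIX, not ISO C++)

// Assumed stand-in for MALLOC_ALIGN_MASK: malloc()'s guaranteed alignment, minus one.
constexpr std::size_t kToyMallocAlignMask = alignof(std::max_align_t) - 1;

// Plain malloc() when its default alignment suffices, an aligned
// allocation otherwise; aborts on failure, loosely mirroring swift::crash.
void *toySlowAlloc(std::size_t size, std::size_t alignMask) {
  void *p = nullptr;
  if (alignMask <= kToyMallocAlignMask) {
    p = std::malloc(size);
  } else {
    std::size_t alignment = alignMask + 1; // assumes a valid power-of-two mask
    if (posix_memalign(&p, alignment, size) != 0) p = nullptr;
  }
  if (!p) std::abort(); // "Could not allocate memory."
  return p;
}

// On POSIX, memory from both malloc() and posix_memalign() is released with free().
void toySlowDealloc(void *p) { std::free(p); }
```

The real swift_slowDeallocImpl calls AlignedFree() for over-aligned blocks instead, which matters on platforms where aligned allocations need a different deallocator.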

malloc() reserves a chunk of process memory to store our HeapObject. The memory that makes up the heap comes from a virtual address space, an abstraction over the hardware RAM; when the allocator runs out of free space, it asks the kernel for more of it. In XNU, the kernel that iOS and macOS use, the regions of this address space are tracked using a red-black tree.

*I hope, by now, you don’t think it’s magic anymore.

Once you understand it, dare I say it’s a little underwhelming? Q: How does Swift allocate objects? A: First it asks the system allocator for some memory, then it creates a struct that stores some numbers and metadata.

I’ll have to replicate this meme to summarise runtime memory allocation.

swift_slowDeallocImpl can also be found in Heap.cpp. It releases memory with free(), or AlignedFree() for over-aligned allocations, handing the block back to the allocator.

```cpp
static void swift_slowDeallocImpl(void *ptr, size_t alignMask) {
  if (alignMask <= MALLOC_ALIGN_MASK) {
    free(ptr);
  } else {
    AlignedFree(ptr);
  }
}
```

How Language Features Are Implemented

There is no magic at all when it comes to runtime memory management. It’s just code. Not even that much code!

To instantiate a reference type, the runtime calls into the system memory allocator, which carves a block out of the heap (asking the kernel for more virtual memory when it needs to). The runtime then constructs and returns a heap object which stores reference counts and points to type metadata.

What if you mess up your unowned references and try to access a HeapObject that is already deinitialising? The runtime handles that error too, terminating your app’s process via swift_abortRetainUnowned.

```cpp
void swift::swift_unownedCheck(HeapObject *object) {
  if (!isValidPointerForNativeRetain(object)) return;
  assert(object->refCounts.getUnownedCount() &&
         "object is not currently unowned-retained");

  if (object->refCounts.isDeiniting())
    swift::swift_abortRetainUnowned(object);
}
```

This is what I meant when I said we’d understand “how core language features are implemented”.
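To see the state this check relies on, here is a toy model of the reference counts in C++. ToyRefCounts, UnownedAccess, toyUnownedCheck and toyReleaseStrong are hypothetical; the real runtime packs these counts and flags into bit fields described in RefCount.h, and aborts via swift_abortRetainUnowned rather than returning a value. Starting the unowned count at 1 loosely follows RefCount.h’s description of an extra unowned count held on behalf of strong references.

```cpp
#include <cassert>
#include <cstdint>

// Toy reference-count state for a single object.
struct ToyRefCounts {
  std::uint32_t strong = 1;   // a new object starts with one strong reference
  std::uint32_t unowned = 1;  // extra count held on behalf of strong references
  bool deiniting = false;
};

enum class UnownedAccess { Ok, Abort };

// Same shape as swift_unownedCheck: reading through an unowned reference
// is only allowed while the object is not yet deinitialising.
UnownedAccess toyUnownedCheck(const ToyRefCounts &rc) {
  assert(rc.unowned > 0 && "object is not currently unowned-retained");
  return rc.deiniting ? UnownedAccess::Abort : UnownedAccess::Ok;
}

// Dropping the last strong reference starts deinitialisation.
void toyReleaseStrong(ToyRefCounts &rc) {
  if (--rc.strong == 0) rc.deiniting = true;
}
```

An unowned reference can outlive every strong reference, so the runtime has to check this flag on access; that check is what turns a use-after-deinit into a deterministic crash.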

Conclusion

The Swift Runtime, a.k.a libswiftCore, is a C++ library that runs alongside all Swift programs. It’s dynamically linked to your app binary at launch and facilitates core language features.

That’s the definition we started with, but it was pretty handwavey. So I set out to understand what we mean by “runs alongside” and “facilitates core language features”.

The Swift Runtime runs alongside your code by coordinating with the compiler. The ABI specifies exactly which functions the runtime exports and how to call them. The compiler emits calls to those runtime functions in your compiled code, and the dynamic linker binds them to their real addresses inside libswiftCore when your app is loaded.

The Swift Runtime facilitates core language features by, somewhat underwhelmingly, implementing them in code. This implementation generally boils down to storing metadata and wrapping calls into system libraries and the kernel.

Thank you for reading Jacob’s Tech Tavern. I hope you had fun on this spelunk into the Swift source code!


