A Tech Interview That Doesn't Suck

Spoiler alert: not LeetCode!


Hiring is hard. Damn hard.

The smaller the team, the higher the stakes. The right hires create leverage that helps everyone ship faster; bad hires drag productivity down for everyone.

The Tech Test — a practical assessment of your coding skills — is the beating heart of the software engineering interview loop. From the other side of the keyboard, it isn’t as stressful, but it’s tough to make a binary decision based on imperfect information — a brief look at your coding skills.

With dozens (or hundreds) of candidates in play, it’s understandable why most tech companies fall back on LeetCode to enforce a lowest common denominator of technical skills.

But I wanted to find a better way.

What makes a good Tech Test?

First, let’s agree on what we mean by a “good” tech test.

For candidates, it needs to be brief, not overly stressful, and give them the opportunity to demonstrate their skills.

For interviewers, it needs to effectively test for skills needed for the role, and allow us to appropriately level candidates.

Types of Test

I’ve run a lot of hiring loops in my time. There are a few common flavours — the take-home test; the theory interview; the live coding exercise. Let’s get this out of the way quickly: in the age of ChatGPT, take-home tests are a massive waste of time. Be better.

Theory interviews have their place, particularly at specialised or very senior roles, but I’m mainly trying to focus on the coding side here. In my opinion, a solid interview loop contains a bit of both: you can design your coding exercises as jumping-off points to go deeper into theory.

Live-coding exercises are really the only game in town when it comes to assessing coding skill, so let’s focus on optimising this.

There are 2 schools of thought when it comes to coding exercises:

1. Assess fundamental coding skills.

2. Test aptitude for day-to-day work.

Testing for fundamentals sounds, on paper, the ideal way to assess candidates. You want engineers that can solve problems, so give them a problem to solve.

In practice, however, this takes the form of an algorithmic interview, where the solution is some kind of data structure or algorithm. This rapidly descends into the highly game-able world of LeetCode, where candidates work out the answer because they have seen the question before.

I’ve found the most accurate positive hiring signals to come from live coding exercises that closely approximate day-to-day work. My favourite format looks a little like this:

“Here’s a very small codebase. There’s some stuff wrong with it, so we will pair-program for an hour to make it better.”

The Critical Path

A common issue with tech interviews is the critical path.

Let’s say your interview starts with a 403 HTTP error returning from an API call, due to a CORS issue. If you’ve handled this issue before, you might breeze through and look like a genius. If not, you’ll need to flex your problem solving muscles.

This can be a pretty nice approach — testing debugging and problem solving skills is essential — but if the rest of the test is inaccessible without solving the CORS issue, then you might be rejecting solid candidates* who just happen to be weak on browser security mechanisms.

*as well as subjecting yourself to an extremely awkward 45 minutes. Pulling the plug mid-interview is a valuable skill to buy back time for you both.

Unless the problem might reasonably be universal knowledge for seniors in your field, you want to avoid specific gotcha questions early on which candidates could fail by default.

This is the last time I’ll mention it, but that’s mostly why I don’t like LeetCode. The whole problem is a critical path — you’re blocked if you haven’t seen that specific dynamic programming problem before. We like to act like we’re inventing a novel solution, but it’s all kayfabe.

Open-ended test formats avoid a critical path, so candidates can’t get stuck in the sand-trap of a trivial knowledge gap. This also means you can stack the test with several types of problems and several levels of complexity. This allows more junior candidates to pick and choose where to demonstrate their skills, while very senior candidates can demonstrate their mastery over your feeble attempts at gotcha questions.

That’s right — this approach to the tech test unlocks easy levelling.

There’s one final principle to consider: if you’re designing a test you’re going to be watching for 10 hours a week, it should be enjoyable for you to administer. Naturally, I themed this test around Bloodborne, my favourite FromSoftware game.

Designing The Test

Introducing… the non-crappy tech test.

I tried really hard to make the first half of this article tech-stack agnostic; but I’m returning to the comforting embrace of iOS for my worked test example.

If you’re not a gigantic iPhone stan, I hope you’ll still find value in how I implement the principles we discussed. If you can’t stand Apple, then instead feel free to hate-read Apple Is Killing Swift.

Every iOS technical test is essentially the same: “fetch data from an API and display in a table view”.

But there’s a lot of nuance we can layer onto this approach. For modern iOS roles, we’ll probably heavily involve SwiftUI and Swift Concurrency.

We want to give candidates the opportunity to demonstrate junior, mid-level, or senior competency, as well as demonstrating theory knowledge, debugging, and core problem solving skills.

How are we meant to test for theory? We work backwards: Contrive situations where the solution requires a theoretical understanding.

Let’s start building up the test.

Basic Networking & Debugging

Task 1: Fetch Boss data from the backend to display in the UI.

I’m fond of this question because it’s a pretty basic bit of problem-solving that’s universally applicable for any software engineering candidate.

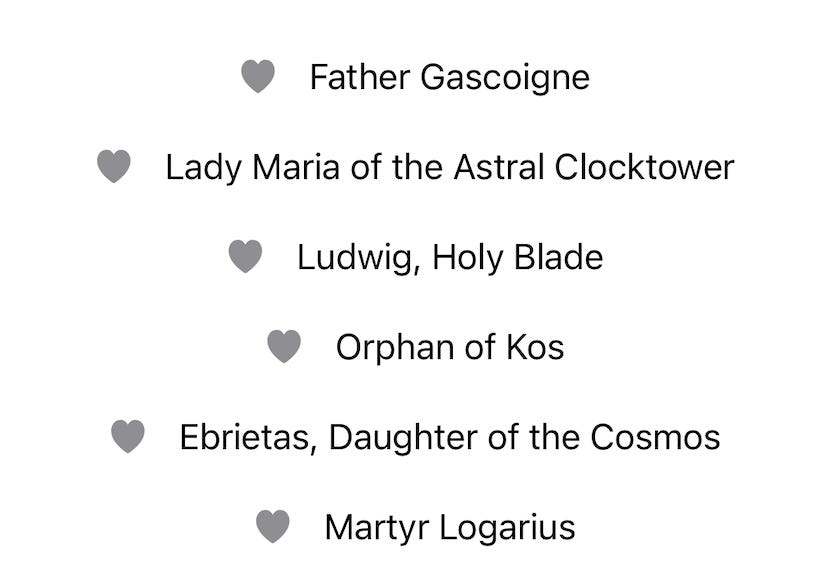

The main view at the start of the app displays a collection of boss monsters.

    struct BossesView: View {
        @ObservedObject var viewModel = BossesViewModel()

        var body: some View {
            let _ = Self._printChanges()
            VStack {
                ForEach(viewModel.bosses) { boss in
                    BossView(boss: boss, likes: $viewModel.likes)
                }
            }
        }
    }

Self._printChanges() is an underscore-prefixed debugging API, which acts as a breadcrumb clue to help the candidate later in the test.

The view model contains pre-populated services, some @Published properties, plus a load() method.

    final class BossesViewModel: ObservableObject {
        @Published private(set) var greeting: String = ""
        @Published private(set) var bosses: [Boss] = []
        @Published var likes: [Boss: Bool] = [:]

        @Injected(\.userService) private var userService
        @Injected(\.bossService) private var bossService

        func load() async {
            do {
                let user = try await userService.fetchUserInfo()
                greeting = "Hello, \(user.firstName)"
                bosses = try await bossService.fetchBosses()
            } catch {
                print(error)
            }
        }
    }

The interview will end very quickly if the candidate doesn’t know to call load() from a .task view modifier, or from a Task inside .onAppear (one of the most basic SwiftUI techniques).

After seeing a crash, a candidate should look into the service and find an empty fetchBosses() function.

    final class BossServiceImpl: BossService {
        @Injected(\.authService) private var authService

        private let bossURL = URL(string: "https://jacobsapps.github.io/tech-test/Resources/bosses.json")!

        func fetchBosses() async throws -> [Boss] { fatalError() }
    }

They can implement a standard HTTP network request, and might run into a 401 Unauthorized HTTP error.

A solid candidate will set breakpoints (or print statements if they’re an O.G.) inside the service to read the error code and tell me they need an access token, which they’ll be able to find in authService.
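Reading the error code is easier when the service surfaces it. As a minimal sketch (NetworkError and validate(statusCode:) are hypothetical names, not part of the test project), a candidate might map the status code onto a typed error before decoding:

```swift
/// Hypothetical error type for illustration; the test project may model errors differently.
enum NetworkError: Error, Equatable {
    case unauthorized            // 401: the request is missing a valid access token
    case unexpectedStatus(Int)   // any other non-2xx status code
}

/// Throws when the status code does not indicate success.
func validate(statusCode: Int) throws {
    switch statusCode {
    case 200..<300:
        return
    case 401:
        throw NetworkError.unauthorized
    default:
        throw NetworkError.unexpectedStatus(statusCode)
    }
}
```

Inside fetchBosses(), this could be called with the statusCode of the response cast to HTTPURLResponse, so that print(error) in the view model reports unauthorized rather than whatever decoding error the 401 response body happens to produce.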

This starter problem neatly allows candidates to flex their iOS networking and debugging fundamentals, where they should be able to call the API, notice the auth issue, slap a header on the HTTP request and decode the JSON without breaking a sweat.

    func fetchBosses() async throws -> [Boss] {
        // The API rejects unauthenticated requests, so fetch a token first.
        let token = try await authService.getBearerToken()
        var request = URLRequest(url: bossURL)
        request.addValue("Bearer \(token)", forHTTPHeaderField: "Authorization")
        // data(for:) returns (Data, URLResponse); only the data is needed here.
        let data = try await URLSession.shared.data(for: request).0
        return try JSONDecoder().decode([Boss].self, from: data)
    }

I’m being a bit of a hypocrite here: this first challenge is a critical path, since the candidate is blocked if they can’t get data into the app. However, fetching data from an API is such a fundamental iOS skill that you can terminate the interview here and save time for everyone.
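The decoding step in the solution relies on Boss being Decodable. The article doesn’t show the model or the contents of bosses.json, so the field names below are assumptions; the Identifiable and Hashable conformances are inferred from Boss being used in ForEach and as a dictionary key. A rough sketch:

```swift
import Foundation

/// Assumed shape of the model; `id` and `name` are hypothetical field names.
struct Boss: Decodable, Identifiable, Hashable {
    let id: Int
    let name: String
}

/// Decodes a JSON array of bosses, throwing a DecodingError on malformed input.
func decodeBosses(from data: Data) throws -> [Boss] {
    try JSONDecoder().decode([Boss].self, from: data)
}
```

Pulling decoding into its own function like this also gives the candidate something to unit test without touching the network.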

With the data fetched, the candidate is treated to this beautiful barebones layout. They’ll soon give it a lick of paint.

SwiftUI Skills

Task 2: Implement the design shown in Appendix #1.

When testing candidates on SwiftUI, we obviously need to check they can build and structure views. Fortunately, Apple defaults do a lot of heavy lifting, so it’s very quick to make a basic UI if you know what you’re doing. We can level candidates into basic VStack and HStack users vs. those who can easily apply .overlay(alignment:), ButtonStyle, and AsyncImage.

If we were hiring for an advanced SwiftUI role, we might even create a design spec that requires PreferenceKey, AnchorPreference, or perhaps request some kind of accessibility work.

Task 3: Our view model is an @ObservedObject. Is this a problem?

After creating the design (and hopefully not spaffing away 75% of the allotted time), candidates can answer the age-old question: StateObject vs ObservedObject.

If they respond with the cop-out @Observable answer, you can retaliate by checking they understand how this works under the hood to change redrawing behaviour.

Task 4: Evaluate whether any unnecessary redraws are happening.

This more advanced task separates the wheat from the chaff. The answer requires a solid theoretical understanding of when redraws are triggered across SwiftUI views.

Since I’m being nice, I added let _ = Self._printChanges() into the body of my views (but you don’t have to be nice). This method allows you to debug why a view has redrawn. In this instance, tapping the <3 button in one BossView will trigger a redraw across all the sibling views.

    BossView: @self, _likes changed.
    BossView: @self, _likes changed.
    BossView: @self, _likes changed.
    BossView: @self, _likes changed.
    BossView: @self, _likes changed.
    BossView: @self, _likes changed.
    BossView: @self, _likes changed.

Leaving this clue out, and forcing devs to profile the problem, is also perfectly valid. Either way, implementing the fix requires a relatively strong understanding as well as decent refactoring skills.

Swift Concurrency Aptitude

Task 5: Address the concurrency warnings.

The way the original project is built has a common problem — warnings around updating UI from a background thread.

This simple threading problem serves as a nice warm-up to more advanced concurrency issues later on, but any level of candidate should be able to explain the problem.

The solution is to mark the view model, or the load() function itself, with the @MainActor attribute. We might also take the time to go slightly deeper on actors and ask whether await bossService.fetchBosses() blocks the main thread while fetching data.
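To make the @MainActor point concrete, here is a stripped-down sketch. GreetingViewModel and the fetchFirstName closure are hypothetical stand-ins for the article’s view model and user service, with SwiftUI and Combine left out so the snippet runs on its own:

```swift
/// Stand-in for the article's view model; every member is isolated to the main actor.
@MainActor
final class GreetingViewModel {
    private(set) var greeting = ""

    /// `fetchFirstName` is a hypothetical closure standing in for userService.fetchUserInfo().
    func load(fetchFirstName: @Sendable () async throws -> String) async {
        do {
            // `await` suspends load() instead of blocking the main thread,
            // so the main actor is free to do other work until the fetch returns.
            let firstName = try await fetchFirstName()
            // Execution resumes on the main actor, so mutating UI state here is safe.
            greeting = "Hello, \(firstName)"
        } catch {
            print(error)
        }
    }
}
```

The property write happens on the main actor without any explicit dispatch, which is the behaviour the concurrency warnings are asking for.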

Task 6: Fetch User and Boss data in parallel.

Our initial implementation in load() contains a basic flaw: the second fetch doesn’t start until the first has finished.

    func load() async {
        let user = try await userService.fetchUserInfo()
        greeting = "Hello, \(user.firstName)"
        bosses = try await bossService.fetchBosses()
        // ...

Solving this displays aptitude for tools in Swift Concurrency aside from the basic async/await syntax, and candidates might come up with various approaches to the problem.
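One of those approaches is async let. The sketch below uses stand-in models and stubbed services with simulated latency (all hypothetical, not the test project’s real types) to show both fetches starting before either is awaited:

```swift
// Stand-in models and services; the real ones live in the test project.
struct User: Sendable { let firstName: String }
struct Boss: Sendable { let name: String }

func fetchUserInfo() async throws -> User {
    try await Task.sleep(nanoseconds: 100_000_000) // simulate network latency
    return User(firstName: "Hunter")
}

func fetchBosses() async throws -> [Boss] {
    try await Task.sleep(nanoseconds: 100_000_000) // simulate network latency
    return [Boss(name: "Cleric Beast"), Boss(name: "Father Gascoigne")]
}

func loadInParallel() async throws -> (greeting: String, bosses: [Boss]) {
    // Both child tasks start running as soon as they are declared...
    async let user = fetchUserInfo()
    async let bosses = fetchBosses()
    // ...and this single await suspends until both have finished.
    let (loadedUser, loadedBosses) = try await (user, bosses)
    return ("Hello, \(loadedUser.firstName)", loadedBosses)
}
```

A task group would also work, but async let tends to read more naturally when there is a small, fixed number of calls with different return types.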

Even better, this task serves as a natural segue into my favourite theory iOS interview question:

“Tell me about the tools available in Swift Concurrency, and when you might use them”

Now we get to the hardest concurrency question of the lot.

Task 7: The AuthService introduces a bottleneck. Diagnose the problem and ensure behaviour matches the sequence diagram in Appendix #2.

The basic auth service begins life looking like this:

    actor AuthServiceImpl: AuthService {
        var tokenTask: Task<String, Error>?

        private let authURL = URL(string: "https://jacobsapps.github.io/tech-test/Resources/auth.json")!

        func getBearerToken() async throws -> String {
            print("Fetch auth token")
            let token = try await fetchToken()
            print("Auth token retrieved")
            return token
        }
    }

The print statements act as breadcrumbs to illustrate the bottleneck: the two API calls each fetch their own auth token.

    Fetch auth token
    Fetch auth token
    Auth token retrieved
    Auth token retrieved

In a real production app, this might cause issues like higher latency, unnecessary load on your backend auth services, or even invalidated tokens and failed network requests.

I left a tokenTask property here as a clue, but this can easily be removed. The solution involves a solid understanding of the re-entrant nature of actors and how Task properties behave.
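The model answer isn’t shown here, so what follows is only one plausible shape for it: store the in-flight Task on the actor and have later callers await the same task. CoalescingAuthService, FetchCounter, and the injected fetchToken closure are hypothetical names, and token expiry and retrying after a failure are deliberately left out:

```swift
actor CoalescingAuthService {
    private var tokenTask: Task<String, Error>?
    private let fetchToken: @Sendable () async throws -> String

    init(fetchToken: @escaping @Sendable () async throws -> String) {
        self.fetchToken = fetchToken
    }

    func getBearerToken() async throws -> String {
        // A fetch is already in flight (or finished): await it instead of starting another.
        if let existing = tokenTask {
            return try await existing.value
        }
        // There is no suspension point between the check above and the assignment below,
        // so a re-entrant caller cannot slip in and start a second fetch.
        let fetchToken = self.fetchToken
        let task = Task { try await fetchToken() }
        tokenTask = task
        return try await task.value
    }
}

/// Counts how many times the simulated network call actually runs.
actor FetchCounter {
    private(set) var count = 0
    func increment() { count += 1 }
}

/// Fires two concurrent token requests and returns the number of underlying fetches.
func countFetchesForTwoCallers() async throws -> Int {
    let counter = FetchCounter()
    let service = CoalescingAuthService {
        await counter.increment()
        try await Task.sleep(nanoseconds: 50_000_000) // simulate network latency
        return "token"
    }
    async let first = service.getBearerToken()
    async let second = service.getBearerToken()
    _ = try await (first, second)
    return await counter.count
}
```

The key detail is that the task is stored before the first await. If the actor awaited the network call directly, a second caller could enter getBearerToken() during the suspension and trigger a duplicate fetch, which is exactly the bottleneck the print statements reveal.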

If you want to dive deeper into the inner workings of Actors, you can read Advanced Swift Actors: Re-entrancy & Interleaving.

To learn more about advanced use cases for Task, my full subscribers can read Advanced Swift Concurrency or How to test a Task.

Devil’s Advocate

There’s a clear flaw with this tech test format: you’re largely testing for framework knowledge as opposed to skills.

The classic adage goes: a good engineer can pick up a language or framework, so it’s unfair to test for their ability with a tool.

This is absolutely true; however, I’d argue it’s still the fairest format. If you were testing for performance optimisation, security skills, pure debugging, writing, or ARM assembly, you would be biasing yourself towards candidates with a particular set of skills.

Testing aptitude with the most popular Swift language features and UI framework is probably the closest you can get to a fair test that assesses suitability for a job using those features and frameworks.

Furthermore, if you don’t bias a little bit towards a framework, you kneecap yourself since it’s harder to use a pre-made codebase. Forcing candidates to work “from scratch” subjects them to a ton of boilerplate, as well as making it hard to assess their aptitude for solving more interesting and difficult problems.

Conclusion

Hiring is hard. Damn hard.

I interviewed with Meta while job-hunting this year, and was interested to learn that they have the same technical bar from E3 (grad) to E6 (staff). Candidates get the same algorithmic exercises, and are held to the same pass/fail standard, with levelling done entirely through measuring scope in other interviews.

While this is neat, and perhaps necessary if you’re a massive corporation, you can do so much better than a cookie-cutter algorithmic interview if you have influence on your own process.

Technical tests are the best way to check a candidate’s suitability for your job, but you need to think hard to get it right. The approach I’ve had the most success with is a live-coding exercise that has a candidate fix issues with a small codebase.

You can test candidates on problems with various levels of complexity, allowing them to demonstrate aptitude, as well as problem solving, debugging, speed, and theoretical knowledge.

You can try the test yourself on GitHub, or full subs can see the model answers and explanations in Solving The SwiftUI Interview.

I’ve written about tech hiring before, on the candidate side, in The D.E.N.N.I.S. system: Résumé tips for Senior Devs.

Happy hiring!

Some great ideas in here. As someone that came up with iOS screener questions recently, it’s very difficult to determine what to ask in a short period of time to form a boolean conclusion on the candidate.

Something I think we can all agree on is that coding interviews should resemble real-world conditions as much as possible. With that in mind, would you allow candidates to use AI chatbots or Copilot? How would that affect your judgment of them?

It feels like, as long as they come up with great code in a reasonable time and can demonstrate thorough understanding, then it should not matter how they get there. I would not prevent the candidate using Google or referring to documentation, or using an IDE's autocomplete, so why prevent access to another tool any productive developer ought to be using.

But I also feel like these new tools can be so good, it makes it harder for the interviewer to really get to the bottom of whether the candidate understands what they've done.