The Synchronization Framework in Swift 6

Performance testing the new concurrency primitives

The Synchronization Framework contains two low-level concurrency primitives — Mutex and Atomics. Today, we’ll understand why atomics and mutexes are useful, learn how to use them in your own code, and compare their performance to Actors.


Swift 6 and iOS 18 introduced the Synchronization framework, to surprisingly little fanfare. Seriously, barely even a shout-out at dub-dub. I have my work cut out for me.

Synchronization contains two low-level concurrency primitives — Mutex and Atomics.

These features could only be introduced with Swift 6 because they are implemented using the brand-new generic ownership mechanics.

Today, we’ll understand why atomics and mutexes are useful, and learn how to use them in your own code.

Finally, we’ll profile these primitives to understand their performance compared to the high-level synchronization mechanism in Swift Concurrency: Actors.

Synchronization and Ownership

Let’s check out the API signatures for Mutex and Atomic.

@frozen public struct Mutex<Value> : ~Copyable where Value : ~Copyable {

public init(_ initialValue: consuming sending Value)

}

Mutex is ~Copyable, and generic over Value, which may itself be ~Copyable. Mutex may also consume its initial value, if it’s noncopyable.

Swift is really getting away from me; I just had to spend 15 minutes working out what the sending keyword did. Is Apple asking Gen Z to pick out keywords?

It marks an argument as safe to pass across isolation domains, e.g. when passed into an actor from outside.

@frozen public struct Atomic<Value> where Value : AtomicRepresentable {

public init(_ initialValue: consuming Value)

}

Atomic is generic over an AtomicRepresentable type, and consumes any noncopyable Value with which it is initialised.

Noncopyable types and Consuming

Noncopyable types were introduced with Swift 5.9. These add Rust-style ownership semantics to Swift code, which offer performance gains for embedded systems programming by eliminating the runtime overhead associated with copying values.

It’s essentially a form of manual memory management.

All types in Swift are copyable by default. Think of it like an implicit protocol conformance to Copyable. This imaginary protocol defines the ability to copy the type’s value or reference when passed around.

Value types can be declared noncopyable using the ~Copyable anti-conformance. Uh, apparently this is called a negative constraint.

Noncopyable explicitly marks value types which can’t be copied as part of variable assignment or function-argument-passing.

Function parameters marked consuming take ownership of noncopyable values and invalidate the original instance. Attempting to use the original value will result in a compiler error.
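To make that concrete, here’s a minimal sketch of a noncopyable value being consumed. FileToken, redeem and consumeDemo are hypothetical names invented for this illustration; they aren’t part of any framework.

```swift
// Hypothetical noncopyable type, for illustration only.
struct FileToken: ~Copyable {
    let id: Int
}

// `consuming` takes ownership of the token; the caller's binding
// can't be used once it has been passed in.
func redeem(_ token: consuming FileToken) -> Int {
    token.id
}

func consumeDemo() -> Int {
    let token = FileToken(id: 42)
    let redeemedID = redeem(token)
    // print(token.id) // compile error: 'token' used after consume
    return redeemedID
}

print(consumeDemo())
```

The interesting part is the commented-out line: the mistake is caught by the compiler, not at runtime.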

With Swift 6, noncopyable types now work with generics, allowing Mutex to work with any type.

We can see this negative constraint on its wrapped Value:

where Value: ~Copyable

This means that Value is not subject to the Copyable constraint. It actually removes the assumption that the value passed in is copyable, so the type inside the Mutex can be either copyable or noncopyable.

Mutex therefore works just as well when initialised with copyable values. In that case, the consuming function parameter doesn’t do anything; you’re still able to use the value elsewhere.
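Here’s a rough sketch of both cases side by side, assuming a toolchain and deployment target where Synchronization is available. Ticket and mutexDemo are hypothetical names used only for this example.

```swift
import Synchronization

// Hypothetical noncopyable type, used only for illustration.
struct Ticket: ~Copyable {
    var uses = 0
}

func mutexDemo() -> (copyableCount: Int, ticketUses: Int) {
    // Copyable value: `initial` is still usable after being passed in.
    let initial = [1, 2, 3]
    let numbers = Mutex(initial)
    numbers.withLock { $0.append(initial.count) }

    // Noncopyable value: ownership moves into the Mutex.
    let ticket = Mutex(Ticket())
    ticket.withLock { $0.uses += 1 }

    return (numbers.withLock { $0.count }, ticket.withLock { $0.uses })
}

let demoResult = mutexDemo()
print(demoResult.copyableCount, demoResult.ticketUses)
```

The array can still be read after it’s handed to the Mutex because arrays are copyable; the Ticket only ever lives inside its Mutex.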

For more on copyable generics, check out Consume noncopyable types in Swift from WWDC 2024.

Now let’s look at these low-level primitives in detail.

Mutex

Mutex is an abbreviation of “mutual exclusion”.

Mutexes protect shared mutable state by restricting access to one thread at a time. This is implemented by locking: the first thread accessing the mutex causes it to lock. Subsequent concurrent threads are blocked, waiting for the mutex to unlock access once the first thread is finished.

This guarantees that threads enjoy exclusive access to the value inside the mutex; much like you can enjoy exclusive access to Quick Hacks if you’re a paid subscriber to Jacob’s Tech Tavern.

Why are mutexes useful?

Let’s create a simple singleton cache in our code.

class Cache {

static let shared = Cache()

private init() {}

private var cache: [Int: Int] = [:]

func get(_ key: Int) -> Int? {

cache[key]

}

func set(_ key: Int, value: Int) {

cache[key] = value

}

}

This code includes a common, dangerous risk: simultaneous access to the cache itself by multiple threads. It’s trivial to break this cache:

let cache = Cache.shared

await withTaskGroup(of: Void.self) { group in

for i in 0..<10_000 {

group.addTask {

cache.set(Int.random(in: 0..<100), value: i)

}

}

}

This code immediately crashes out with EXC_BAD_ACCESS, with multiple threads calling set simultaneously to write to the dictionary.

Mutex uses a lock which protects your cache from unsafe simultaneous access. We can convert our cache to use the new Mutex:

import Synchronization

final class MutexCache {

static let shared = MutexCache()

private init() {}

private let cache = Mutex([Int: Int]())

func get(key: Int) -> Int? {

cache.withLock {

$0[key]

}

}

func set(int: Int, key: Int) {

cache.withLock {

$0[key] = int

}

}

}

It looks largely identical, except our operations on the Mutex are wrapped in withLock to ensure we acquire a lock before touching the cache. This blocks threads which attempt concurrent access, until the Mutex unlocks.

The code in the closure is the “critical section” where mutual exclusion is enforced. Mutex also includes withLockIfAvailable, which attempts to acquire the lock but returns nil if it’s already locked.
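As a rough sketch of where withLockIfAvailable might fit, here’s a best-effort counter that skips the update rather than waiting when another thread holds the lock. The stats dictionary and recordIfUncontended are hypothetical names for this example.

```swift
import Synchronization

// Hypothetical best-effort event counter.
let stats = Mutex([String: Int]())

/// Records the event only if the lock is free right now.
/// Returns false when the update was skipped.
func recordIfUncontended(_ event: String) -> Bool {
    // withLockIfAvailable returns nil when the lock couldn't be acquired.
    let result: Void? = stats.withLockIfAvailable {
        $0[event, default: 0] += 1
    }
    return result != nil
}

let recorded = recordIfUncontended("tap")
let tapCount = stats.withLock { $0["tap", default: 0] }
print(recorded, tapCount)
```

This only makes sense when dropping the occasional update is acceptable; for anything that must be recorded, plain withLock is the safer choice.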

extension Mutex where Value : ~Copyable {

public borrowing func withLock<Result, E>(

_ body: (inout sending Value) throws(E) -> sending Result

) throws(E) -> sending Result where E : Error, Result : ~Copyable

public borrowing func withLockIfAvailable<Result, E>(

_ body: (inout sending Value) throws(E) -> sending Result

) throws(E) -> sending Result? where E : Error, Result : ~Copyable

}

These functions are borrowing, which means they gain read-only access to self. They borrow ownership of the Mutex and mutate the inout parameter Value.

I’ll do a proper piece on practical Swift ownership once I get my hands on a 264kB RAM Raspberry Pi Pico.

The Critical Section

If you are using Mutex in your projects, the most important thing is to keep the critical section in the withLock closure small, so competing threads aren’t blocked for too long.

Avoid using complicated O(N²) algorithms in this critical section.

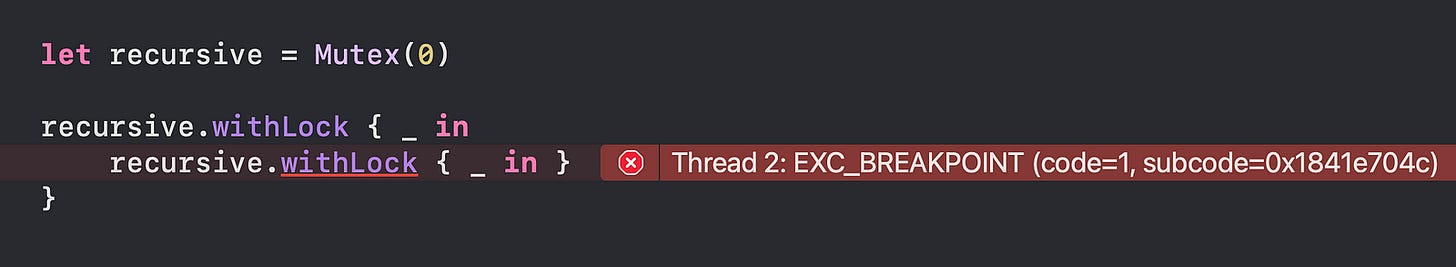

Well; maybe that’s the second most important thing: the most important is probably to avoid recursive calls to withLock. This will crash on Apple platforms, but it’s technically undefined behaviour and platform-dependent. You might just end up deadlocking your process.
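One way to keep the critical section small is to do the slow work outside the lock and only take it for the dictionary read and write. This is a sketch under that assumption; squares, expensiveSquare and cachedSquare are hypothetical names, and expensiveSquare stands in for genuinely slow work.

```swift
import Synchronization

// Hypothetical memoisation cache.
let squares = Mutex([Int: Int]())

// Stand-in for an expensive computation.
func expensiveSquare(_ n: Int) -> Int {
    n * n
}

func cachedSquare(_ n: Int) -> Int {
    // Critical section 1: a quick dictionary lookup.
    if let hit = squares.withLock({ $0[n] }) {
        return hit
    }
    // The slow part runs outside the lock, so other threads aren't blocked.
    let value = expensiveSquare(n)
    // Critical section 2: a quick dictionary write.
    squares.withLock { $0[n] = value }
    return value
}

print(cachedSquare(12))
print(cachedSquare(12))
```

The trade-off is that two threads might both miss and compute the same value. That’s usually acceptable when the work is idempotent, and it also avoids the temptation to call withLock from inside withLock.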

Mutex vs Actors vs OSAllocatedUnfairLock

Even today, there’s a lot of controversy in the iOS community as to how to spin up a cache. The modern high-level approach might be an actor:

actor ActorCache {

static let shared = ActorCache()

private init() {}

private var cache: [Int: Int] = [:]

func get(_ key: Int) -> Int? {

cache[key]

}

func set(_ key: Int, value: Int) {

cache[key] = value

}

}

If you’re on Apple platforms, you’ll have access to OSAllocatedUnfairLock, a low-level locking mechanism evolved from the classical os_unfair_lock.

import os

final class LockCache {

static let shared = LockCache()

private var cache: [Int: Int] = [:]

private let lock = OSAllocatedUnfairLock()

func get(_ key: Int) -> Int? {

lock.lock()

defer { lock.unlock() }

return cache[key]

}

func set(_ key: Int, value: Int) {

lock.lock()

defer { lock.unlock() }

cache[key] = value

}

}

With all these options, what should we be defaulting to? Let’s benchmark the three approaches to help inform our decision.

Performance Testing

Let’s measure performance writing to a cache with lots of contention:

// Random list of 10m cache keys in the range 0..<50

let indices = (0..<10_000_000).map { _ in Int.random(in: 0..<50) }

We’ll run 10 million write operations against each cache from inside a task group.

To track execution time, I’m using DispatchTime and uptimeNanoseconds. I’m capable of learning my lesson.

func logExecutionTime(_ log: String, block: () async -> Void) async {

let start = DispatchTime.now()

await block()

let end = DispatchTime.now()

let diff = Double(end.uptimeNanoseconds - start.uptimeNanoseconds)/1_000_000_000

print(log, String(format: "%.3f", diff))

}

Finally, we need to come up with a way to run our code in parallel to ensure a lot of contention. This runs the 10m tasks in parallel, evenly distributed across all active CPU cores:

func processAcrossCores(

name: String,

total: Int = 10_000_000,

operation: @escaping @Sendable (Int) async -> Void

) async {

await logExecutionTime(name) {

await withTaskGroup(of: Void.self) {

let cores = ProcessInfo.processInfo.activeProcessorCount

let tasksPerCore = total / cores

for i in 0..<cores {

$0.addTask {

let start = i * tasksPerCore

let end = (i + 1) * tasksPerCore

for j in start..<end {

await operation(j)

}

}

}

}

}

}

A special shoutout to Dave Poirier, who pointed out the problem with my initial approach: I created 10m tasks in a taskGroup, leading to most of the running time being taken up by concurrency-related overhead rather than the actual locking mechanisms.

Here’s the code I used; you’re welcome to give it a try yourself.

import Synchronization

let mutexCache = MutexCache.shared

let actorCache = ActorCache.shared

let lockCache = LockCache.shared

for total in [

1000,

10_000,

100_000,

1_000_000,

10_000_000

] {

// Control to test the time for the same number of 'empty' operations

await processAcrossCores(name: "Control", total: total) { _ in }

await processAcrossCores(name: "Actor", total: total) { index in

await actorCache.set(indices[index], value: index)

}

await processAcrossCores(name: "Lock", total: total) { index in

lockCache.set(indices[index], value: index)

}

await processAcrossCores(name: "Mutex", total: total) { index in

mutexCache.set(int: indices[index], key: index)

}

}

Here are the results, in seconds, tabulated for your pleasure.

| # items | Mutex | Actor | Lock | Control |
|-------------|---------|---------|---------|---------|
| 10 million | 6.334 | 8.318 | 4.415 | 0.328 |
| 1 million | 0.574 | 0.831 | 0.435 | 0.034 |
| 100k | 0.045 | 0.080 | 0.045 | 0.004 |
| 10k | 0.006 | 0.008 | 0.004 | 0.001 |
| 1000 | 0.001 | 0.001 | 0.001 | 0.000 |

Performance was not drastically different between these approaches, especially between Mutex and Locks*, which had very similar speeds with under 1m operations.

*erm, as it happens, Mutex is actually implemented using os_unfair_lock on Apple platforms.

In this very simple, slightly contrived, caching example, Mutex was about 25% faster than Actors. However, I can’t tell you whether to use Mutexes instead of Actors. There are clear trade-offs you must consider between these approaches when making your own decision.

Actors are safer: Swift Concurrency is designed so that threads always make forward progress, which protects actors from the classic lock-based deadlocks, whereas a contended Mutex blocks the waiting thread.

On the other hand, Mutex (and OSAllocatedUnfairLock, if pre-iOS-18) frees you from the tyranny of async API. This makes it easier to use, and avoids the potential foot-gun lurking under each await (some have called these re-entrancy mines).

They’re both basically fast so, as always, I recommend you profile your own code and understand your own context to discover what’s best for your project.

Now let’s look into the other concurrency primitive provided by the Synchronization Framework: Atomic.

Atomic

“Atom” comes from the Greek “atomos”, meaning “indivisible” (uh, about that…). Atomic operations are executed as a single unit, completed without the possibility of interruption.

If you’re familiar with ACID databases, you’ll know atomic operations: transactions running without interruption, completed as a single unit, and where it’s impossible for multiple transactions to modify the same rows simultaneously.

Why are atomic operations useful?

Let’s look at a classic concurrency example — incrementing a number (lots and lots and lots of times, in parallel).

var num = 0

await withTaskGroup(of: Void.self) { group in

for _ in 0..<1_000_000 {

group.addTask {

num += 1

}

}

}

print(num) // 997954

While we expected to count to 1 million, we only reached 997,954.

The += operation might seem unassuming, but it actually includes a few low-level instructions that can easily interleave with so many threads running them in parallel:

1. Load the current value of num from memory, into a CPU register.

2. Perform an arithmetic operation: add 1 to num.

3. Write the new value of num from a CPU register, back into memory.

If you aren’t comfortable with CPUs, registers, instructions, and pipelining, I have a great primer in Through the Ages: Apple CPU Architecture.

This is a classic data race.

In a multi-threaded system, there is no guarantee that these operations will happen in-order between each thread. The read operation of Thread #2 might happen before the write operation of Thread #1. This effectively means that one of their increment operations will be missed from the total.

Atomics are very low-level operations: often backed by the hardware itself as opposed to the relatively high-level operating system construct of locks.

Using an Atomic

Using an Atomic in the Synchronization framework forces these three steps to run together as a contiguous block, and prevents other threads from operating on num until the current operation is done writing.

Let’s see how this looks in code:

import Synchronization

let atomic = Atomic(0)

await withTaskGroup(of: Void.self) { group in

for _ in 0..<1_000_000 {

group.addTask {

atomic.add(1, ordering: .relaxed)

}

}

}

print(atomic.load(ordering: .relaxed)) // 1000000

Because all the operations possible on an Atomic value are, well, atomic, we don’t drop a single addition, and handily count to 1,000,000.

Memory Ordering

If you aren’t familiar with C++ memory ordering semantics*, the ordering property on the add and load operations might appear arcane.

*if I just inadvertently triggered your imposter syndrome, I’d like to make it clear that I had no idea what memory ordering semantics were prior to writing this article.

These specify how the operations synchronise memory. The ordering options form a hierarchy which defines the strictness of the synchronisation mechanism.

- Relaxed guarantees the operation itself is atomic, but adds no ordering constraints on any other memory access.

- An acquiring update syncs up with a previous releasing operation on the same atomic value, ensuring that operations following the acquire in your code can’t be executed before it.

- A releasing update syncs up with subsequent acquiring operations, ensuring that operations before the release in your code can’t be executed after it.

- An acquiring-and-releasing operation applies both of the above acquiring and releasing constraints.

- Sequentially consistent operations give the strongest synchronization guarantee: they apply the acquiring-and-releasing ordering and additionally ensure that all threads observe sequentially consistent operations in the same order.

These trade off between synchronization guarantees and performance, depending on the level of isolation you might want in your program.
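The usual textbook use for the acquire and release pair is publishing a value from one thread to another. Here’s a minimal sketch of that pattern; Mailbox and publishAndRead are hypothetical names, and the reader yields between checks so that it can’t starve the writer.

```swift
import Synchronization

// Hypothetical one-shot mailbox; not part of the framework.
final class Mailbox: Sendable {
    let payload = Atomic(0)
    let ready = Atomic(false)
}

func publishAndRead() async -> Int {
    let box = Mailbox()
    return await withTaskGroup(of: Int?.self, returning: Int.self) { group in
        // Writer: fill in the payload, then publish it with a releasing store.
        group.addTask {
            box.payload.store(42, ordering: .relaxed)
            box.ready.store(true, ordering: .releasing)
            return nil
        }
        // Reader: once the acquiring load observes `true`, the payload
        // write that came before the releasing store is visible too.
        group.addTask {
            while !box.ready.load(ordering: .acquiring) {
                await Task.yield()
            }
            return box.payload.load(ordering: .relaxed)
        }
        var received = 0
        for await value in group {
            if let value { received = value }
        }
        return received
    }
}

let received = await publishAndRead()
print(received) // 42
```

If both orderings on ready were relaxed, the language would no longer guarantee that the reader sees the payload, even if it happened to work on a given machine.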

AtomicRepresentable

Atomics have a separate atomic storage representation. AtomicRepresentable protocol conformance declares a type which has this storage.

Your own types can conform in two ways:

- Using a RawRepresentable type where the RawValue is a standard library AtomicRepresentable type such as Int.

- Defining conformance yourself by defining encoding and decoding for the type’s AtomicRepresentation, which itself needs to resolve to an AtomicRepresentable type.
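The first route is the easy one. As a sketch, here’s an enum backed by Int picking up the conformance just by declaring it; DownloadState is a hypothetical type invented for this example.

```swift
import Synchronization

// Hypothetical state enum; the Int raw value supplies the atomic storage.
enum DownloadState: Int, AtomicRepresentable {
    case idle, running, finished
}

let state = Atomic(DownloadState.idle)
state.store(.running, ordering: .releasing)

// Move to .finished only if the state is still .running.
let (exchanged, original) = state.compareExchange(
    expected: .running,
    desired: .finished,
    ordering: .acquiringAndReleasing
)
let finalState = state.load(ordering: .acquiring)
print(exchanged, original, finalState)
```

compareExchange is handy for small state machines like this one, because the check and the update happen as a single atomic step.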

public protocol AtomicRepresentable {

associatedtype AtomicRepresentation : BitwiseCopyable

/// Destroys a value of `Self` and prepares an `AtomicRepresentation` storage

/// type to be used for atomic operations.

///

static func encodeAtomicRepresentation(_ value: consuming Self) -> Self.AtomicRepresentation

/// Recovers the logical atomic type `Self` by destroying some

/// `AtomicRepresentation` storage instance returned from an atomic operation.

static func decodeAtomicRepresentation(_ storage: consuming Self.AtomicRepresentation) -> Self

}

Atomic Operations

Atomics are low-level constructs, meaning their actual implementation is very close to the metal — instructions etched directly into the ALU chips. Consequently, the base AtomicRepresentable types and operations you can perform are pretty restricted to basic arithmetic.
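A quick sketch of a few of these integer operations in use; each one returns both the old and the new value. The variable names are only illustrative.

```swift
import Synchronization

let value = Atomic(10)

// 10 + 5: oldValue is 10, newValue is 15.
let added = value.wrappingAdd(5, ordering: .relaxed)

// Stores the larger of the current value and 100: 15 becomes 100.
let raised = value.max(100, ordering: .relaxed)

// 100 is 0b0110_0100; masking with 0b0110_0000 leaves 96.
let masked = value.bitwiseAnd(0b0110_0000, ordering: .relaxed)

let current = value.load(ordering: .relaxed)
print(added, raised, masked, current)
```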

extension Atomic where Value == Int {

public func wrappingAdd(_ operand: Int, ordering: AtomicUpdateOrdering) -> (oldValue: Int, newValue: Int)

public func wrappingSubtract(_ operand: Int, ordering: AtomicUpdateOrdering) -> (oldValue: Int, newValue: Int)

public func bitwiseAnd(_ operand: Int, ordering: AtomicUpdateOrdering) -> (oldValue: Int, newValue: Int)

public func bitwiseOr(_ operand: Int, ordering: AtomicUpdateOrdering) -> (oldValue: Int, newValue: Int)

public func bitwiseXor(_ operand: Int, ordering: AtomicUpdateOrdering) -> (oldValue: Int, newValue: Int)

public func min(_ operand: Int, ordering: AtomicUpdateOrdering) -> (oldValue: Int, newValue: Int)

public func max(_ operand: Int, ordering: AtomicUpdateOrdering) -> (oldValue: Int, newValue: Int)

public func add(_ operand: Int, ordering: AtomicUpdateOrdering) -> (oldValue: Int, newValue: Int)

public func subtract(_ operand: Int, ordering: AtomicUpdateOrdering) -> (oldValue: Int, newValue: Int)

}

Atomics vs Actors (vs Mutex)

Let’s see whether Atomic operations are all they’re cracked up to be by benchmarking their various forms of synchronisation against Actors and Mutex.

To protect a simple incrementation operation from concurrent access to its state, actors only need a few lines of code.

actor Incrementor {

var val = 0

func increment() {

val += 1

}

}

Now let’s compare performance of atomics vs actors when counting up to 10 million.

let atomic = Atomic(0)

await processAcrossCores(name: "Relaxed atomic",

total: 10_000_000) { _ in

atomic.add(1, ordering: .relaxed)

}

print(atomic.load(ordering: .relaxed)) // 10,000,000

let actor = Incrementor()

await processAcrossCores(name: "Actor counting") { _ in

await actor.increment()

}

print(await actor.val) // 10,000,000

let mut = Mutex(0)

await processAcrossCores(name: "Mutex counting") { _ in

mut.withLock {

$0 += 1

}

}

mut.withLock { print($0) } // 10,000,000

Here’s the performance, in seconds, for counting to 10 million:

Atomic (relaxed) 7.77

Atomic (acquiring) 8.29

Atomic (releasing) 8.01

Atomic (acq-rel) 7.97

Atomic (seq-con) 7.66

Actor 7.51

Mutex 3.65

Performance between Actors and Atomics was pretty close. It was a little strange that the strictness of the memory ordering had little impact on the speed of operations, but I’ll chalk that up to the fact that we were performing such a simple arithmetic operation.

The real standout here was Mutex blowing the other two approaches out of the water, counting to 10m in less than half the time of the others.

What you should take away from this is that you shouldn’t immediately reach for sequentially-consistent Atomics as a shiny new panacea to make your synchronisation code run 2 milliseconds faster than an actor otherwise might — unless you have a clear use case, the higher-level tools are usually fine.

Conclusion

The Synchronization framework in iOS 18 introduces Mutex and Atomics, low-level primitives to guarantee thread-safe operations. They’re implemented using the generic ownership mechanics introduced with Swift 6.

For some reason, I was also really invested in giving you the etymology behind Atomic and Mutex, but I guess by now you’d find it weird if I didn’t go off on some tangent or other.

These new primitives aren’t likely to be useful to Apple platform devs on the daily. From the Swift Evolution proposal: “Our goal is to enable intrepid library authors and developers writing system level code to start building synchronization constructs directly in Swift.”

Unless you’re writing extremely high performance, down-to-the-bit code, the speed difference you’ll enjoy from using low-level primitives isn’t always the most important factor, and isn’t even guaranteed. When in doubt, high-level tools like actors are safe and work as a reasonable default.

Don’t just reach for shiny new primitives because “they’re faster” / “they’re lower-level” — use the best tool for the job, when appropriate. You won’t know the best tool for the job because a weird beer-themed internet newsletter told you about them. Profile your damn code!

Hi Jacob, I have some comments!

First, locks/mutex do make forward progress. Although they can block a thread, the thread holding the lock makes forward progress and is not waiting on future async work, so the whole thing makes forward progress. This is not the case with semaphores which can be signaled from any thread. That's the big difference.

About your performance tests:

- to be fair to atomics and mutex, I would recommend switching to a synchronous operation in processAcrossCores(), the async operation has a lot of potential overhead

- you can use atomic.wrappingAdd() instead of add() as the latter generates slower code

- you can replace ProcessInfo.processInfo.activeProcessorCount with the actual number of performance cores on your machine, that might give you more interesting results

First, thank you for the in-depth view of Mutex & Atomic.

Just one fix: it looks like the get and set signatures in your cache example are swapped:

get should be:

get(key: Int) -> Int?

set should be:

set(int: Int, key: Int)

Thank you again for the great writing.

P.S. The issue is in the MutexCache

final class MutexCache {

...

func get(int: Int, key: Int) {

cache.withLock {

$0[key]

}

}

...