Customised client-side AI with Apple's CLIP models

Perform Core ML magic, entirely offline

Client-side AI has come a long way since 2017, when Apple released Core ML 1.0. Long before Foundation Models arrived, Core ML had been quietly maturing into a powerful tool for anyone with the know-how to use it.

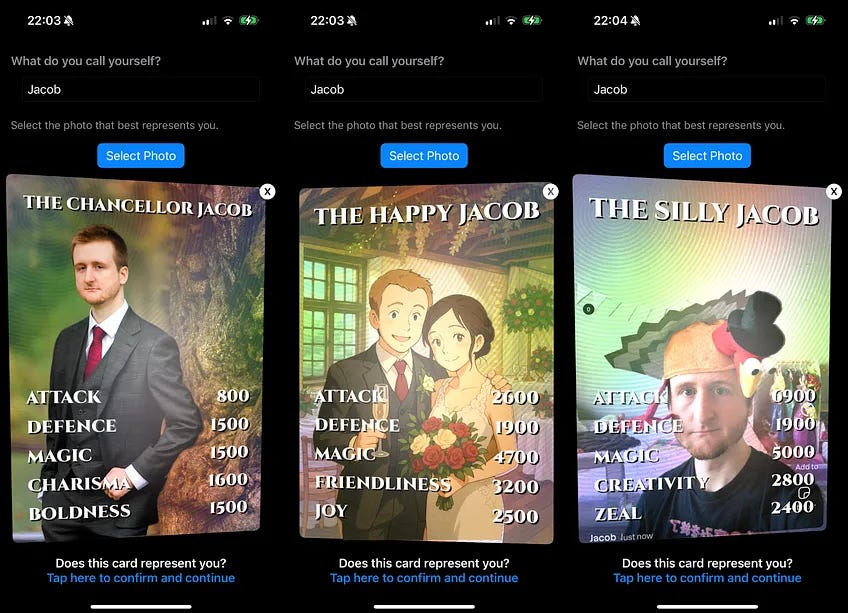

Last week, I unleashed Summon Self on the world: an app that lets you create trading cards out of your friends. Each card gets a fitting name and stats, powered by customised client-side CLIP models. Apple open-sourced these models, which perform zero-shot image classification on your photos.

This tech simply didn’t exist until recently.

Today, we’re going to learn full-stack client-side AI:

Finding the right model for your use case

Downloading the open-source model

Customising the model using Python scripts

Embedding models in your app

Using the Vision framework to process results

These surprisingly simple techniques focus on CLIP, but apply to any Core ML model. From today, you’ll unlock powerful AI features in your own apps while paying $0 for servers.

Apple’s AI Models

Apple has a plethora of oven-ready Core ML models available for you to use in your apps. These include:

FastViT: an image classification model

YOLOv3: an object detection model

Depth Anything V2: a depth estimation model*

*at some point I want to turn this into a spectral ghost camera

But this only scratches the surface. Apple's most interesting models live on Hugging Face, the community where ML professionals and enthusiasts host and share their own models and training datasets.

Among them are the MobileCLIP models, Apple's own efficient take on OpenAI's CLIP neural network, with Core ML versions optimised for iOS.

CLIP Models

CLIP, or Contrastive Language–Image Pretraining, is a neural network developed by OpenAI that connects images with text. It is trained on huge datasets of image-text pairs scraped from the internet.

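CLIP trains two models side by side: an image encoder and a text encoder. Both learn to map their inputs into a shared embedding space, turning each image or piece of text into a vector: a point in 512-dimensional space. Training pulls matching image-text pairs together and pushes mismatched pairs apart, so an image and a caption describing it end up close to each other.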
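To make "close to each other" concrete, here is a minimal Python sketch of cosine similarity, the usual way to compare CLIP embeddings. The toy 4-dimensional vectors are made-up stand-ins for real 512-dimensional encoder outputs, not values produced by MobileCLIP.

```python
import math
from typing import List, Sequence


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so a dot product becomes a cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalise a zero vector")
    return [x / norm for x in vec]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embeddings: 1.0 means identical direction."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    a_unit, b_unit = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a_unit, b_unit))


# Toy 4-dimensional vectors standing in for real 512-dimensional embeddings.
image_embedding = [0.9, 0.1, 0.0, 0.2]
matching_caption = [0.8, 0.2, 0.1, 0.1]
unrelated_caption = [0.0, 0.9, 0.8, 0.0]

print(round(cosine_similarity(image_embedding, matching_caption), 3))
print(round(cosine_similarity(image_embedding, unrelated_caption), 3))
```

Normalising to unit length first means the score depends only on direction, not magnitude, which is what "nearby in embedding space" means here.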

This shared space enables zero-shot image classification: the model can sort images into categories it was never explicitly trained on, simply by comparing an image's embedding with the text embeddings of candidate labels, and it gets pretty good results.
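Here is a minimal sketch of zero-shot classification built on that idea, assuming you already have one image embedding and one text embedding per candidate label. The softmax temperature (`logit_scale=100.0`) is an assumption modelled on the scale OpenAI's released CLIP models use; the labels and vectors are hypothetical toy data.

```python
import math
from typing import Dict, Mapping, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_classify(
    image_embedding: Sequence[float],
    label_embeddings: Mapping[str, Sequence[float]],
    logit_scale: float = 100.0,  # assumed temperature, modelled on CLIP's learned scale
) -> Dict[str, float]:
    """Return a probability per label via a softmax over scaled cosine similarities."""
    if not label_embeddings:
        raise ValueError("need at least one candidate label")
    labels = list(label_embeddings)
    logits = [
        logit_scale * cosine_similarity(image_embedding, label_embeddings[label])
        for label in labels
    ]
    # Subtract the max logit before exponentiating for numerical stability.
    max_logit = max(logits)
    exps = [math.exp(z - max_logit) for z in logits]
    total = sum(exps)
    return {label: e / total for label, e in zip(labels, exps)}


# Hypothetical toy embeddings; real ones would come from the MobileCLIP encoders.
labels = {
    "a photo of a dog": [1.0, 0.0, 0.0],
    "a photo of a cat": [0.0, 1.0, 0.0],
    "a photo of a car": [0.0, 0.0, 1.0],
}
probabilities = zero_shot_classify([0.9, 0.1, 0.0], labels)
best_label = max(probabilities, key=probabilities.get)
print(best_label, round(probabilities[best_label], 3))
```

Because the categories are just text, changing them means re-encoding a few strings rather than retraining anything.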

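Apple's MobileCLIP models are trained using DataCompDR, a "reinforced" version of the DataComp dataset: high-quality image-text pairs augmented with synthetic captions and embeddings from larger teacher models. It comes in a 12-million-pair variant (DataCompDR-12M) as well as a much larger one. That extra knowledge helps a fast, relatively small model reach good accuracy, which is exactly what you want for efficient performance on mobile devices.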

In fact, Apple created four MobileCLIP model pairs, which vary in size and accuracy: S0, S1, S2, and B(LT).

Frankly, though, I've only had great results with the largest model, MobileCLIP B(LT). It powered the card names and stats in Summon Self, my latest indie project, where I combined it with Vision processing and SwiftUI Metal shaders to mint trading cards you can collect and trade with your friends. Read my full write-up here.

MobileCLIP B(LT) works like magic, but it also saddles you with a 173 MB image model: in Summon Self, it accounted for 95% of the app size!

First, I'll demonstrate how to generate text embeddings on your computer by running the text model from a simple Python script. Next, we'll use the Vision framework to process images and classify them on-device.
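The offline half of this workflow might look like this minimal sketch: run every label through the text encoder once, normalise the vectors, and serialise them as JSON to bundle with the app. `encode_text` and `fake_encode_text` are hypothetical stand-ins for however you actually invoke the MobileCLIP text model in Python, the labels are made up, and the "a photo of {}" prompt template is an assumption borrowed from common CLIP practice, not necessarily what Summon Self uses.

```python
import json
import math
from typing import Callable, Dict, Iterable, List, Sequence


def build_label_embeddings(
    labels: Iterable[str],
    encode_text: Callable[[str], Sequence[float]],
    prompt_template: str = "a photo of {}",  # assumed CLIP-style prompt
) -> Dict[str, List[float]]:
    """Encode each label once and store its unit-length embedding."""
    embeddings: Dict[str, List[float]] = {}
    for label in labels:
        vec = list(encode_text(prompt_template.format(label)))
        norm = math.sqrt(sum(x * x for x in vec))
        if norm == 0:
            raise ValueError(f"zero embedding for label {label!r}")
        embeddings[label] = [x / norm for x in vec]
    return embeddings


def fake_encode_text(text: str) -> List[float]:
    """Hypothetical stand-in for the MobileCLIP text encoder.

    Produces a deterministic 8-dimensional toy vector from the characters;
    it is NOT a meaningful embedding, just something to exercise the pipeline.
    """
    vec = [1.0] * 8
    for i, ch in enumerate(text):
        vec[(ord(ch) + i) % 8] += 1.0
    return vec


label_embeddings = build_label_embeddings(["wizard", "knight", "dragon"], fake_encode_text)
# In a real project you would write this JSON to a file and add it to your app bundle.
payload = json.dumps(label_embeddings)
print(len(payload), "characters of JSON for", len(label_embeddings), "labels")
```

Shipping precomputed label embeddings means the app only needs the image encoder at runtime, which is one way to avoid bundling the text model at all.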

If you also bundle the text model, you can offer user-customisable categories whose text embeddings are generated on the fly. One of the most exciting applications of this is image search: as a user types what they want to see, their text embedding is matched against the closest image embeddings in real time.
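Image search reduces to a nearest-neighbour ranking: embed the query text, then sort precomputed image embeddings by cosine similarity. This Python sketch assumes image embeddings were computed earlier (for example, when photos were imported) and uses made-up file names and toy 3-dimensional vectors. A brute-force scan like this is fine for a personal photo library; very large collections would call for an approximate nearest-neighbour index.

```python
import heapq
import math
from typing import Dict, List, Mapping, Sequence, Tuple


def normalize(vec: Sequence[float]) -> List[float]:
    """Return a unit-length copy of vec."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalise a zero vector")
    return [x / norm for x in vec]


def build_index(image_embeddings: Mapping[str, Sequence[float]]) -> Dict[str, List[float]]:
    """Normalise every image embedding once, up front, so search is just dot products."""
    return {image_id: normalize(vec) for image_id, vec in image_embeddings.items()}


def search_images(
    query_embedding: Sequence[float],
    index: Mapping[str, Sequence[float]],
    top_k: int = 3,
) -> List[Tuple[str, float]]:
    """Brute-force ranking of images by cosine similarity to the query text embedding."""
    query = normalize(query_embedding)
    scored = (
        (sum(q * x for q, x in zip(query, vec)), image_id)
        for image_id, vec in index.items()
    )
    return [(image_id, score) for score, image_id in heapq.nlargest(top_k, scored)]


# Hypothetical photo library with toy 3-dimensional embeddings.
index = build_index({
    "beach.jpg": [0.9, 0.1, 0.0],
    "dog.jpg": [0.1, 0.9, 0.1],
    "city.jpg": [0.0, 0.2, 0.9],
})
# Pretend this is the text embedding of what the user has typed so far.
typed_query = [0.2, 1.0, 0.0]
for image_id, score in search_images(typed_query, index):
    print(f"{image_id}: {score:.3f}")
```

As the user types, you would re-encode the query and call `search_images` again; since the image embeddings never change, only one text-encoder pass runs per update.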

Once you know how to do this with MobileCLIP, you can apply the same technique to any of Apple's free Core ML models, unlocking powerful client-side AI features in your own apps.

Subscribe to Jacob’s Tech Tavern for free to get ludicrously in-depth articles on iOS, Swift, tech, & indie projects in your inbox every week.
