How I Stole Your ChatGPT API Keys

Security fundamentals for the front-end

Subscribe to Jacob’s Tech Tavern for free to get ludicrously in-depth articles on iOS, Swift, tech, & indie projects in your inbox every week.

Full subscribers unlock Quick Hacks, my advanced tips series, and enjoy my long-form articles 3 weeks before anyone else.

The AI gold-rush is in full swing.

And in a gold rush, baddies will steal shovels.

One deadly mistake is commonplace: bundling ChatGPT API keys directly into your shiny new AI app. This makes it trivial to steal your keys, burn through your credits, and rack up painful bills.

Today, I’ll explain a fundamental Security Law:

Don’t store API keys on the client.

Like, ever.

We’ll look at the two main ways bad actors can sniff out your API keys, then learn how you can avoid this fate.

Decompiling Your App

If you’re storing API keys in your app, you might employ various protection mechanisms, with varying levels of sophistication. I’ll go through the most popular approaches in detail, and demonstrate how easy they are to circumvent.

Raw Strings in code

This is usually the first approach engineers take, prior to becoming securitypilled. When requesting data from an API, you’ll write something like this:

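```swift
import Foundation

func callChatGPTAPI(prompt: String) async throws -> Data {
    let url = URL(string: "https://api.openai.com/v1/chat/completions")!
    var request = URLRequest(url: url)
    request.httpMethod = "POST"
    // The key is a string literal, so it is compiled straight into the binary.
    let apiKey = "12345678-90ab-cdef-1234-567890abcdef"
    request.addValue("Bearer \(apiKey)", forHTTPHeaderField: "Authorization")
    // (Encoding `prompt` into the JSON body is omitted for brevity.)
    return try await URLSession.shared.data(for: request).0
}
```

This code is compiled and delivered to users in the form of an .app bundle, which contains all of the app’s executable code.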

I’ve written before about how easy it is to get access to the .ipa file of any app on the App Store, which contains this .app bundle. This is the first port of call for bad actors looking to steal your keys.

If you want some light reading, you can check out my article “Impress at Job Interviews by Inspecting their App Bundle” now!

Following that approach, it’s trivial to get into the .app bundle. It’s just a folder, containing the compiled executable alongside the Info.plist and the app’s other resources.

The real juice lies inside Bev, the UNIX executable for my trusty, boozy side project. This contains all the compiled and linked code for my app.

With a built-in UNIX command, strings, we can inspect every raw string compiled into this executable.

```shell
strings Bev
```

Naturally, the output is pretty verbose.

As well as raw strings, you’ll also see a ton of symbol names for your compiled functions.

But near the bottom of this list, we see our prize:

```
12345678-90ab-cdef-1234-567890abcdef
```

The raw string for our API key, out in the open and easily searchable by any villain handy with a regex.

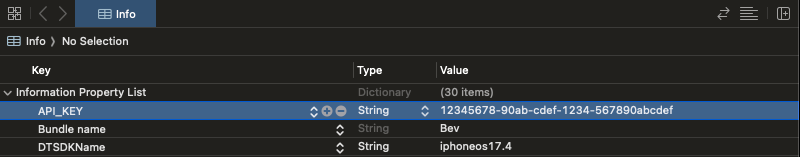
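To make “handy with a regex” concrete, here’s a rough Swift sketch of what an attacker might run over the extracted executable. It’s a simplified stand-in for strings (runs of at least four printable ASCII bytes) followed by a regex for UUID-shaped values. Real keys come in other shapes (OpenAI’s start with sk-), so the pattern is an assumption tailored to our example key, and printableRuns and findKeyLikeStrings are hypothetical helper names.

```swift
import Foundation

/// A rough stand-in for the UNIX `strings` tool: returns every run of
/// printable ASCII bytes that is at least `minLength` characters long.
func printableRuns(in data: Data, minLength: Int = 4) -> [String] {
    var runs: [String] = []
    var current: [UInt8] = []
    for byte in data {
        if byte >= 0x20 && byte < 0x7F {
            current.append(byte)
        } else {
            if current.count >= minLength {
                runs.append(String(decoding: current, as: UTF8.self))
            }
            current.removeAll()
        }
    }
    // Flush a run that reaches the end of the file.
    if current.count >= minLength {
        runs.append(String(decoding: current, as: UTF8.self))
    }
    return runs
}

/// Filters those runs for anything shaped like our UUID-style example key.
func findKeyLikeStrings(in data: Data) -> [String] {
    let pattern = "[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}"
    let regex = try! NSRegularExpression(pattern: pattern)
    return printableRuns(in: data).flatMap { run -> [String] in
        let range = NSRange(run.startIndex..., in: run)
        return regex.matches(in: run, range: range).compactMap { match in
            Range(match.range, in: run).map { String(run[$0]) }
        }
    }
}
```

Pointed at something like Payload/Bev.app/Bev (a hypothetical path inside the unzipped .ipa) via Data(contentsOf:), this should surface the same key that strings found, minus all the symbol noise.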

Info.plist

You might think to yourself, okay, I’m not going to put raw strings into my app where they can be easily decompiled.

So I’ll put them in the Info.plist where they’re meant to be.

Retrieving this value makes your API call site a little more complex:

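```swift
import Foundation

func callChatGPTAPI(prompt: String) async throws -> Data {
    let url = URL(string: "https://api.openai.com/v1/chat/completions")!
    var request = URLRequest(url: url)
    request.httpMethod = "POST"
    // getAPIKeyPlist() returns an optional, so unwrap it before use.
    guard let apiKey = getAPIKeyPlist() else {
        throw URLError(.userAuthenticationRequired)
    }
    request.addValue("Bearer \(apiKey)", forHTTPHeaderField: "Authorization")
    return try await URLSession.shared.data(for: request).0
}

// Reads the API_KEY entry from the app's Info.plist at runtime.
func getAPIKeyPlist() -> String? {
    Bundle.main.object(forInfoDictionaryKey: "API_KEY") as? String
}
```

There are a few sub-levels of sophistication here.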

Rather than directly hardcoding keys, you might have multiple sets of keys, for development and prod. You can create .xcconfig files for each environment, which resolve to values in the Info.plist at build time.

If you’re a true security zealot, you’ll know never to commit secrets into source control. Perhaps you store your API keys securely on your CI/CD pipeline, injecting them into .xcconfig or Info.plist as part of your build scripts.

But all this is for naught.

Let’s take one more look at that .app folder, available to anyone with a MacBook, an iPhone, and too much time on their hands. Sitting right there at the root of the bundle is the Info.plist, API key and all.

Ruh-roh.

Regardless of how they find their way into the Info.plist, these values are publicly available.
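To underline how little effort this takes: once the .ipa is unzipped, reading the key back out is a few lines of Foundation. A minimal sketch, assuming the key lives under the API_KEY entry used above; readPlistValue is a hypothetical helper name, and PropertyListSerialization handles both the XML and binary plist formats a build may produce.

```swift
import Foundation

/// Reads a single string value out of a plist file on disk, the way anyone
/// who has unzipped your .ipa can. Works for XML and binary plists.
func readPlistValue(at plistURL: URL, key: String) throws -> String? {
    let data = try Data(contentsOf: plistURL)
    let plist = try PropertyListSerialization.propertyList(from: data, options: [], format: nil)
    // Info.plist is a top-level dictionary; anything else yields nil.
    return (plist as? [String: Any])?[key] as? String
}
```

Called with URL(fileURLWithPath: "Payload/Bev.app/Info.plist") (a hypothetical path) and "API_KEY", it hands back the key as a plain String, no matter which build-time route put it there.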

Obfuscated Strings

If you listen uncritically to everything you read on the internet, you might think you’re slick by obfuscating your strings. That’s certainly what I thought in 2019 when I first decided to be the resident security expert.

You can get very fancy with your obfuscation algorithm and your salting, but let’s be real: you’re going to ask ChatGPT to write a pair of functions to obfuscate and decode your keys. You might as well use up those credits before they get nicked.

```swift
import Foundation

// XORs each byte of the key with the matching byte of the salt, then
// base64-encodes the result so it can live in a string literal.
// Note: zip stops at the shorter input, so keys longer than the salt are truncated.
private func obfuscate(key: String) -> String? {
    let salt = "00000000-0000-0000-0000-000000000000"
    guard let keyData = key.data(using: .utf8),
          let saltData = salt.data(using: .utf8) else {
        return nil
    }
    let obfuscatedData = zip(keyData, saltData).map { $0 ^ $1 }
    let obfuscatedBase64 = Data(obfuscatedData).base64EncodedString()
    return obfuscatedBase64
}

// Reverses the process: base64-decode, then XOR with the same salt.
private func decode(obfuscated: String) -> String? {
    let salt = "00000000-0000-0000-0000-000000000000"
    guard let obfuscatedData = Data(base64Encoded: obfuscated),
          let saltData = salt.data(using: .utf8) else {
        return nil
    }
    let apiKeyData = zip(obfuscatedData, saltData).map { $0 ^ $1 }
    return String(bytes: apiKeyData, encoding: .utf8)
}
```

Getting the key at runtime is as simple as decoding the raw obfuscated string.

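```swift
import Foundation

func callChatGPTAPI(prompt: String) async throws -> Data {
    let url = URL(string: "https://api.openai.com/v1/chat/completions")!
    var request = URLRequest(url: url)
    request.httpMethod = "POST"
    // decode(obfuscated:) returns an optional, so unwrap it before use.
    guard let apiKey = getAPIKeyObfuscated() else {
        throw URLError(.userAuthenticationRequired)
    }
    request.addValue("Bearer \(apiKey)", forHTTPHeaderField: "Authorization")
    return try await URLSession.shared.data(for: request).0
}

// The obfuscated blob is still a plain string literal in the binary.
func getAPIKeyObfuscated() -> String? {
    decode(obfuscated: "AQIDBAUGBwgACQBRUgBTVFVWAAECAwQABQYHCAkAUVJTVFVW")
}
```

While it’s a little harder to read than raw strings, it’s still straightforward for a sufficiently motivated hacker to steal your keys.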

If you use an insecure approach such as base64 encoding or XORing, it’s trivial to reverse engineer, especially when the salt is compiled into the same binary. Even with a decent algorithm and salt, attackers can use tools like Frida to extract your API keys from device memory at runtime.
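Here’s roughly why XOR with a bundled salt buys so little. The base64 blob and the salt are both 36-character string literals, so the same strings dump from earlier surfaces both of them. An attacker doesn’t even need to know which is which: they can try every pairing and keep whatever decodes to printable text. A hypothetical sketch of that brute force (recoverXORedSecrets is an illustrative name), fed with the blob and salt from the example above:

```swift
import Foundation

/// Attack sketch: given strings dumped from a binary, try every
/// (base64 blob, salt) pairing and keep results that decode to printable ASCII.
func recoverXORedSecrets(from candidates: [String]) -> [String] {
    var recovered: [String] = []
    for blob in candidates {
        // Only strings that are valid base64 can be the obfuscated blob.
        guard let cipher = Data(base64Encoded: blob), !cipher.isEmpty else { continue }
        for salt in candidates where salt != blob {
            let saltBytes = Array(salt.utf8)
            // The salt must cover every byte of the blob.
            guard saltBytes.count >= cipher.count else { continue }
            let plain = zip(cipher, saltBytes).map { $0 ^ $1 }
            if plain.allSatisfy({ $0 >= 0x20 && $0 < 0x7F }) {
                recovered.append(String(decoding: plain, as: UTF8.self))
            }
        }
    }
    return recovered
}
```

The nested loop is quadratic in the number of strings, which is nothing for a modern laptop chewing through one app’s strings output.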

Obfuscation provides security by obscurity, but this isn’t an effective approach. No matter how good your obfuscation is, that API key will eventually be decoded and sent across the network.

This leads us to the second, potentially far deadlier, method of stealing API keys.

Monitoring Network Traffic

This is one of the most powerful techniques at your disposal.

Tools like Proxyman (or Charles Proxy if you’re an Original Gangster) allow you to inspect all the network traffic for an app or website.

Within seconds of launching the app, we spot the auth header containing our API key in a network request:

```
12345678-90ab-cdef-1234-567890abcdef
```

Because I’m strange, this is something else I like to do when I’m about to start a new job: inspect some network traffic to find out whether they’re a REST shop or a GraphQL house.

Mitigating Proxying

It’s possible to use techniques such as SSL pinning to mitigate this attack vector. SSL pinning checks the TLS certificates presented by backend servers to verify they match certificates bundled on-device. This prevents man-in-the-middle attacks, and means connections fail when a traffic-monitoring tool like Proxyman presents its own root certificate.

To implement SSL pinning on iOS, you can give your URLSession a delegate conforming to URLSessionDelegate that implements urlSession(_:didReceive:completionHandler:). Inside it, you can use the Security framework’s SecTrustCopyCertificateChain to compare the server’s certificates with your pinned copy.
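A minimal sketch of that delegate, assuming iOS 15 / macOS 12 or later (when SecTrustCopyCertificateChain arrived) and that you’ve bundled the DER-encoded certificate(s) yourself; PinningDelegate and chainContainsPinnedCertificate are hypothetical names. It pins whole certificates, which is one strategy among several (pinning a hash of the public key is another common one). The byte comparison is split out so it can be read and tested on its own.

```swift
import Foundation
#if canImport(Security)
import Security
#endif

/// True if any certificate in the server's chain is byte-for-byte identical
/// (DER encoding) to one of the certificates shipped with the app.
func chainContainsPinnedCertificate(serverChain: [Data], pinned: [Data]) -> Bool {
    let pinnedSet = Set(pinned)
    return serverChain.contains { pinnedSet.contains($0) }
}

#if canImport(Security)
@available(iOS 15.0, macOS 12.0, *)
final class PinningDelegate: NSObject, URLSessionDelegate {
    private let pinnedCertificates: [Data]  // DER data you bundled yourself

    init(pinnedCertificates: [Data]) {
        self.pinnedCertificates = pinnedCertificates
    }

    func urlSession(
        _ session: URLSession,
        didReceive challenge: URLAuthenticationChallenge,
        completionHandler: @escaping (URLSession.AuthChallengeDisposition, URLCredential?) -> Void
    ) {
        // Only intercept server-trust (TLS) challenges.
        guard challenge.protectionSpace.authenticationMethod == NSURLAuthenticationMethodServerTrust,
              let trust = challenge.protectionSpace.serverTrust else {
            completionHandler(.performDefaultHandling, nil)
            return
        }
        // Run the system's normal validation first, then grab the chain.
        var error: CFError?
        guard SecTrustEvaluateWithError(trust, &error),
              let chain = SecTrustCopyCertificateChain(trust) as? [SecCertificate] else {
            completionHandler(.cancelAuthenticationChallenge, nil)
            return
        }
        let chainData = chain.map { SecCertificateCopyData($0) as Data }
        if chainContainsPinnedCertificate(serverChain: chainData, pinned: pinnedCertificates) {
            completionHandler(.useCredential, URLCredential(trust: trust))
        } else {
            // e.g. a proxy's root certificate: refuse the connection.
            completionHandler(.cancelAuthenticationChallenge, nil)
        }
    }
}
#endif
```

You’d attach it with URLSession(configuration: .default, delegate: PinningDelegate(pinnedCertificates: [yourCertData]), delegateQueue: nil), where yourCertData is a placeholder. Pin certificates you control, and consider shipping a backup, so a rotation you didn’t plan for doesn’t take your app offline.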

But, you guessed it, SSL pinning isn’t necessarily all it’s cracked up to be. Reverse engineers can use tools to bypass pinning, which is trivial on jailbroken devices.

Plus, when you don’t know exactly what you’re doing, SSL pinning can be risky.

When I was a relatively junior engineer with a lot to prove, I naïvely implemented SSL pinning against the Cognito root certificate owned by AWS.

Naturally, AWS rotated the certificate that same week.

To make a long story short, the UK’s COVID-19 testing centres became inoperative for the longest 59 minutes of my life.

How can I protect my app?

I hope by now you’ve internalised our main lesson: protecting client-side API keys is largely security theatre. Your API keys will never be perfectly secure if you’re bundling them into your publicly-distributed app.

Now that we know what not to do, what can we do?

While the client side is inherently insecure, it’s far more straightforward to keep your backend safe from bad actors. The “textbook” approach to securing third-party API keys is to store them in a (rotating) secrets manager and proxy network requests via your backend.
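On the client, the change is small: the app authenticates to your own backend with a per-user credential, and the ChatGPT key never ships in the binary. A minimal sketch, assuming a hypothetical endpoint at https://api.example.com/v1/chat and a session token issued by your own sign-in flow; makeBackendChatRequest is an illustrative name.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking
#endif

/// Builds a request to *your* backend. The app only holds a per-user
/// session token; the ChatGPT key stays on the server.
func makeBackendChatRequest(prompt: String, sessionToken: String) throws -> URLRequest {
    // Hypothetical endpoint on your own infrastructure.
    var request = URLRequest(url: URL(string: "https://api.example.com/v1/chat")!)
    request.httpMethod = "POST"
    request.setValue("Bearer \(sessionToken)", forHTTPHeaderField: "Authorization")
    request.setValue("application/json", forHTTPHeaderField: "Content-Type")
    request.httpBody = try JSONSerialization.data(withJSONObject: ["prompt": prompt])
    return request
}
```

You’d send it as before, with URLSession.shared.data(for:). A stolen session token still lets someone call your endpoint, but you can rate-limit, revoke, or ban it per user, which you can’t do with a leaked API key.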

With this approach, you can authenticate network requests using your own systems, which shrinks the attack surface considerably.
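The server side of that proxy can be sketched just as briefly. This version is framework-agnostic and rests on a few assumptions: isValidSession stands in for whatever auth check your backend uses, the key has been injected into the server’s environment as OPENAI_API_KEY by your secrets manager or deploy pipeline, and gpt-4o-mini is just a placeholder model name. The upstream call targets OpenAI’s Chat Completions endpoint with a Bearer token.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking
#endif

enum ProxyError: Error, Equatable {
    case unauthorized
    case missingServerKey
}

/// Server-side half of the proxy: reject unknown callers, then attach the
/// server-held key to the upstream request.
func makeUpstreamRequest(
    prompt: String,
    sessionToken: String,
    isValidSession: (String) -> Bool,  // hypothetical hook into your own auth
    environment: [String: String] = ProcessInfo.processInfo.environment
) throws -> URLRequest {
    guard isValidSession(sessionToken) else { throw ProxyError.unauthorized }
    // Assumed to be injected by a secrets manager at deploy time.
    guard let apiKey = environment["OPENAI_API_KEY"], !apiKey.isEmpty else {
        throw ProxyError.missingServerKey
    }
    var request = URLRequest(url: URL(string: "https://api.openai.com/v1/chat/completions")!)
    request.httpMethod = "POST"
    request.setValue("Bearer \(apiKey)", forHTTPHeaderField: "Authorization")
    request.setValue("application/json", forHTTPHeaderField: "Content-Type")
    let body: [String: Any] = [
        "model": "gpt-4o-mini",  // placeholder model name
        "messages": [["role": "user", "content": prompt]]
    ]
    request.httpBody = try JSONSerialization.data(withJSONObject: body)
    return request
}
```

Because the key only ever exists in the server’s environment, rotating it is a deploy rather than an App Store release.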

If you aren’t quite rolling your own infrastructure yet, Firebase Cloud Functions offer a simpler way to implement this middleware layer.

While we’re at it, you can add a few more layers of protection to your app through tools like App Attest on iOS, Play Integrity on Android, and RASP (runtime application self-protection) libraries. App Attest and Play Integrity let your backend check that requests come from a genuine, unmodified copy of your app, while RASP libraries detect jailbroken devices and reverse-engineering tooling at runtime.

A small warning: if you’re rolling Firebase, I’ve personally had big problems with App Attest. Maybe they’ve fixed it, but as a Brit I will never pass up an opportunity to grumble.

If you’re keen to learn more about protecting your app at runtime, tune in next week for an article exclusive to my paid subscribers: Jailbreak Protection on iOS.

Conclusion

Don’t store API keys on the client.

Like, ever.

Anybody telling you that your keys are safe on the client is either foolish or malevolent.

Anything bundled into your app can be inspected. API keys in raw strings and Info.plist files are trivial to find, and more elaborate approaches like obfuscation are still vulnerable to motivated attackers or jailbroken devices.

Network proxying is a powerful tool for both good and evil. Approaches like SSL pinning can help protect against proxies, but may prove more trouble than they’re worth if you don’t know what you’re doing.

Client-side protection mechanisms such as app integrity checking or reverse-engineering-detection libraries can help to layer protection onto your app or website.

If you want to keep your API keys (reasonably) safe, keep them on the backend, whether that’s your own infrastructure or a product such as Firebase Cloud Functions. You can sleep easy knowing your API keys are secure. Probably.

Jacob’s Tech Tavern accepts no liability if you rack up a $5,000 ChatGPT invoice or Firebase bill after following my advice.
