The Great Connection Pool Meltdown

A war story from the frontlines at Tuist

Welcome to my first edition of War Stories, where I partner with famous builders in the iOS space to share real-life stories from the frontlines as they scale their projects.

Today I’m working with Pedro Piñera Buendia, the creator of Tuist, which is designed to fix the problems shared by iOS teams as they scale Xcode projects. I have not been paid to endorse Tuist, but I do really like it.

I’m still working out the format here, so please be patient whenever I accidentally switch between first and third person. It’s part narrative, part interview, all swashbuckling fun.

To catch all my War Stories, be sure to subscribe:

Without further ado, here’s The Great Connection Pool Meltdown.

Hard Things

Like many readers of Jacob’s Tech Tavern, the Tuist guys are iOS developers turned founders. Web infra at scale abruptly switches from an interview buzzword to cold reality. This is one of those Hard Things™ that we are often shielded from when painting JSON on the client-side.

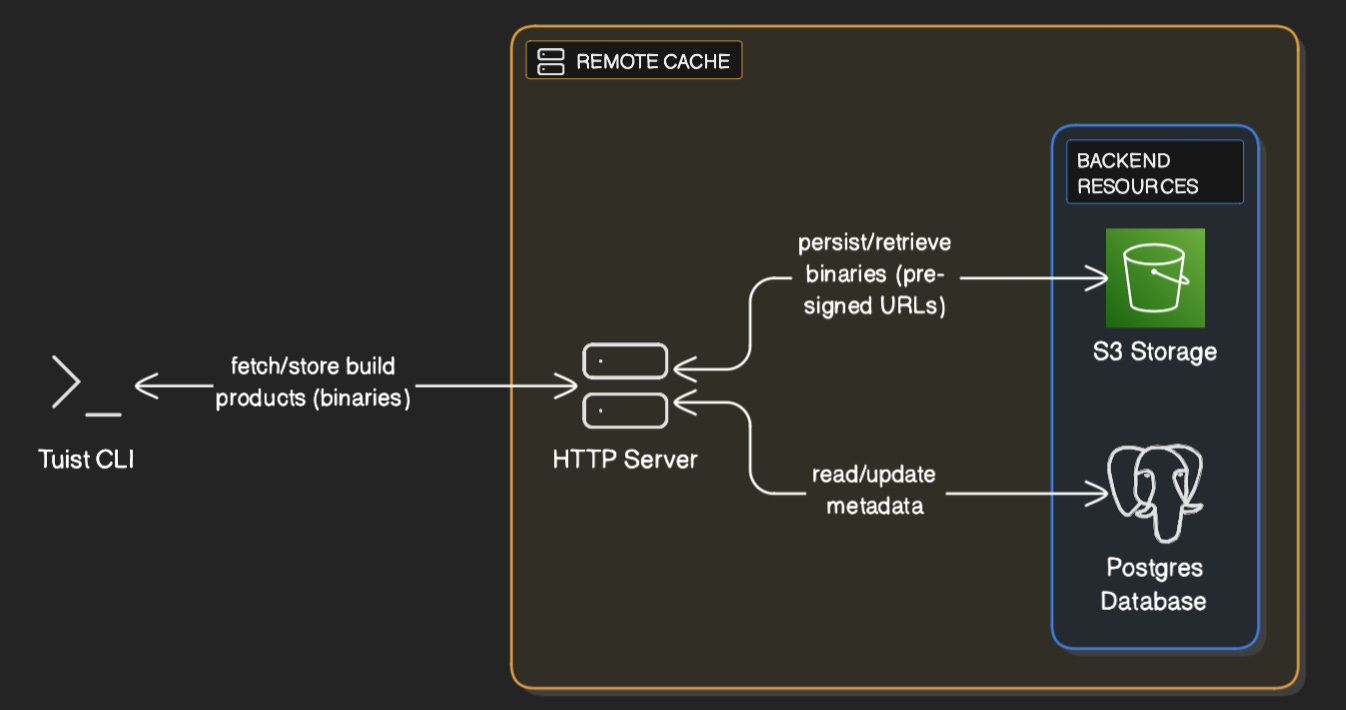

One of Tuist’s core features is caching. It builds xcframeworks from each module and caches them remotely, which dramatically cuts cold build time for Xcode projects, both locally and on CI (Tuist newly integrates with Xcode 26 Compilation Caching to make it even faster).

To power a cache, you need a server. The Tuist caching server is coded in Elixir, which compiles down to Erlang bytecode and runs on the Erlang runtime. It’s famous for being great at building highly parallel, real-time services.

Tuist’s code is actually open-source if you’re interested.

The HTTP cache server processes traffic from organisations with hundreds of devs, with bursts of traffic every time a project is freshly generated. This can rack up ~100k requests per hour. Given an average xcframework module can be 5-10 MB, adds up to (approximately) a metric f*ckton of traffic.

This was all well and good, but strange errors began 6 months ago.

The iOS Dev’s Blind Spot

We are coddled by the Apple ecosystem. URLSession does a lot under the hood like HTTP/2 multiplexing, interfacing with system daemons that manage the radio, and maintaining an internal TCP connection pool.

This stuff will make a pretty good article actually, I’ll add it to my list.

Building on the server means you must keep TCP connections warm using a connection pool, or your users will suffer cold starts, leading to slow response times.

A caching server is a high-throughput environment, but when you’re linked to CI builds, the access pattern is actually very spiky. Every organisation onboarded to Tuist adds thousands of sporadic requests to the server as devs sprint to generate projects and merge PRs.

This leads to unpredictable surges in traffic on the server. These could queue up thousands of operations waiting to be executed, from DB queries to Postgres (fetching cache metadata), to external GET requests to Amazon S3 storage (where the xcframeworks are stored).

Each millisecond of latency counts on a cache server. You can optimise your Elixir code as much as you like, but there’s no getting around “fixed costs” like disk I/O on the database or the network latency when fetching from external storage.

If you can serve these requests in time, great.

If not, bad things can happen.

The sh*t hits the fan

The early warning signs were subtle at first.

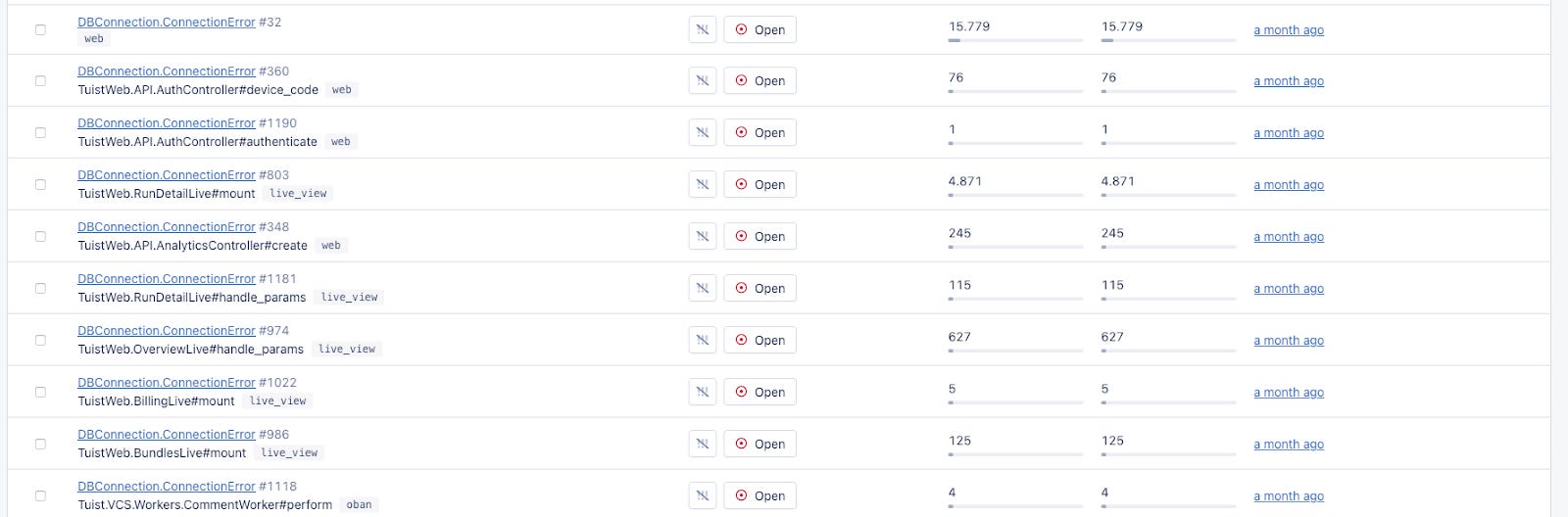

Daily connection drop warnings were flagged from the server, because they sat in the queue too long. Users weren’t complaining, since the client would just retry failed connections, but it was annoying to begin every workday like this:

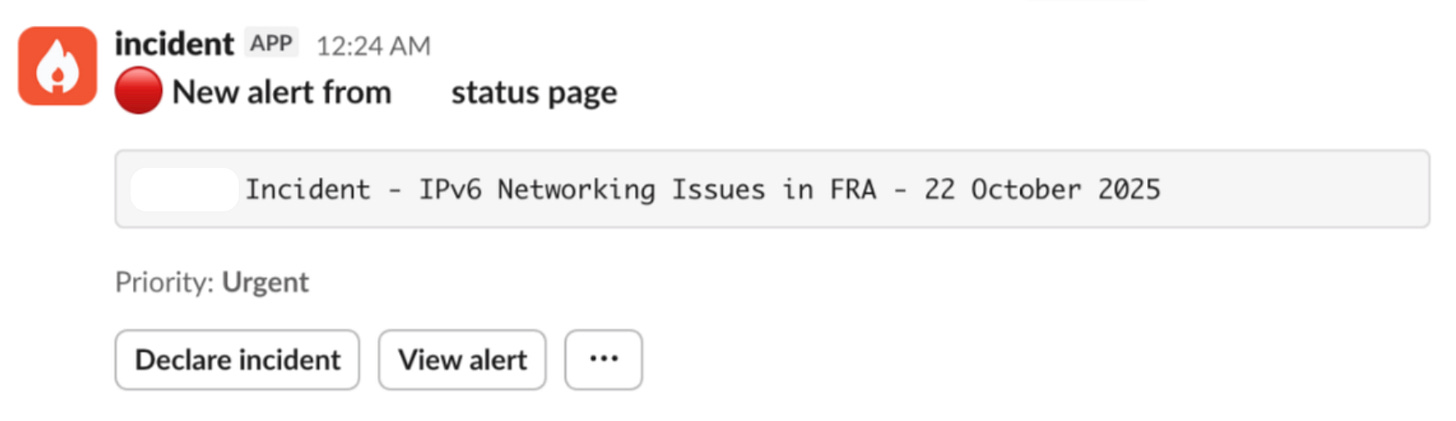

The team began to talk to their cloud provider (a popular wrapper who will remain nameless) about potential network issues in their caching server instance, but they would mostly push back and blame Pedro’s config.

The cloud provider doth protest too much, methinks.

One day, things got real bad. Contagion. The network issue spread out across the database provider and their S3 storage, proving it had nothing to do with app configuration.

All of a sudden, the server crashed.

It was a particularly high-traffic spike that toppled the service. The Tuist guys spun up the server again. It went down again.

The cloud provider’s network issue was failing to process many of the S3 requests that fetched cached xcframeworks. The failing requests sat around in the queue, waiting to retry. This hogged connection pool slots, wasted CPU cycles, and rapidly filled up application memory until the server crashed again.

The CLI client kept retrying with exponential backoff, so the server immediately got hit with thousands of requests each time it recovered.

At best, users didn’t notice much, because the Tuist client fell back to locally-cached modules. At worst, this incident manifested as time-outs on cache fetches, with the client URLSessionConfiguration set up to kill sessions after 5 minutes. This blocked CI builds and newly-onboarding developers who needed to fetch from the cache.

For what it’s worth, I’ve used Tuist at work and their team responds (and usually unblocks us) very fast on Slack anytime there’s a problem.

Firefighting mode.

Well, mostly.

Pedro was in a taxi to the hospital for surgery* as this all went down, but the 3-strong engineering team coordinated the response over Slack.

*Sick days are one of the casualties of becoming a founder 🥲

The team bought themselves time by upgrading their server instance to a chunkier one with more memory.

With a minute to breathe, they found a straightforward change to the queue configuration that let it fail fast instead of re-queueing infinitely and clogging up memory. Basically: “if network issues happen again, cascade them down to the client instead of re-queueing on the server”.

Here’s what the Postgres DB config looked like in production with this fix; check out the pool_size and queue_target parameters.

[

ssl: [

server_name_indication: ~c"db.tuist.dev",

verify: :verify_none

],

pool_size: 40,

queue_target: 200,

queue_interval: 2000,

database: "cache_db",

username: "pedro",

password: "123456", # *

hostname: "db.tuist.dev",

port: 5432,

socket_options: [keepalive: true],

parameters: [

tcp_keepalives_idle: "60",

tcp_keepalives_interval: "30",

tcp_keepalives_count: "3"

]

]*I am about 80% sure this isn’t the real password.

But WTF was going on?!

The Route of all Evil

The first warning signs started bleeding through in early 2025, but the sh*t truly hit the fan in October. Fortunately, there was a months-long breadcrumb trail of logs and errors spanning the entire cache server that led to the ultimate conclusion:

Lo and behold, it was absolutely the cloud provider’s fault.

Tuist spun up a new machine on Render.com, routed some traffic over, and the problem was gone. The new server was faster and seemed more reliable. Switching was a no-brainer.

Pedro has not been paid to endorse Render.com, but he does really like it.

The memory and queue configuration bought the team some time. Updates were shipped to the client CLI in the meantime that made the service issues less disruptive, for example, skipping some binary cache fetches when requests were slow.

This ultimately gave them breathing space to plan out a migration, moving their entire caching infra to the new-

-Wait, I’m told they got it shifted in just under a day. Nice.

The team used Cloudflare to gradually route caching traffic to the new service to gain confidence, and once things were up and running with no issues, the original service was shut down.

Memory and CPU were stable, responses were faster, and there were no more connection drops from timeouts.

Today, the caching server efficiently handles tens of thousands of requests per hour without breaking a sweat, using relatively minimal resources. All thanks to reliable underlying infra.

The original cloud provider, after months of ignoring complaints, finally declared an incident.

Too little, too late.

Lessons Learned

As iOS-developers-turned-founders, creating web infra at scale didn’t come naturally. Learning to do Hard Things the Hard Way leaves you with scars that make you a better engineer.

There’s a lot we can take away from this story.

Measure, measure, measure

Without data, you can’t set up alerts. Without alerts, you don’t know whether your system is working. Without those annoying daily connection drop warnings, the Tuist team would have been blind when the server finally fell over.

It’s tough to improve this when you’re building a devtool, because devs are particularly strict with their data, and reluctant to share. But it’s kind of the only way to improve a setup.

It’s especially important to keep an eye on the state of the network and the latency of requests, and doubly-so if an endpoint has high throughput. Every customer onboarded to Tuist introduced new spiky bursts of thousands of requests.

I also learned this the hard way, often finding out via a recruiter, or Twitter, that my Sign-in-with-Apple was busted during my first foray into startups.

Trust your gut

The team’s initial assumption was a problem with the cloud provider. With the patterns they found in the logs, it really appeared to be the only culprit.

The cloud provider successfully gaslit the Tuist team into thinking that maybe something was wrong with their network configuration.

It took a serious incident to discard their diagnosis and revisit the initial assumption.

Elixir & Erlang are a beast

When debugging CPU and memory, the Tuist team could open a shell terminal on the prod server. The Erlang runtime has a “fair scheduler” that allows critical traffic like SSH in to inspect what’s happening, even if the CPU is at capacity.

In a prod incident, this is functionally open-heart surgery. Other runtimes might block any kind of telemetry leaving the server and make debugging 10x harder, reinforcing the choice of Elixir.

Having recently melted my own VPS while building a Vapor app, this sounds like a dream. When my CPU hit capacity, I was locked out and forced to shut it off.

Design for failure

This is Erlang’s mantra.

The CLI wasn’t designed to recover from cache failures. Therefore, the incident was felt by some customers during the incident.

The Tuist team intends to embrace this mantra across all their tooling and server apps from now on: Things will break, so the system needs to be resilient to it. Design systems that can recover from failures.

The winners and losers from the October AWS outage only make this lesson more concrete.

Thanks again to Pedro Piñera Buendia for taking the time to share his War Story with me! You can try out Tuist yourself at https://tuist.dev

I’m hoping to make this a regular column on Jacob’s Tech Tavern. If you have a story you’d like to share, get in touch!